Summit User Guide

Note

Notable changes to Summit for 2024 allocations:

- Alpine Decomissioned (Jan 01, 2024)

Summit’s previous scratch filesystem, Alpine, was decommissioned on January 01, 2024.

- New Scratch Filesystem Available (Alpine2)

Alpine2, Summit’s new GPFS scratch filesystem is now available to replace the previous scratch filesystem. The new filesystem is mounted on Summit, Andes, and the DTNs. Returning users will need to transfer data onto Apline2 since data on the previous scratch filesystem is no longer available.

- Software Updates

Summit’s software has been updated. Returning users should recompile prior to running. A list of default software updates can be found on https://docs.olcf.ornl.gov/software/software-news.html . Please note the previous software stack remains available and can be accessed by loading the DefApps-2023 modulefile. For convenience, a DefApps-2024 is also provide to restore the most recent version of packages.

Summit Documentation Resources

In addition to this Summit User Guide, there are other sources of documentation, instruction, and tutorials that could be useful for Summit users.

The OLCF Training Archive provides a list of previous training events, including multi-day Summit Workshops. Some examples of topics addressed during these workshops include using Summit’s NVME burst buffers, CUDA-aware MPI, advanced networking and MPI, and multiple ways of programming multiple GPUs per node. You can also find simple tutorials and code examples for some common programming and running tasks in our Github tutorial page .

System Overview

Summit is an IBM system located at the Oak Ridge Leadership Computing Facility. With a theoretical peak double-precision performance of approximately 200 PF, it is one of the most capable systems in the world for a wide range of traditional computational science applications. It is also one of the “smartest” computers in the world for deep learning applications with a mixed-precision capability in excess of 3 EF.

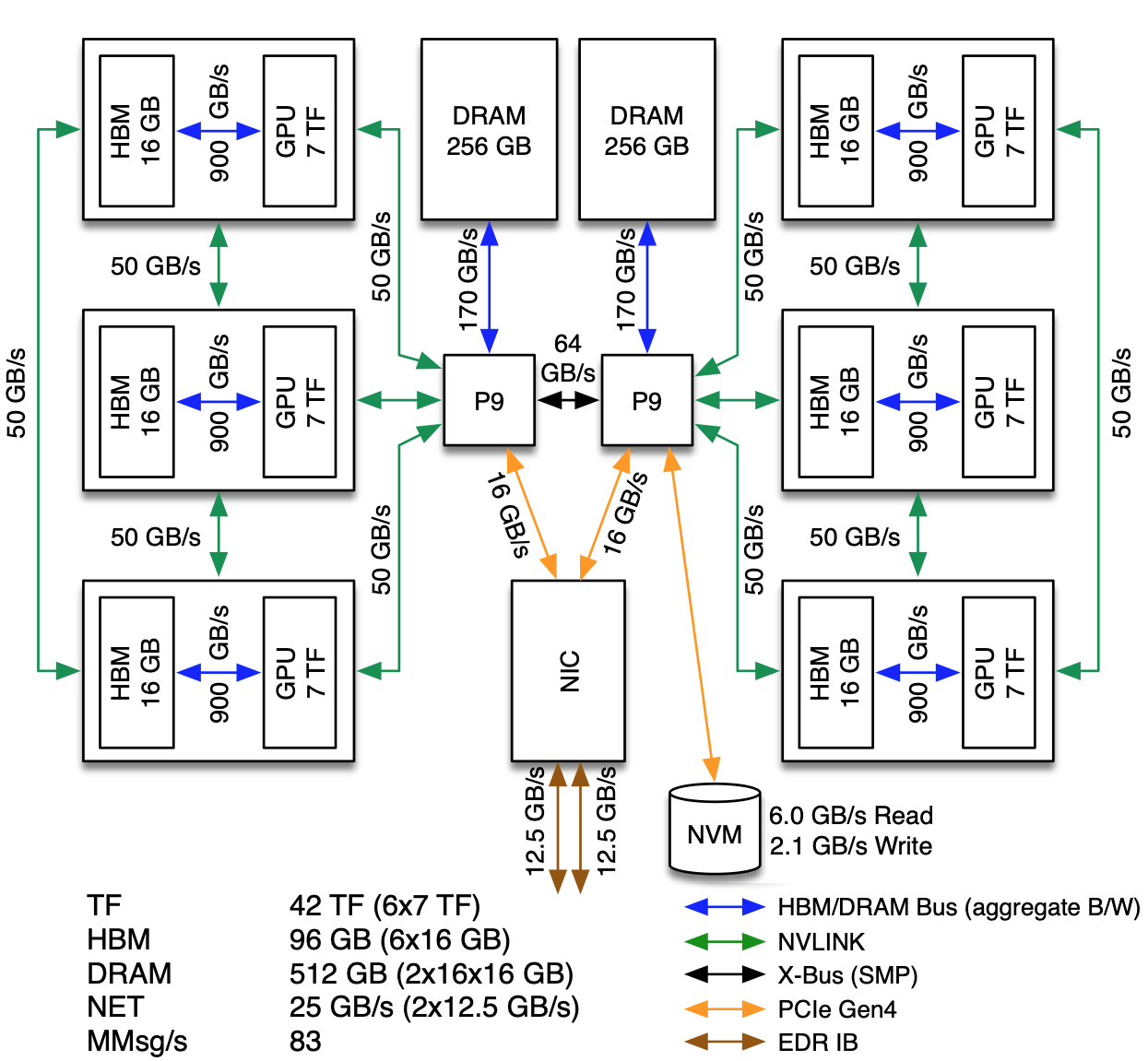

Summit Nodes

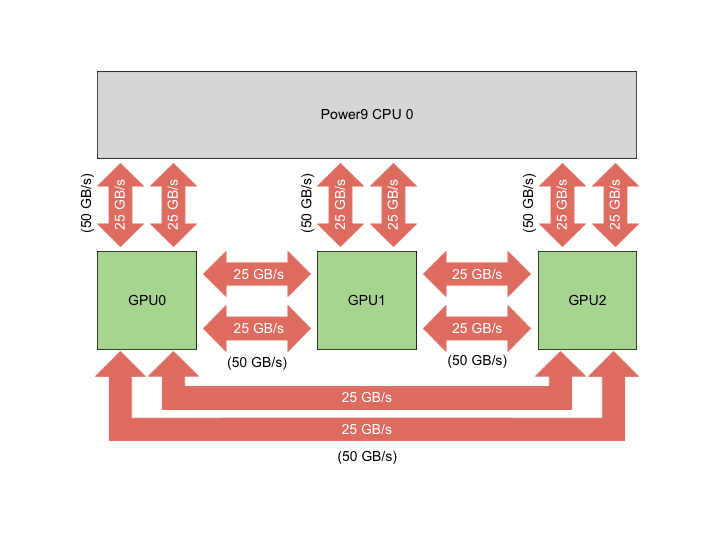

The basic building block of Summit is the IBM Power System AC922 node. Each of the approximately 4,600 compute nodes on Summit contains two IBM POWER9 processors and six NVIDIA Tesla V100 accelerators and provides a theoretical double-precision capability of approximately 40 TF. Each POWER9 processor is connected via dual NVLINK bricks, each capable of a 25GB/s transfer rate in each direction.

Most Summit nodes contain 512 GB of DDR4 memory for use by the POWER9 processors, 96 GB of High Bandwidth Memory (HBM2) for use by the accelerators, and 1.6TB of non-volatile memory that can be used as a burst buffer. A small number of nodes (54) are configured as “high memory” nodes. These nodes contain 2TB of DDR4 memory, 192GB of HBM2, and 6.4TB of non-volatile memory.

The POWER9 processor is built around IBM’s SIMD Multi-Core (SMC). The processor provides 22 SMCs with separate 32kB L1 data and instruction caches. Pairs of SMCs share a 512kB L2 cache and a 10MB L3 cache. SMCs support Simultaneous Multi-Threading (SMT) up to a level of 4, meaning each physical core supports up to 4 Hardware Threads.

The POWER9 processors and V100 accelerators are cooled with cold plate technology. The remaining components are cooled through more traditional methods, although exhaust is passed through a back-of-cabinet heat exchanger prior to being released back into the room. Both the cold plate and heat exchanger operate using medium temperature water which is more cost-effective for the center to maintain than chilled water used by older systems.

Node Types

On Summit, there are three major types of nodes you will encounter: Login, Launch, and Compute. While all of these are similar in terms of hardware (see: Summit Nodes), they differ considerably in their intended use.

Node Type |

Description |

|---|---|

Login |

When you connect to Summit, you’re placed on a login node. This is the place to write/edit/compile your code, manage data, submit jobs, etc. You should never launch parallel jobs from a login node nor should you run threaded jobs on a login node. Login nodes are shared resources that are in use by many users simultaneously. |

Launch |

When your batch script (or interactive batch job) starts running, it will execute on a Launch Node. (If you were a user of Titan, these are similar in function to service nodes on that system). All commands within your job script (or the commands you run in an interactive job) will run on a launch node. Like login nodes, these are shared resources so you should not run multiprocessor/threaded programs on Launch nodes. It is appropriate to launch the jsrun command from launch nodes. |

Compute |

Most of the nodes on Summit are compute nodes. These are where your parallel job executes. They’re accessed via the jsrun command. |

Although the nodes are logically organized into different types, they

all contain similar hardware. As a result of this homogeneous

architecture there is not a need to cross-compile when building on a

login node. Since login nodes have similar hardware resources as compute

nodes, any tests that are run by your build process (especially by

utilities such as autoconf and cmake) will have access to the

same type of hardware that is on compute nodes and should not require

intervention that might be required on non-homogeneous systems.

Note

Login nodes have (2) 16-core Power9 CPUs and (4) V100 GPUs. Compute nodes have (2) 22-core Power9 CPUs and (6) V100 GPUs.

System Interconnect

Summit nodes are connected to a dual-rail EDR InfiniBand network providing a node injection bandwidth of 23 GB/s. Nodes are interconnected in a Non-blocking Fat Tree topology. This interconnect is a three-level tree implemented by a switch to connect nodes within each cabinet (first level) along with Director switches (second and third level) that connect cabinets together.

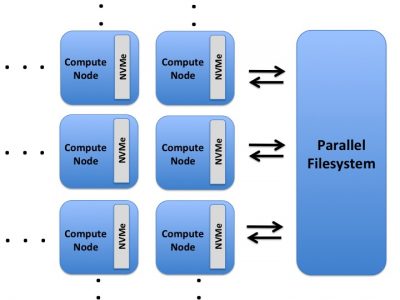

File Systems

Summit is connected to an IBM Spectrum Scale™ filesystem named Alpine2. Summit also has access to the center-wide NFS-based filesystem (which provides user and project home areas) and has access to the center’s Nearline archival storage system (Kronos) for user and project archival storage.

Operating System

Summit is running Red Hat Enterprise Linux (RHEL) version 8.2.

Hardware Threads

The IBM POWER9 processor supports Hardware Threads. Each of the POWER9’s physical cores has 4 “slices”. These slices provide Simultaneous Multi Threading (SMT) support within the core. Three SMT modes are supported: SMT4, SMT2, and SMT1. In SMT4 mode, each of the slices operates independently of the other three. This would permit four separate streams of execution (i.e. OpenMP threads or MPI tasks) on each physical core. In SMT2 mode, pairs of slices work together to run tasks. Finally, in SMT1 mode the four slices work together to execute the task/thread assigned to the physical core. Regardless of the SMT mode used, the four slices share the physical core’s L1 instruction & data caches. https://vimeo.com/283756938

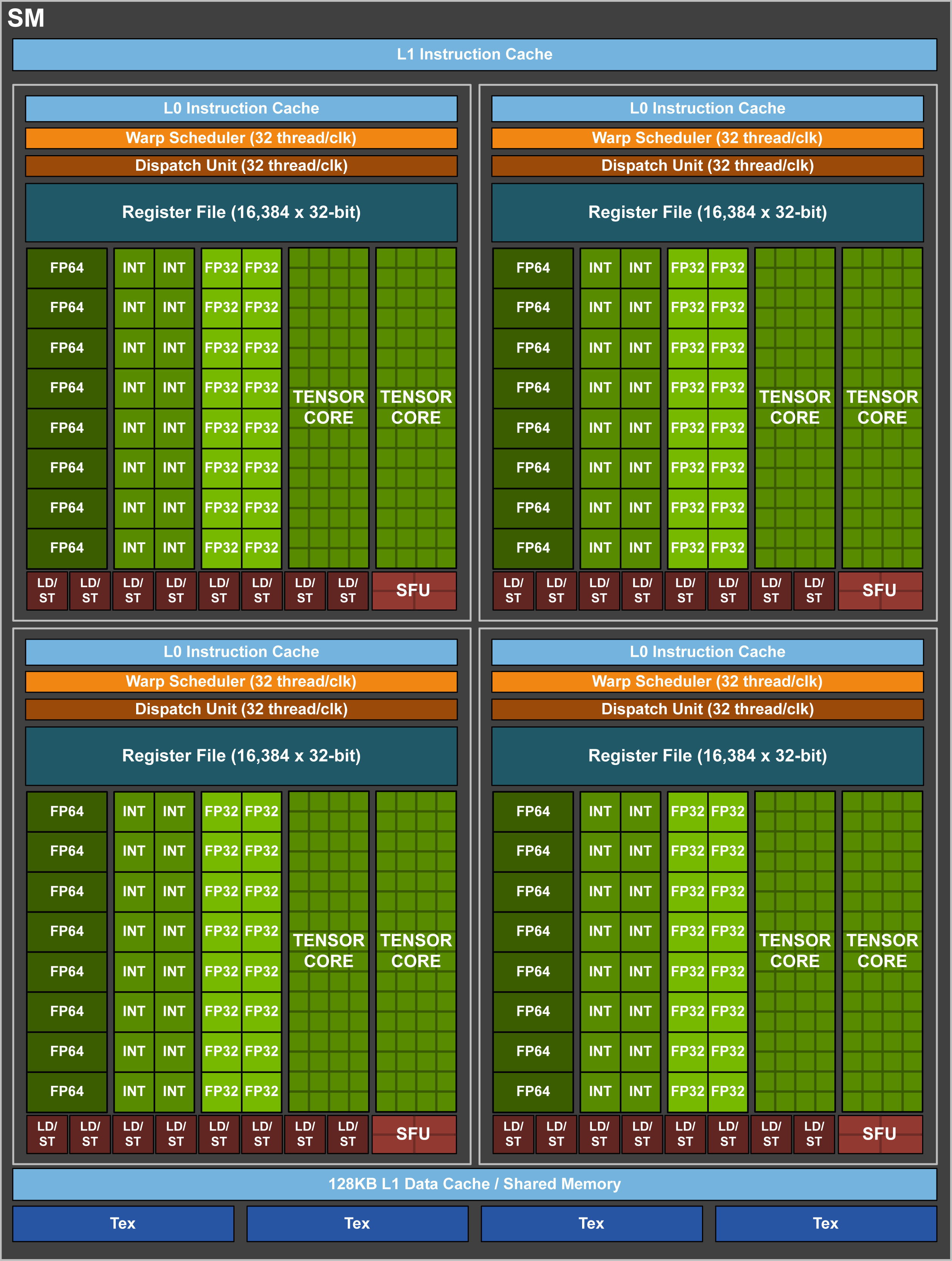

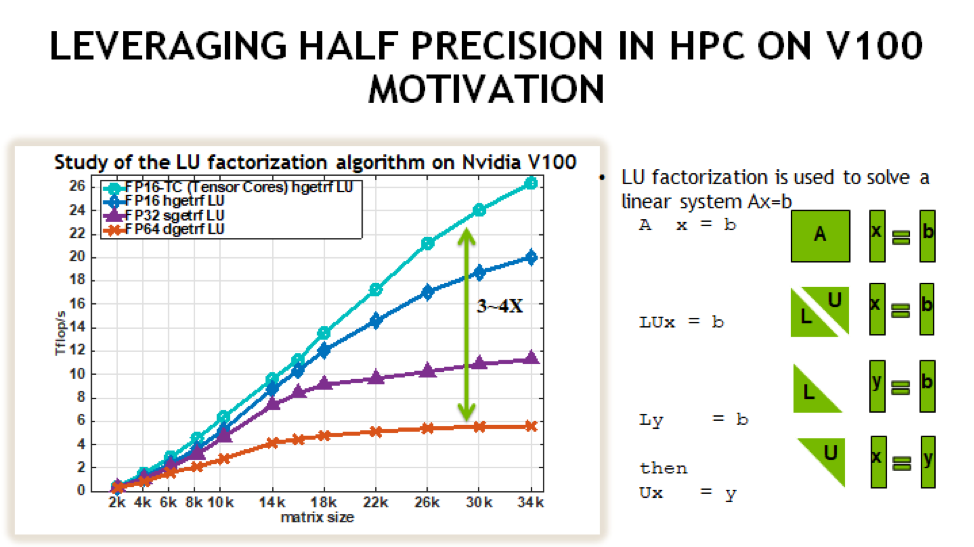

GPUs

Each Summit Compute node has 6 NVIDIA V100 GPUs. The NVIDIA Tesla V100 accelerator has a peak performance of 7.8 TFLOP/s (double-precision) and contributes to a majority of the computational work performed on Summit. Each V100 contains 80 streaming multiprocessors (SMs), 16 GB (32 GB on high-memory nodes) of high-bandwidth memory (HBM2), and a 6 MB L2 cache that is available to the SMs. The GigaThread Engine is responsible for distributing work among the SMs and (8) 512-bit memory controllers control access to the 16 GB (32 GB on high-memory nodes) of HBM2 memory. The V100 uses NVIDIA’s NVLink interconnect to pass data between GPUs as well as from CPU-to-GPU. We provide a more in-depth look into the NVIDIA Tesla V100 later in the Summit Guide.

Connecting

To connect to Summit, ssh to summit.olcf.ornl.gov. For example:

ssh username@summit.olcf.ornl.gov

For more information on connecting to OLCF resources, see Connecting for the first time.

Data and Storage

For more information about center-wide file systems and data archiving available on Summit, please refer to the pages on Data Storage and Transfers.

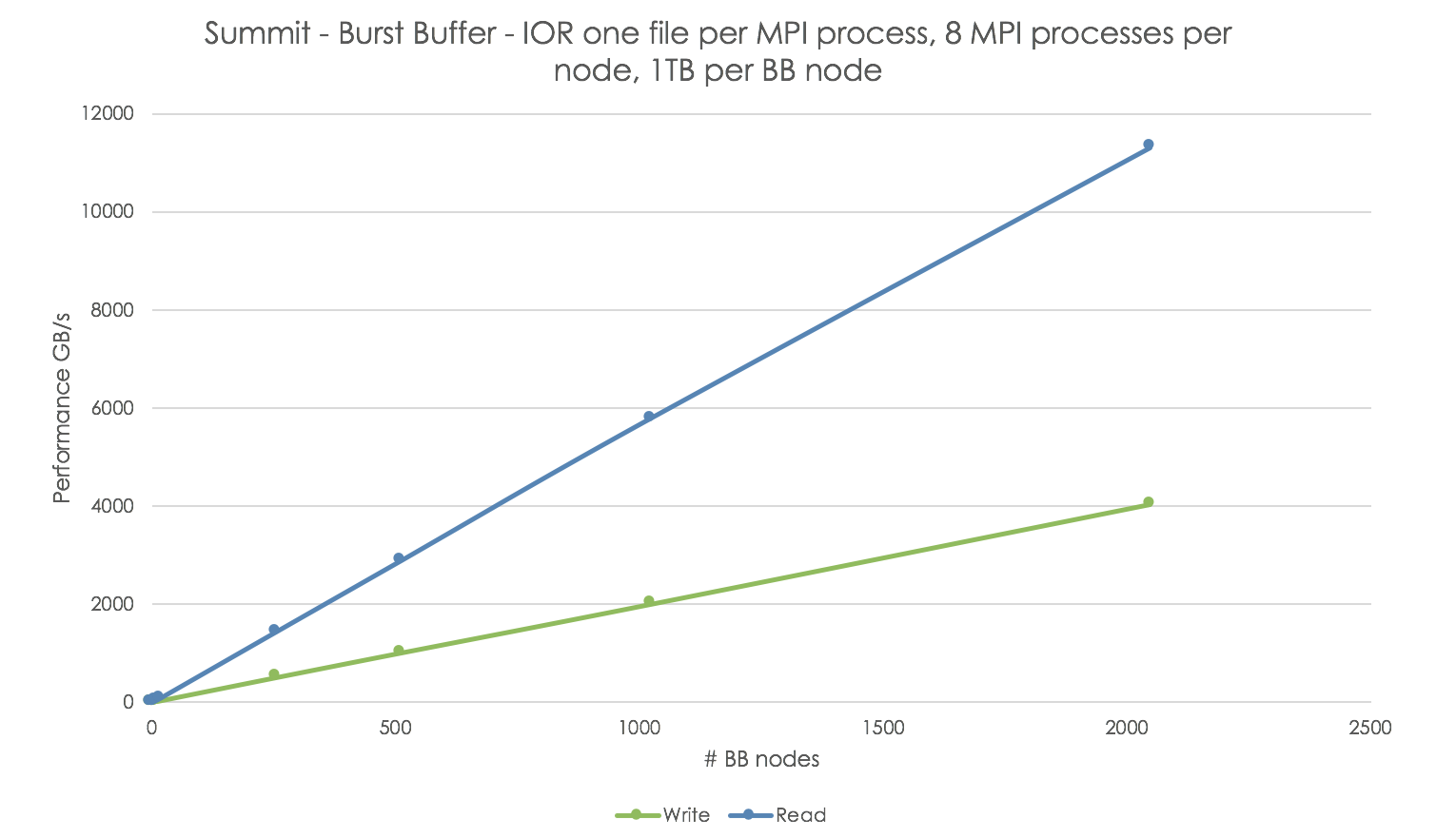

Each compute node on Summit has a 1.6TB Non-Volatile Memory (NVMe) storage device (high-memory nodes have a 6.4TB NVMe storage device), colloquially known as a “Burst Buffer” with theoretical performance peak of 2.1 GB/s for writing and 5.5 GB/s for reading. The NVMes could be used to reduce the time that applications wait for I/O. More information can be found later in the Burst Buffer section.

Software

Visualization and analysis tasks should be done on the Andes cluster. There are a few tools provided for various visualization tasks, as described in the Visualization tools section of the Andes User Guide.

For a full list of software available at the OLCF, please see the Software section (coming soon).

Shell & Programming Environments

OLCF systems provide many software packages and scientific libraries pre-installed at the system-level for users to take advantage of. To facilitate this, environment management tools are employed to handle necessary changes to the shell. The sections below provide information about using these management tools on Summit.

Default Shell

A user’s default shell is selected when completing the User Account Request form. The chosen shell is set across all OLCF resources, and is the shell interface a user will be presented with upon login to any OLCF system. Currently, supported shells include:

bash

tcsh

csh

ksh

If you would like to have your default shell changed, please contact the OLCF User Assistance Center at help@nccs.gov.

Environment Management with Lmod

Environment modules are provided through Lmod, a Lua-based module system for

dynamically altering shell environments. By managing changes to the shell’s

environment variables (such as PATH, LD_LIBRARY_PATH, and

PKG_CONFIG_PATH), Lmod allows you to alter the software available in your

shell environment without the risk of creating package and version combinations

that cannot coexist in a single environment.

Lmod is a recursive environment module system, meaning it is aware of module compatibility and actively alters the environment to protect against conflicts. Messages to stderr are issued upon Lmod implicitly altering the environment. Environment modules are structured hierarchically by compiler family such that packages built with a given compiler will only be accessible if the compiler family is first present in the environment.

Note

Lmod can interpret both Lua modulefiles and legacy Tcl modulefiles. However, long and logic-heavy Tcl modulefiles may require porting to Lua.

General Usage

Typical use of Lmod is very similar to that of interacting with

modulefiles on other OLCF systems. The interface to Lmod is provided by

the module command:

Command |

Description |

|---|---|

module -t list |

Shows a terse list of the currently loaded modules. |

module avail |

Shows a table of the currently available modules |

module help <modulename> |

Shows help information about <modulename> |

module show <modulename> |

Shows the environment changes made by the <modulename> modulefile |

module spider <string> |

Searches all possible modules according to <string> |

module load <modulename> […] |

Loads the given <modulename>(s) into the current environment |

module use <path> |

Adds <path> to the modulefile search cache and |

module unuse <path> |

Removes <path> from the modulefile search cache and |

module purge |

Unloads all modules |

module reset |

Resets loaded modules to system defaults |

module update |

Reloads all currently loaded modules |

Note

Modules are changed recursively. Some commands, such as

module swap, are available to maintain compatibility with scripts

using Tcl Environment Modules, but are not necessary since Lmod

recursively processes loaded modules and automatically resolves

conflicts.

Searching for modules

Modules with dependencies are only available when the underlying dependencies,

such as compiler families, are loaded. Thus, module avail will only display

modules that are compatible with the current state of the environment. To search

the entire hierarchy across all possible dependencies, the spider

sub-command can be used as summarized in the following table.

Command |

Description |

|---|---|

module spider |

Shows the entire possible graph of modules |

module spider <modulename> |

Searches for modules named <modulename> in the graph of possible modules |

module spider <modulename>/<version> |

Searches for a specific version of <modulename> in the graph of possible modules |

module spider <string> |

Searches for modulefiles containing <string> |

Defining custom module collections

Lmod supports caching commonly used collections of environment modules on a

per-user basis in $HOME/.lmod.d. To create a collection called “NAME” from

the currently loaded modules, simply call module save NAME. Omitting “NAME”

will set the user’s default collection. Saved collections can be recalled and

examined with the commands summarized in the following table.

Command |

Description |

|---|---|

module restore NAME |

Recalls a specific saved user collection titled “NAME” |

module restore |

Recalls the user-defined defaults |

module reset |

Resets loaded modules to system defaults |

module restore system |

Recalls the system defaults |

module savelist |

Shows the list user-defined saved collections |

Note

You should use unique names when creating collections to

specify the application (and possibly branch) you are working on. For

example, app1-development, app1-production, and

app2-production.

Note

In order to avoid conflicts between user-defined collections

on multiple compute systems that share a home file system (e.g.

/ccs/home/[userid]), lmod appends the hostname of each system to the

files saved in in your ~/.lmod.d directory (using the environment

variable LMOD_SYSTEM_NAME). This ensures that only collections

appended with the name of the current system are visible.

The following screencast shows an example of setting up user-defined module collections on Summit. https://vimeo.com/293582400

Compiling

Compilers

Available Compilers

The following compilers are available on Summit:

XL: IBM XL Compilers (loaded by default)

LLVM: LLVM compiler infrastructure

PGI: Portland Group compiler suite

NVHPC: Nvidia HPC SDK compiler suite

GNU: GNU Compiler Collection

NVCC: CUDA C compiler

PGI was bought out by Nvidia and have rebranded their compilers, incorporating them into the NVHPC compiler suite. There will be no more new releases of the PGI compilers.

Upon login, the default versions of the XL compiler suite and Spectrum Message Passing Interface (MPI) are added to each user’s environment through the modules system. No changes to the environment are needed to make use of the defaults.

Multiple versions of each compiler family are provided, and can be inspected using the modules system:

summit$ module -t avail gcc

/sw/summit/spack-envs/base/modules/site/Core:

gcc/7.5.0

gcc/9.1.0

gcc/9.3.0

gcc/10.2.0

gcc/11.1.0

C compilation

Note

type char is unsigned by default

Vendor |

Module |

Compiler |

Enable C99 |

Enable C11 |

Default signed char |

Define macro |

|---|---|---|---|---|---|---|

IBM |

|

xlc xlc_r |

|

|

|

|

GNU |

system default |

gcc |

|

|

|

|

GNU |

|

gcc |

|

|

|

|

LLVM |

|

clang |

default |

|

|

|

PGI |

|

pgcc |

|

|

|

|

NVHPC |

|

nvc |

|

|

|

|

C++ compilations

Note

type char is unsigned by default

Vendor |

Module |

Compiler |

Enable C++11 |

Enable C++14 |

Default signed char |

Define macro |

|---|---|---|---|---|---|---|

IBM |

|

xlc++, xlc++_r |

|

|

|

|

GNU |

system default |

g++ |

|

|

|

|

GNU |

|

g++ |

|

|

|

|

LLVM |

|

clang++ |

|

|

|

|

PGI |

|

pgc++ |

|

|

|

|

NVHPC |

|

nvc++ |

|

|

|

|

Fortran compilation

Vendor |

Module |

Compiler |

Enable F90 |

Enable F2003 |

Enable F2008 |

Define macro |

|---|---|---|---|---|---|---|

IBM |

|

xlf xlf90 xlf95 xlf2003 xlf2008 |

|

|

|

|

GNU |

system default |

gfortran |

|

|

|

|

LLVM |

|

xlflang |

n/a |

n/a |

n/a |

|

PGI |

|

pgfortran |

use |

use |

use |

|

NVHPC |

|

nvfortran |

use |

use |

use |

|

Note

The xlflang module currently conflicts with the clang module. This restriction is expected to be lifted in future releases.

MPI

MPI on Summit is provided by IBM Spectrum MPI. Spectrum MPI provides compiler wrappers that automatically choose the proper compiler to build your application.

The following compiler wrappers are available:

C: mpicc

C++: mpic++, mpiCC

Fortran: mpifort, mpif77, mpif90

While these wrappers conveniently abstract away linking of Spectrum MPI, it’s

sometimes helpful to see exactly what’s happening when invoked. The --showme

flag will display the full link lines, without actually compiling:

summit$ mpicc --showme

/sw/summit/xl/16.1.1-10/xlC/16.1.1/bin/xlc_r -I/sw/summit/spack-envs/base/opt/linux-rhel8-ppc64le/xl-16.1.1-10/spectrum-mpi-10.4.0.3-20210112-v7qymniwgi6mtxqsjd7p5jxinxzdkhn3/include -pthread -L/sw/summit/spack-envs/base/opt/linux-rhel8-ppc64le/xl-16.1.1-10/spectrum-mpi-10.4.0.3-20210112-v7qymniwgi6mtxqsjd7p5jxinxzdkhn3/lib -lmpiprofilesupport -lmpi_ibm

OpenMP

Note

When using OpenMP with IBM XL compilers, the thread-safe

compiler variant is required; These variants have the same name as the

non-thread-safe compilers with an additional _r suffix. e.g. to

compile OpenMPI C code one would use xlc_r

Note

OpenMP offloading support is still under active development. Performance and debugging capabilities in particular are expected to improve as the implementations mature.

Vendor |

3.1 Support |

Enable OpenMP |

4.x Support |

Enable OpenMP 4.x Offload |

|---|---|---|---|---|

IBM |

FULL |

|

FULL |

|

GNU |

FULL |

|

PARTIAL |

|

clang |

FULL |

|

PARTIAL |

|

xlflang |

FULL |

|

PARTIAL |

|

PGI |

FULL |

|

NONE |

NONE |

NVHPC |

FULL |

|

NONE |

NONE |

OpenACC

Vendor |

Module |

OpenACC Support |

Enable OpenACC |

|---|---|---|---|

IBM |

|

NONE |

NONE |

GNU |

system default |

NONE |

NONE |

GNU |

|

2.5 |

|

LLVM |

|

NONE |

NONE |

PGI |

|

2.5 |

|

NVHPC |

|

2.5 |

|

CUDA compilation

NVIDIA

CUDA C/C++ support is provided through the cuda module or throught the nvhpc module.

nvcc : Primary CUDA C/C++ compiler

Language support

-std=c++11 : provide C++11 support

--expt-extended-lambda : provide experimental host/device lambda support

--expt-relaxed-constexpr : provide experimental host/device constexpr support

Compiler support

NVCC currently supports XL, GCC, and PGI C++ backends.

--ccbin : set to host compiler location

CUDA Fortran compilation

IBM

The IBM compiler suite is made available through the default loaded xl module, the cuda module is also required.

xlcuf : primary Cuda fortran compiler, thread safe

Language support flags

-qlanglvl=90std : provide Fortran90 support

-qlanglvl=95std : provide Fortran95 support

-qlanglvl=2003std : provide Fortran2003 support

-qlanglvl=2008std : provide Fortran2003 support

PGI

The PGI compiler suite is available through the pgi module.

pgfortran : Primary fortran compiler with CUDA Fortran support

Language support:

Files with .cuf suffix automatically compiled with cuda fortran support

Standard fortran suffixed source files determines the standard involved, see the man page for full details

-Mcuda : Enable CUDA Fortran on provided source file

Linking in Libraries

OLCF systems provide many software packages and scientific

libraries pre-installed at the system-level for users to take advantage

of. In order to link these libraries into an application, users must

direct the compiler to their location. The module show command can

be used to determine the location of a particular library. For example

summit$ module show essl

------------------------------------------------------------------------------------

/sw/summit/modulefiles/core/essl/6.1.0-1:

------------------------------------------------------------------------------------

whatis("ESSL 6.1.0-1 ")

prepend_path("LD_LIBRARY_PATH","/sw/summit/essl/6.1.0-1/essl/6.1/lib64")

append_path("LD_LIBRARY_PATH","/sw/summit/xl/16.1.1-beta4/lib")

prepend_path("MANPATH","/sw/summit/essl/6.1.0-1/essl/6.1/man")

setenv("OLCF_ESSL_ROOT","/sw/summit/essl/6.1.0-1/essl/6.1")

help([[ESSL 6.1.0-1

]])

When this module is loaded, the $OLCF_ESSL_ROOT environment variable

holds the path to the ESSL installation, which contains the lib64/ and

include/ directories:

summit$ module load essl

summit$ echo $OLCF_ESSL_ROOT

/sw/summit/essl/6.1.0-1/essl/6.1

summit$ ls $OLCF_ESSL_ROOT

FFTW3 READMES REDIST.txt include iso-swid ivps lap lib64 man msg

The following screencast shows an example of linking two libraries into a simple program on Summit. https://vimeo.com/292015868

Running Jobs

As is the case on other OLCF systems, computational work on Summit is performed within jobs. A typical job consists of several components:

A submission script

An executable

Input files needed by the executable

Output files created by the executable

In general, the process for running a job is to:

Prepare executables and input files

Write the batch script

Submit the batch script

Monitor the job’s progress before and during execution

The following sections will provide more information regarding running jobs on Summit. Summit uses IBM Spectrum Load Sharing Facility (LSF) as the batch scheduling system.

Login, Launch, and Compute Nodes

Recall from the System Overview

section that Summit has three types of nodes: login, launch, and

compute. When you log into the system, you are placed on a login node.

When your Batch Scripts or Interactive Jobs run,

the resulting shell will run on a launch node. Compute nodes are accessed

via the jsrun command. The jsrun command should only be issued

from within an LSF job (either batch or interactive) on a launch node.

Otherwise, you will not have any compute nodes allocated and your parallel

job will run on the login node. If this happens, your job will interfere with

(and be interfered with by) other users’ login node tasks. jsrun is covered

in-depth in the Job Launcher (jsrun) section.

Per-User Login Node Resource Limits

Because the login nodes are resources shared by all Summit users, we utilize

cgroups to help better ensure resource availability for all users of the

shared nodes. By default each user is limited to 16 hardware-threads, 16GB

of memory, and 1 GPU. Please note that limits are set per user and not

individual login sessions. All user processes on a node are contained within a

single cgroup and share the cgroup’s limits.

If a process from any of a user’s login sessions reaches 4 hours of CPU-time, all login sessions will be limited to .5 hardware-thread. After 8 hours of CPU-time, the process is automatically killed. To reset the cgroup limits on a node to default once the 4 hour CPU-time reduction has been reached, kill the offending process and start a new login session to the node.

Users can run command check_cgroup_user on login nodes to check what processes

were recently killed by cgroup limits.

Note

Login node limits are set per user and not per individual login session. All user processes on a node are contained within a single cgroup and will share the cgroup’s limits.

Batch Scripts

The most common way to interact with the batch system is via batch jobs. A batch job is simply a shell script with added directives to request various resources from or provide certain information to the batch scheduling system. Aside from the lines containing LSF options, the batch script is simply the series commands needed to set up and run your job.

To submit a batch script, use the bsub command: bsub myjob.lsf

If you’ve previously used LSF, you’re probably used to submitting a job

with input redirection (i.e. bsub < myjob.lsf). This is not needed

(and will not work) on Summit.

As an example, consider the following batch script:

1#!/bin/bash

2# Begin LSF Directives

3#BSUB -P ABC123

4#BSUB -W 3:00

5#BSUB -nnodes 2048

6#BSUB -alloc_flags gpumps

7#BSUB -J RunSim123

8#BSUB -o RunSim123.%J

9#BSUB -e RunSim123.%J

10

11cd $MEMBERWORK/abc123

12cp $PROJWORK/abc123/RunData/Input.123 ./Input.123

13date

14jsrun -n 4092 -r 2 -a 12 -g 3 ./a.out

15cp my_output_file /ccs/proj/abc123/Output.123

Note

For Moderate Enhanced Projects, job scripts need to add “-l” (“ell”) to the shell specification, similar to interactive usage.

Line # |

Option |

Description |

|---|---|---|

1 |

Shell specification. This script will run under with bash as the shell. Moderate enhanced

projects should add |

|

2 |

Comment line |

|

3 |

Required |

This job will charge to the ABC123 project |

4 |

Required |

Maximum walltime for the job is 3 hours |

5 |

Required |

The job will use 2,048 compute nodes |

6 |

Optional |

Enable GPU Multi-Process Service |

7 |

Optional |

The name of the job is RunSim123 |

8 |

Optional |

Write standard output to a file named RunSim123.#, where # is the job ID assigned by LSF |

9 |

Optional |

Write standard error to a file named RunSim123.#, where # is the job ID assigned by LSF |

10 |

Blank line |

|

11 |

Change into one of the scratch filesystems |

|

12 |

Copy input files into place |

|

13 |

Run the |

|

14 |

Run the executable on the allocated compute nodes |

|

15 |

Copy output files from the scratch area into a more permanent location |

Interactive Jobs

Most users will find batch jobs to be the easiest way to interact with the system, since they permit you to hand off a job to the scheduler and then work on other tasks; however, it is sometimes preferable to run interactively on the system. This is especially true when developing, modifying, or debugging a code.

Since all compute resources are managed/scheduled by LSF, it is not possible

to simply log into the system and begin running a parallel code interactively.

You must request the appropriate resources from the system and, if necessary,

wait until they are available. This is done with an “interactive batch” job.

Interactive batch jobs are submitted via the command line, which

supports the same options that are passed via #BSUB parameters in a

batch script. The final options on the command line are what makes the

job “interactive batch”: -Is followed by a shell name. For example,

to request an interactive batch job (with bash as the shell) equivalent

to the sample batch script above, you would use the command:

bsub -W 3:00 -nnodes 2048 -P ABC123 -Is /bin/bash

As pointed out in Login, Launch, and Compute Nodes, you will be placed on

a launch (a.k.a. “batch”) node upon launching an interactive job and as usual

need to use jsrun to access the compute node(s):

$ bsub -Is -W 0:10 -nnodes 1 -P STF007 $SHELL

Job <779469> is submitted to default queue <batch>.

<<Waiting for dispatch ...>>

<<Starting on batch2>>

$ hostname

batch2

$ jsrun -n1 hostname

a35n03

Common bsub Options

The table below summarizes options for submitted jobs. Unless otherwise

noted, these can be used from batch scripts or interactive jobs. For

interactive jobs, the options are simply added to the bsub command

line. For batch scripts, they can either be added on the bsub

command line or they can appear as a #BSUB directive in the batch

script. If conflicting options are specified (i.e. different walltime

specified on the command line versus in the script), the option on the

command line takes precedence. Note that LSF has numerous options; only

the most common ones are described here. For more in-depth information

about other LSF options, see the bsub man page.

Option |

Example Usage |

Description |

|---|---|---|

|

|

Requested maximum walltime. NOTE: The format is [hours:]minutes, not [[hours:]minutes:]seconds like PBS/Torque/Moab |

|

|

Number of nodes NOTE: There is specified with only one hyphen (i.e. -nnodes, not –nnodes) |

|

|

Specifies the project to which the job should be charged |

|

|

File into which

job STDOUT should be directed (%J will be replaced with the job ID number) If

you do not also specify a STDERR file with |

|

|

File into which job STDERR should be directed (%J will be replaced with the job ID number) |

|

|

Specifies the name of the job (if not present, LSF will use the name of the job script as the job’s name) |

|

|

Place a dependency on the job |

|

|

Send a job report via email when the job completes |

|

|

Use X11 forwarding |

|

|

Used to request GPU Multi-Process Service (MPS) and to set SMT (Simultaneous Multithreading) levels. Only one “#BSUB alloc_flags” command is recognized so multiple alloc_flags options need to be enclosed in quotes and space-separated. Setting gpumps enables NVIDIA’s Multi-Process Service, which allows multiple MPI ranks to simultaneously access a GPU. Setting smtn (where n is 1, 2, or 4) sets different SMT levels. To run with 2 hardware threads per physical core, you’d use smt2. The default level is smt4. |

Allocation-wide Options

The -alloc_flags option to bsub is used to set allocation-wide options.

These settings are applied to every compute node in a job. Only one instance of

the flag is accepted, and multiple alloc_flags values should be enclosed in

quotes and space-separated. For example, -alloc_flags "gpumps smt1.

The most common values (smt{1,2,4}, gpumps, gpudefault) are detailed in

the following sections.

This option can also be used to provide additional resources to GPFS service processes, described in the GPFS System Service Isolation section.

Hardware Threads

Hardware threads are a feature of the POWER9 processor through which

individual physical cores can support multiple execution streams,

essentially looking like one or more virtual cores (similar to

hyperthreading on some Intel® microprocessors). This feature is often

called Simultaneous Multithreading or SMT. The POWER9 processor on

Summit supports SMT levels of 1, 2, or 4, meaning (respectively) each

physical core looks like 1, 2, or 4 virtual cores. The SMT level is

controlled by the -alloc_flags option to bsub. For example, to

set the SMT level to 2, add the line #BSUB –alloc_flags smt2 to your

batch script or add the option -alloc_flags smt2 to you bsub

command line.

The default SMT level is 4.

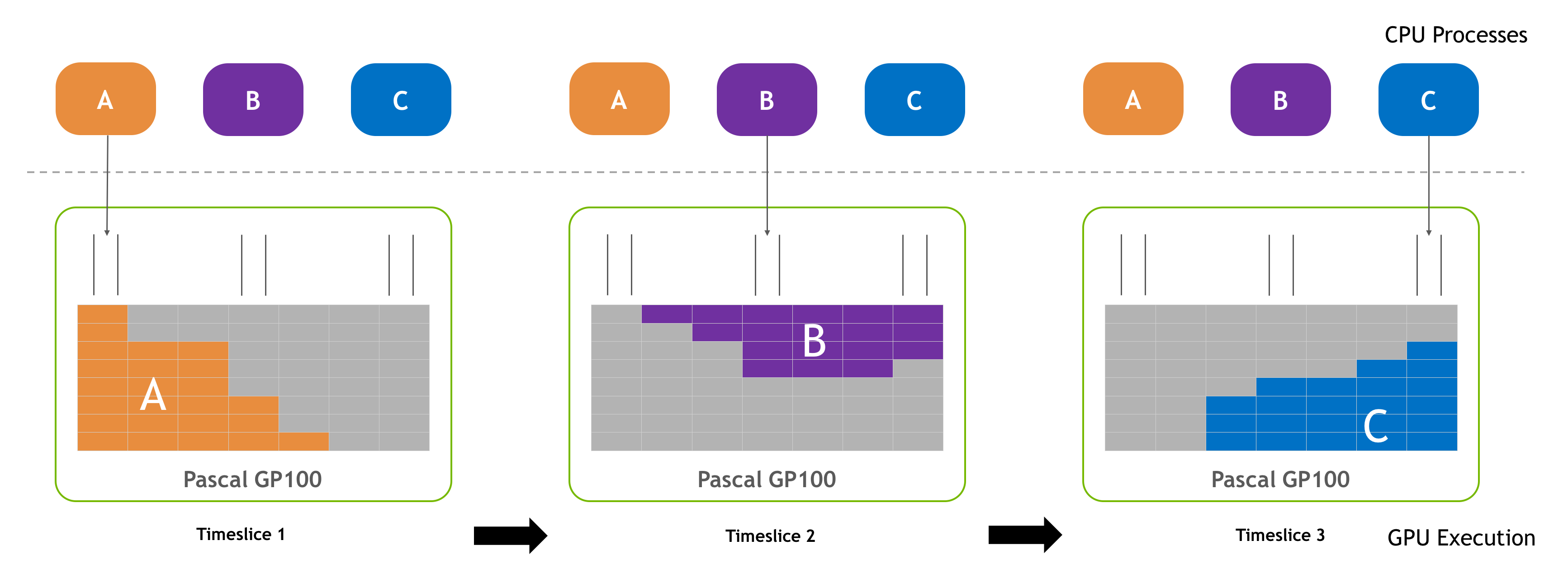

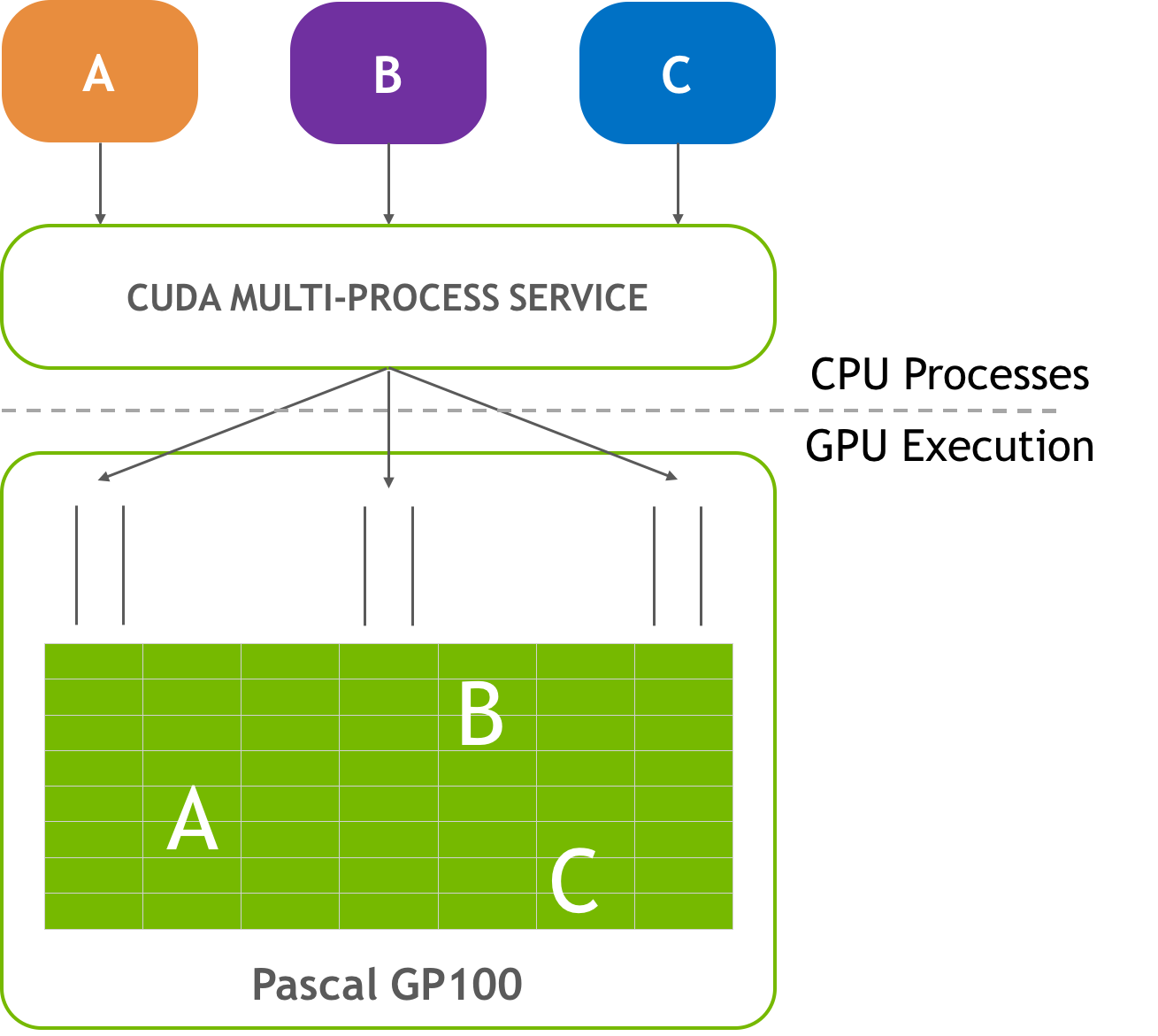

MPS

The Multi-Process Service (MPS) enables multiple processes (e.g. MPI

ranks) to concurrently share the resources on a single GPU. This is

accomplished by starting an MPS server process, which funnels the work

from multiple CUDA contexts (e.g. from multiple MPI ranks) into a single

CUDA context. In some cases, this can increase performance due to better

utilization of the resources. As mentioned in the Common bsub Options

section above, MPS can be enabled with the -alloc_flags "gpumps" option to

bsub. The following screencast shows an example of how to start an MPS

server process for a job: https://vimeo.com/292016149

GPU Compute Modes

Summit’s V100 GPUs are configured to have a default compute mode of

EXCLUSIVE_PROCESS. In this mode, the GPU is assigned to only a single

process at a time, and can accept work from multiple process threads

concurrently.

It may be desirable to change the GPU’s compute mode to DEFAULT, which

enables multiple processes and their threads to share and submit work to it

simultaneously. To change the compute mode to DEFAULT, use the

-alloc_flags gpudefault option.

NVIDIA recommends using the EXCLUSIVE_PROCESS compute mode (the default on

Summit) when using the Multi-Process Service, but both MPS and the compute mode

can be changed by providing both values: -alloc_flags "gpumps gpudefault".

Batch Environment Variables

LSF provides a number of environment variables in your job’s shell

environment. Many job parameters are stored in environment variables and

can be queried within the batch job. Several of these variables are

summarized in the table below. This is not an all-inclusive list of

variables available to your batch job; in particular only LSF variables

are discussed, not the many “standard” environment variables that will

be available (such as $PATH).

Variable |

Description |

|---|---|

|

The ID assigned to the job by LSF |

|

The job’s process ID |

|

The job’s index (if it belongs to a job array) |

|

The hosts assigned to run the job |

|

The queue from which the job was dispatched |

|

Set to “Y” for an interactive job; otherwise unset |

|

The directory from which the job was submitted |

Job States

A job will progress through a number of states through its lifetime. The states you’re most likely to see are:

State |

Description |

|---|---|

PEND |

Job is pending |

RUN |

Job is running |

DONE |

Job completed normally (with an exit code of 0) |

EXIT |

Job completed abnormally |

PSUSP |

Job was suspended (either by the user or an administrator) while pending |

USUSP |

Job was suspended (either by the user or an administrator) after starting |

SSUSP |

Job was suspended by the system after starting |

Note

Jobs may end up in the PSUSP state for a number of reasons. Two common reasons for PSUSP jobs include jobs that have been held by the user or jobs with unresolved dependencies.

Another common reason that jobs end up in a PSUSP state is a job that the system is unable to start. You may notice a job alternating between PEND and RUN states a few times and ultimately ends up as PSUSP. In this case, the system attempted to start the job but failed for some reason. This can be due to a system issue, but we have also seen this casued by improper settings on user ~/.ssh/config files. (The batch system uses SSH, and the improper settings cause SSH to fail.) If you notice your jobs alternating between PEND and RUN, you might want to check permissions of your ~/.ssh/config file to make sure it does not have write permission for “group” or “other”. (A setting of read/write for the user and no other permissions, which can be set with chmod 600 ~/.ssh/config, is recommended.)

Scheduling Policy

In a simple batch queue system, jobs run in a first-in, first-out (FIFO) order. This often does not make effective use of the system. A large job may be next in line to run. If the system is using a strict FIFO queue, many processors sit idle while the large job waits to run. Backfilling would allow smaller, shorter jobs to use those otherwise idle resources, and with the proper algorithm, the start time of the large job would not be delayed. While this does make more effective use of the system, it indirectly encourages the submission of smaller jobs.

The DOE Leadership-Class Job Mandate

As a DOE Leadership Computing Facility, the OLCF has a mandate that a large portion of Summit’s usage come from large, leadership-class (aka capability) jobs. To ensure the OLCF complies with DOE directives, we strongly encourage users to run jobs on Summit that are as large as their code will warrant. To that end, the OLCF implements queue policies that enable large jobs to run in a timely fashion.

Note

The OLCF implements queue policies that encourage the submission and timely execution of large, leadership-class jobs on Summit.

The basic priority-setting mechanism for jobs waiting in the queue is the time a job has been waiting relative to other jobs in the queue.

If your jobs require resources outside these queue policies such as higher priority or longer walltimes, please contact help@olcf.ornl.gov.

Job Priority by Processor Count

Jobs are aged according to the job’s requested processor count (older age equals higher queue priority). Each job’s requested processor count places it into a specific bin. Each bin has a different aging parameter, which all jobs in the bin receive.

Bin |

Min Nodes |

Max Nodes |

Max Walltime (Hours) |

Aging Boost (Days) |

|---|---|---|---|---|

1 |

2,765 |

4,608 |

24.0 |

15 |

2 |

922 |

2,764 |

24.0 |

10 |

3 |

92 |

921 |

12.0 |

0 |

4 |

46 |

91 |

6.0 |

0 |

5 |

1 |

45 |

2.0 |

0 |

batch Queue Policy

The batch queue (and the batch-spi queue for Moderate Enhanced security

enclave projects) is the default queue for production work on Summit. Most

work on Summit is handled through this queue. It enforces the following

policies:

Limit of (4) eligible-to-run jobs per user.

Jobs in excess of the per user limit above will be placed into a held state, but will change to eligible-to-run at the appropriate time.

Users may have only (100) jobs queued in the

batchqueue at any state at any time. Additional jobs will be rejected at submit time.

Note

The eligible-to-run state is not the running state. Eligible-to-run jobs have not started and are waiting for resources. Running jobs are actually executing.

batch-hm Queue Policy

The batch-hm queue (and the batch-hm-spi queue for Moderate Enhanced

security enclave projects) is used to access Summit’s high-memory nodes. Jobs

may use all 54 nodes. It enforces the following policies:

Limit of (4) eligible-to-run jobs per user.

Jobs in excess of the per user limit above will be placed into a held state, but will change to eligible-to-run at the appropriate time.

Users may have only (25) jobs queued in the

batch-hmqueue at any state at any time. Additional jobs will be rejected at submit time.

batch-hm job limits:

Min Nodes |

Max Nodes |

Max Walltime (Hours) |

|---|---|---|

1 |

54 |

24.0 |

To submit a job to the batch-hm queue, add the -q batch-hm option to your

bsub command or #BSUB -q batch-hm to your job script.

killable Queue Policy

The killable queue is a preemptable queue that allows jobs in bins 4 and 5

to request walltimes up to 24 hours. Jobs submitted to the killable queue will

be preemptable once the job reaches the guaranteed runtime limit as shown in the

table below. For example, a job in bin 5 submitted to the killable queue can

request a walltime of 24 hours. The job will be preemptable after two hours of

run time. Similarly, a job in bin 4 will be preemptable after six hours of run

time. Once a job is preempted, the job will be resubmitted by default with the

original limits as requested in the job script and will have the same JOBID.

Preemptable job limits:

Bin |

Min Nodes |

Max Nodes |

Max Walltime (Hours) |

Guaranteed Walltime |

|---|---|---|---|---|

4 |

46 |

91 |

24.0 |

6.0 (hours) |

5 |

1 |

45 |

24.0 |

2.0 (hours) |

Warning

If a job in the killable queue does not reach its requested

walltime, it will continue to use allocation time with each automatic

resubmission until it either reaches the requested walltime during a single

continuous run, or is manually killed by the user. Allocations are always

charged based on actual compute time used by all jobs.

To submit a job to the killable queue, add the -q killable option to your

bsub command or #BSUB -q killable to your job script.

To prevent a preempted job from being automatically requeued, the BSUB -rn

flag can be used at submit time.

debug Queue Policy

The debug queue (and the debug-spi queue for Moderate Enhanced security

enclave projects) can be used to access Summit’s compute resources for short

non-production debug tasks. The queue provides a higher priority compared to

jobs of the same job size bin in production queues. Production work and job

chaining in the debug queue is prohibited. Each user is limited to one job in

any state in the debug queue at any one point. Attempts to submit multiple jobs

to the debug queue will be rejected upon job submission.

debug job limits:

Min Nodes |

Max Nodes |

Max Walltime (Hours) |

Max queued any state (per user) |

Aging Boost (Days) |

|---|---|---|---|---|

1 |

unlimited |

2.0 |

1 |

2 |

To submit a job to the debug queue, add the -q debug option to your

bsub command or #BSUB -q debug to your job script.

Note

Production work and job chaining in the debug queue is prohibited.

SPI/KDI Citadel Queue Policy (Moderate Enhanced Projects)

There are special queue names when submitting jobs to citadel.ccs.ornl.gov

(the Moderate Enhanced version of Summit). These queues are: batch-spi,

batch-hm-spi, and debug-spi. For example, to submit a job to the

batch-spi queue on Citadel, you would need -q batch-spi when using the

bsub command or #BSUB -q batch-spi when using a job script.

Except for the enhanced security policies for jobs in these queues, all other queue properties are the same as the respective Summit queues described above, such as maximum walltime and number of eligible running jobs.

Warning

If you submit a job to a “normal” Summit queue while on Citadel, such as

-q batch, your job will be unable to launch.

Allocation Overuse Policy

Projects that overrun their allocation are still allowed to run on OLCF

systems, although at a reduced priority. Like the adjustment for the

number of processors requested above, this is an adjustment to the

apparent submit time of the job. However, this adjustment has the effect

of making jobs appear much younger than jobs submitted under projects

that have not exceeded their allocation. In addition to the priority

change, these jobs are also limited in the amount of wall time that can

be used. For example, consider that job1 is submitted at the same

time as job2. The project associated with job1 is over its

allocation, while the project for job2 is not. The batch system will

consider job2 to have been waiting for a longer time than job1.

Additionally, projects that are at 125% of their allocated time will be

limited to only 3 running jobs at a time. The adjustment to the

apparent submit time depends upon the percentage that the project is

over its allocation, as shown in the table below:

% Of Allocation Used |

Priority Reduction |

|---|---|

< 100% |

0 days |

100% to 125% |

30 days |

> 125% |

365 days |

System Reservation Policy

Projects may request to reserve a set of nodes for a period of time by contacting help@olcf.ornl.gov. If the reservation is granted, the reserved nodes will be blocked from general use for a given period of time. Only users that have been authorized to use the reservation can utilize those resources. To access the reservation, please add -U {reservation name} to bsub or job script. Since no other users can access the reserved resources, it is crucial that groups given reservations take care to ensure the utilization on those resources remains high. To prevent reserved resources from remaining idle for an extended period of time, reservations are monitored for inactivity. If activity falls below 50% of the reserved resources for more than (30) minutes, the reservation will be canceled and the system will be returned to normal scheduling. A new reservation must be requested if this occurs.

The requesting project’s allocation is charged according to the time window granted, regardless of actual utilization. For example, an 8-hour, 2,000 node reservation on Summit would be equivalent to using 16,000 Summit node-hours of a project’s allocation.

Job Dependencies

As is the case with many other queuing systems, it is possible to place dependencies on jobs to prevent them from running until other jobs have started/completed/etc. Several possible dependency settings are described in the table below:

Expression |

Meaning |

|---|---|

|

The job will not start until

job 12345 starts. Job 12345 is considered to have started if is in any of the

following states: USUSP, SSUSP, DONE, EXIT or RUN (with any pre-execution

command specified by |

|

The job will not start until

job 12345 has a state of DONE (i.e. completed normally). If a job ID is given

with no condition, |

|

The job will not start until job 12345 has a state of EXIT (i.e. completed abnormally) |

|

The job will not start until job 12345 has a state of EXIT or DONE |

Dependency expressions can be combined with logical operators. For

example, if you want a job held until job 12345 is DONE and job 12346

has started, you can use #BSUB -w "done(12345) && started(12346)"

Job Launcher (jsrun)

The default job launcher for Summit is jsrun. jsrun was developed by

IBM for the Oak Ridge and Livermore Power systems. The tool will execute

a given program on resources allocated through the LSF batch scheduler;

similar to mpirun and aprun functionality.

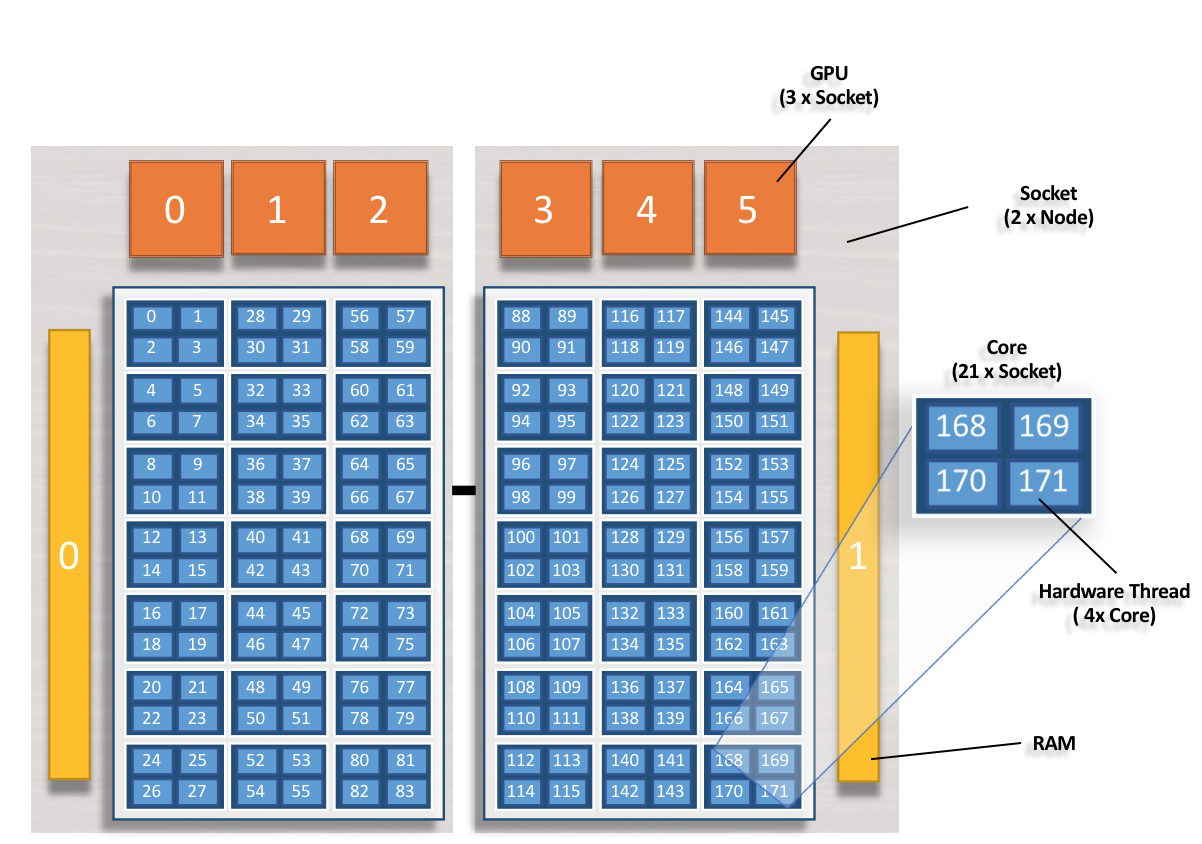

Compute Node Description

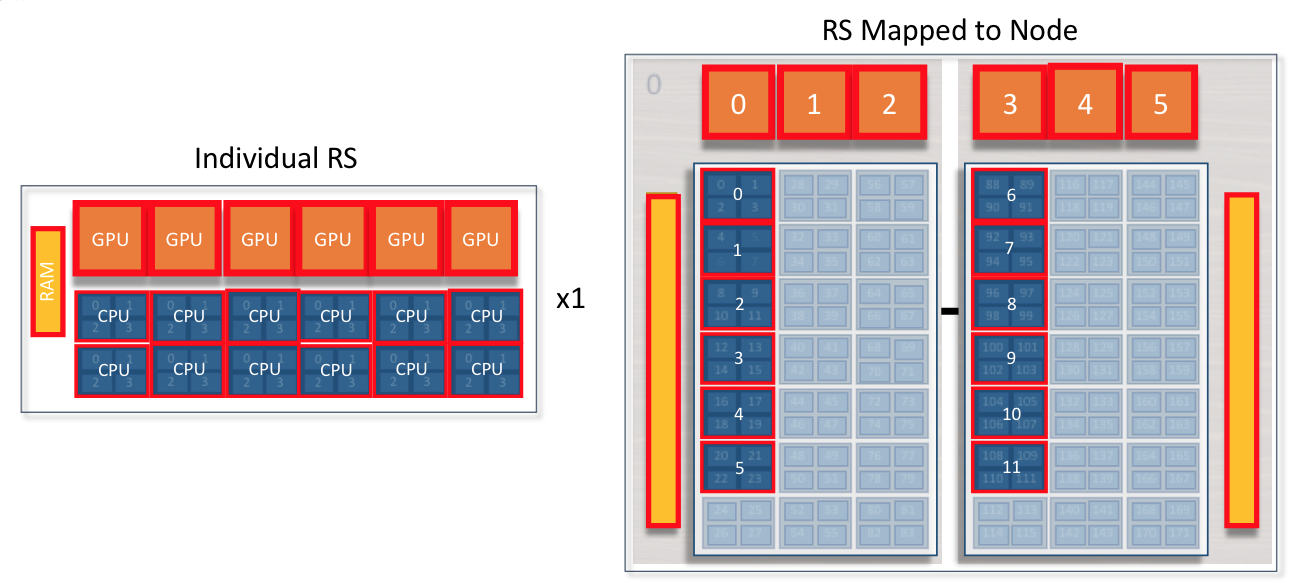

The following compute node image will be used to discuss jsrun resource sets and layout.

1 node

2 sockets (grey)

42 physical cores* (dark blue)

168 hardware cores (light blue)

6 GPUs (orange)

2 Memory blocks (yellow)

*Core Isolation: 1 core on each socket has been set aside for overhead and is not available for allocation through jsrun. The core has been omitted and is not shown in the above image.

Resource Sets

While jsrun performs similar job launching functions as aprun and

mpirun, its syntax is very different. A large reason for syntax

differences is the introduction of the resource set concept. Through

resource sets, jsrun can control how a node appears to each job. Users

can, through jsrun command line flags, control which resources on a node

are visible to a job. Resource sets also allow the ability to run

multiple jsruns simultaneously within a node. Under the covers, a

resource set is a cgroup.

At a high level, a resource set allows users to configure what a node look like to their job.

jsrun will create one or more resource sets within a node. Each resource set will contain 1 or more cores and 0 or more GPUs. A resource set can span sockets, but it may not span a node. While a resource set can span sockets within a node, consideration should be given to the cost of cross-socket communication. By creating resource sets only within sockets, costly communication between sockets can be prevented.

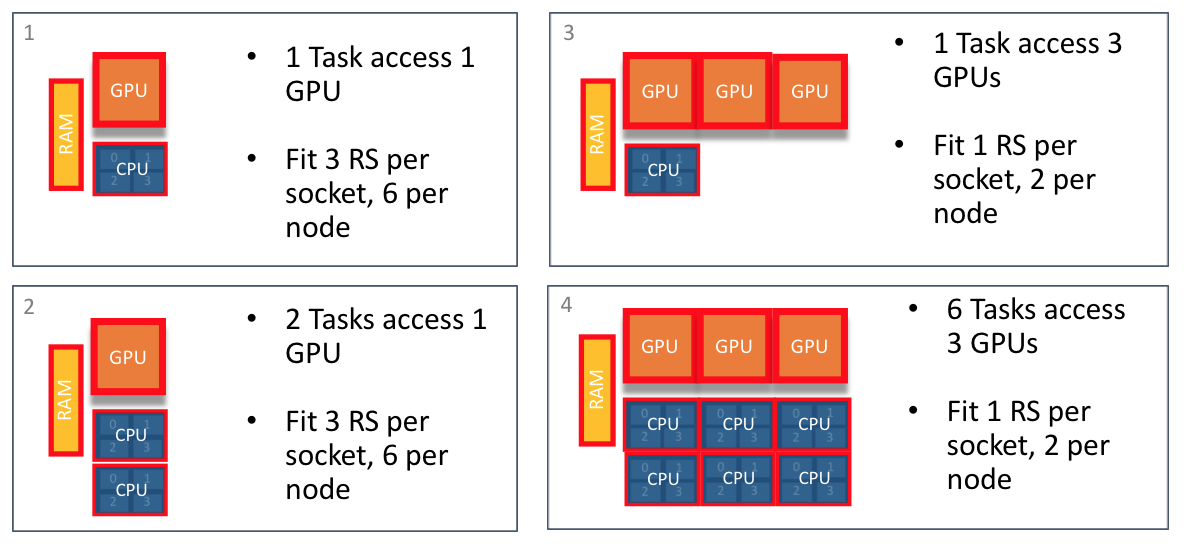

Subdividing a Node with Resource Sets

Resource sets provides the ability to subdivide node’s resources into smaller groups. The following examples show how a node can be subdivided and how many resource set could fit on a node.

Multiple Methods to Creating Resource Sets

Resource sets should be created to fit code requirements. The following examples show multiple ways to create resource sets that allow two MPI tasks access to a single GPU.

6 resource sets per node: 1 GPU, 2 cores per (Titan)

In this case, CPUs can only see single assigned GPU.

2 resource sets per node: 3 GPUs and 6 cores per socket

In this case, all 6 CPUs can see 3 GPUs. Code must manage CPU -> GPU communication. CPUs on socket0 can not access GPUs or Memory on socket1.

Single resource set per node: 6 GPUs, 12 cores

In this case, all 12 CPUs can see all node’s 6 GPUs. Code must manage CPU to GPU communication. CPUs on socket0 can access GPUs and Memory on socket1. Code must manage cross socket communication.

Designing a Resource Set

Resource sets allow each jsrun to control how the node appears to a code. This method is unique to jsrun, and requires thinking of each job launch differently than aprun or mpirun. While the method is unique, the method is not complicated and can be reasoned in a few basic steps.

The first step to creating resource sets is understanding how a code would like the node to appear. For example, the number of tasks/threads per GPU. Once this is understood, the next step is to simply calculate the number of resource sets that can fit on a node. From here, the number of needed nodes can be calculated and passed to the batch job request.

The basic steps to creating resource sets:

- Understand how your code expects to interact with the system.

How many tasks/threads per GPU?

Does each task expect to see a single GPU? Do multiple tasks expect to share a GPU? Is the code written to internally manage task to GPU workload based on the number of available cores and GPUs?

- Create resource sets containing the needed GPU to task binding

Based on how your code expects to interact with the system, you can create resource sets containing the needed GPU and core resources. If a code expects to utilize one GPU per task, a resource set would contain one core and one GPU. If a code expects to pass work to a single GPU from two tasks, a resource set would contain two cores and one GPU.

- Decide on the number of resource sets needed

Once you understand tasks, threads, and GPUs in a resource set, you simply need to decide the number of resource sets needed.

As on any system, it is useful to keep in mind the hardware underneath every execution. This is particularly true when laying out resource sets.

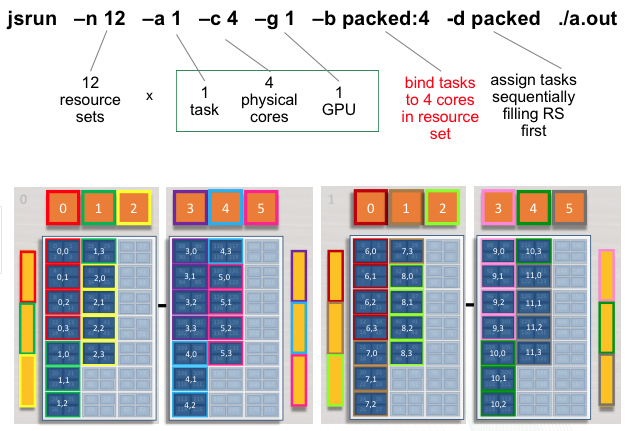

Launching a Job with jsrun

jsrun Format

jsrun [ -n #resource sets ] [tasks, threads, and GPUs within each resource set] program [ program args ]

Common jsrun Options

Below are common jsrun options. More flags and details can be found in the jsrun man page. The defaults listed in the table below are the OLCF defaults and take precedence over those mentioned in the man page.

Flags |

Description |

Default Value |

|

|---|---|---|---|

Long |

Short |

||

|

|

Number of resource sets |

All available physical cores |

|

|

Number of MPI tasks (ranks) per resource set |

Not set by default, instead total tasks (-p) set |

|

|

Number of CPUs (cores) per resource set. |

1 |

|

|

Number of GPUs per resource set |

0 |

|

|

Binding of tasks within a resource set. Can be none, rs, or packed:# |

packed:1 |

|

|

Number of resource sets per host |

No default |

|

|

Latency Priority. Controls layout priorities. Can currently be cpu-cpu or gpu-cpu |

gpu-cpu,cpu-mem,cpu-cpu |

|

|

How tasks are started on resource sets |

packed |

It’s recommended to explicitly specify jsrun options and not rely on the

default values. This most often includes --nrs,--cpu_per_rs,

--gpu_per_rs, --tasks_per_rs, --bind, and --launch_distribution.

Jsrun Examples

The below examples were launched in the following 2 node interactive batch job:

summit> bsub -nnodes 2 -Pprj123 -W02:00 -Is $SHELL

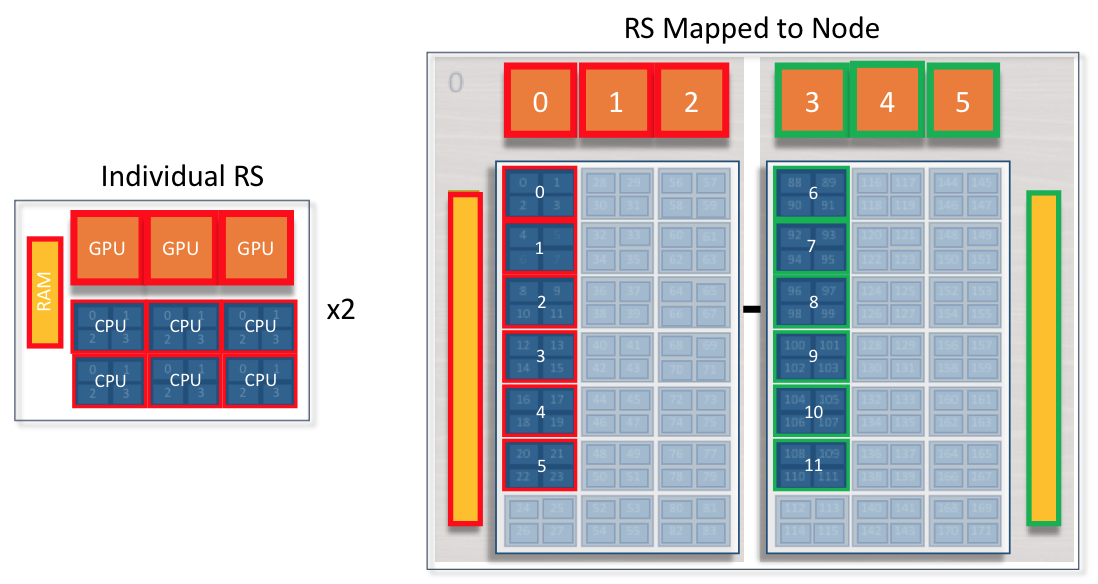

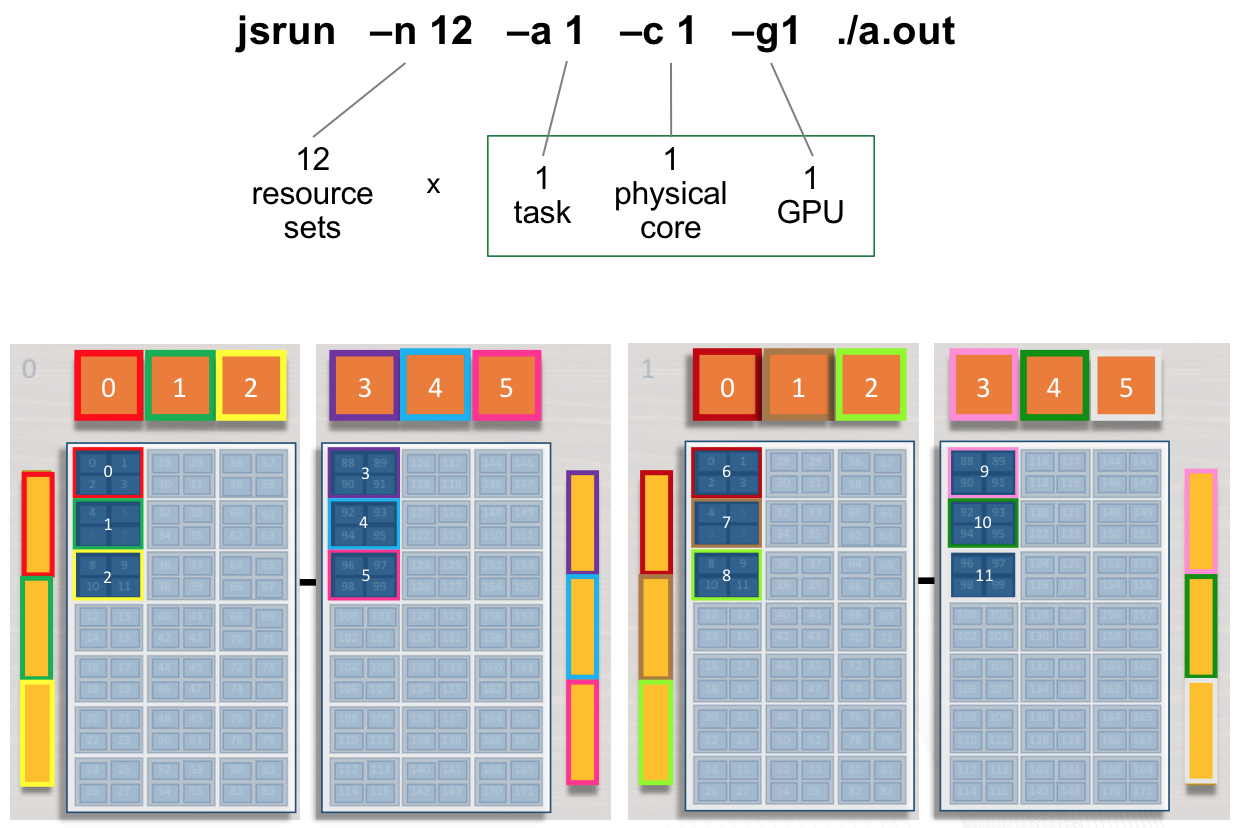

Single MPI Task, single GPU per RS

The following example will create 12 resource sets each with 1 MPI task and 1 GPU. Each MPI task will have access to a single GPU.

Rank 0 will have access to GPU 0 on the first node ( red resource set). Rank 1 will have access to GPU 1 on the first node ( green resource set). This pattern will continue until 12 resources sets have been created.

The following jsrun command will request 12 resource sets (-n12) 6

per node (-r6). Each resource set will contain 1 MPI task (-a1),

1 GPU (-g1), and 1 core (-c1).

summit> jsrun -n12 -r6 -a1 -g1 -c1 ./a.out

Rank: 0; NumRanks: 12; RankCore: 0; Hostname: h41n04; GPU: 0

Rank: 1; NumRanks: 12; RankCore: 4; Hostname: h41n04; GPU: 1

Rank: 2; NumRanks: 12; RankCore: 8; Hostname: h41n04; GPU: 2

Rank: 3; NumRanks: 12; RankCore: 88; Hostname: h41n04; GPU: 3

Rank: 4; NumRanks: 12; RankCore: 92; Hostname: h41n04; GPU: 4

Rank: 5; NumRanks: 12; RankCore: 96; Hostname: h41n04; GPU: 5

Rank: 6; NumRanks: 12; RankCore: 0; Hostname: h41n03; GPU: 0

Rank: 7; NumRanks: 12; RankCore: 4; Hostname: h41n03; GPU: 1

Rank: 8; NumRanks: 12; RankCore: 8; Hostname: h41n03; GPU: 2

Rank: 9; NumRanks: 12; RankCore: 88; Hostname: h41n03; GPU: 3

Rank: 10; NumRanks: 12; RankCore: 92; Hostname: h41n03; GPU: 4

Rank: 11; NumRanks: 12; RankCore: 96; Hostname: h41n03; GPU: 5

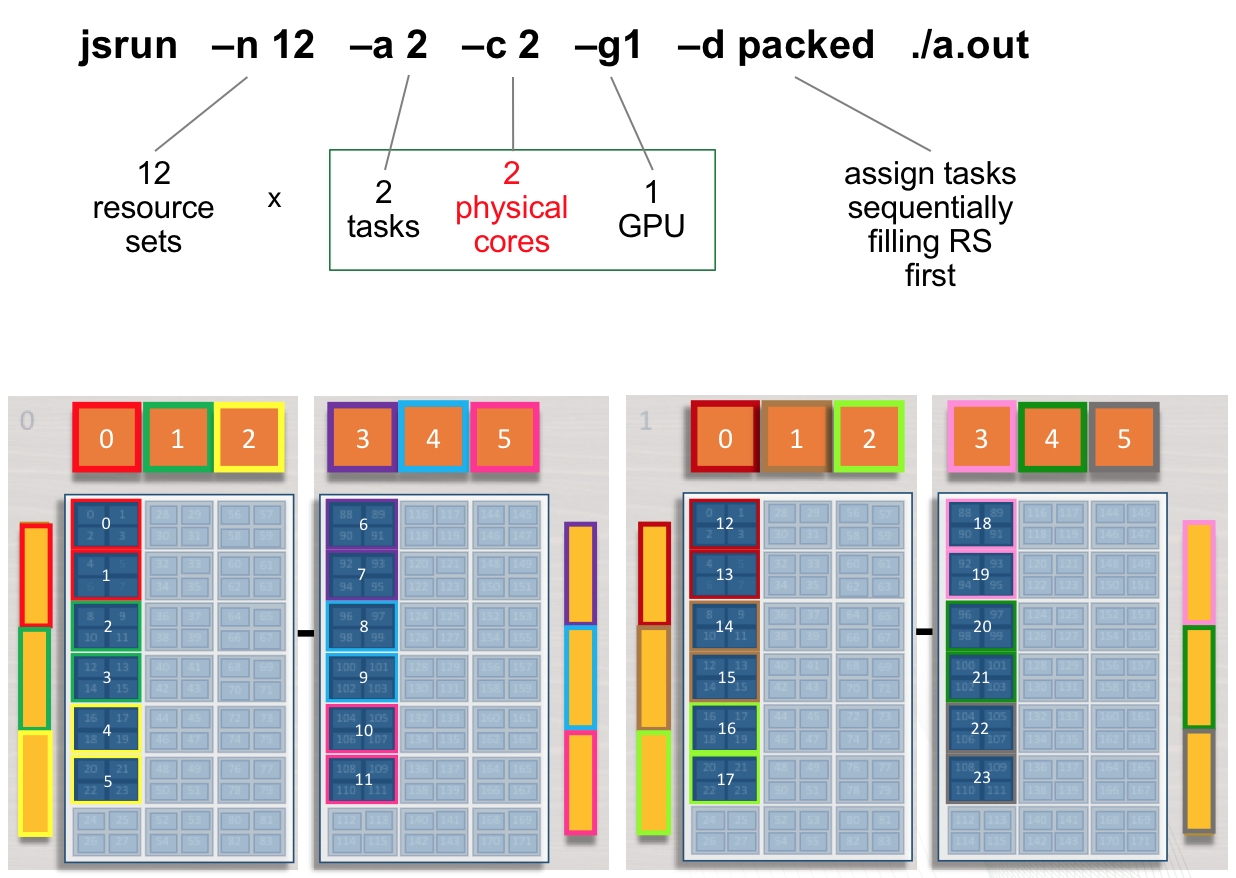

Multiple tasks, single GPU per RS

The following jsrun command will request 12 resource sets (-n12).

Each resource set will contain 2 MPI tasks (-a2), 1 GPU

(-g1), and 2 cores (-c2). 2 MPI tasks will have access to a

single GPU. Ranks 0 - 1 will have access to GPU 0 on the first node (

red resource set). Ranks 2 - 3 will have access to GPU 1 on the first

node ( green resource set). This pattern will continue until 12 resource

sets have been created.

Adding cores to the RS: The -c flag should be used to request

the needed cores for tasks and treads. The default -c core count is 1.

In the above example, if -c is not specified both tasks will run on a

single core.

summit> jsrun -n12 -a2 -g1 -c2 -dpacked ./a.out | sort

Rank: 0; NumRanks: 24; RankCore: 0; Hostname: a01n05; GPU: 0

Rank: 1; NumRanks: 24; RankCore: 4; Hostname: a01n05; GPU: 0

Rank: 2; NumRanks: 24; RankCore: 8; Hostname: a01n05; GPU: 1

Rank: 3; NumRanks: 24; RankCore: 12; Hostname: a01n05; GPU: 1

Rank: 4; NumRanks: 24; RankCore: 16; Hostname: a01n05; GPU: 2

Rank: 5; NumRanks: 24; RankCore: 20; Hostname: a01n05; GPU: 2

Rank: 6; NumRanks: 24; RankCore: 88; Hostname: a01n05; GPU: 3

Rank: 7; NumRanks: 24; RankCore: 92; Hostname: a01n05; GPU: 3

Rank: 8; NumRanks: 24; RankCore: 96; Hostname: a01n05; GPU: 4

Rank: 9; NumRanks: 24; RankCore: 100; Hostname: a01n05; GPU: 4

Rank: 10; NumRanks: 24; RankCore: 104; Hostname: a01n05; GPU: 5

Rank: 11; NumRanks: 24; RankCore: 108; Hostname: a01n05; GPU: 5

Rank: 12; NumRanks: 24; RankCore: 0; Hostname: a01n01; GPU: 0

Rank: 13; NumRanks: 24; RankCore: 4; Hostname: a01n01; GPU: 0

Rank: 14; NumRanks: 24; RankCore: 8; Hostname: a01n01; GPU: 1

Rank: 15; NumRanks: 24; RankCore: 12; Hostname: a01n01; GPU: 1

Rank: 16; NumRanks: 24; RankCore: 16; Hostname: a01n01; GPU: 2

Rank: 17; NumRanks: 24; RankCore: 20; Hostname: a01n01; GPU: 2

Rank: 18; NumRanks: 24; RankCore: 88; Hostname: a01n01; GPU: 3

Rank: 19; NumRanks: 24; RankCore: 92; Hostname: a01n01; GPU: 3

Rank: 20; NumRanks: 24; RankCore: 96; Hostname: a01n01; GPU: 4

Rank: 21; NumRanks: 24; RankCore: 100; Hostname: a01n01; GPU: 4

Rank: 22; NumRanks: 24; RankCore: 104; Hostname: a01n01; GPU: 5

Rank: 23; NumRanks: 24; RankCore: 108; Hostname: a01n01; GPU: 5

summit>

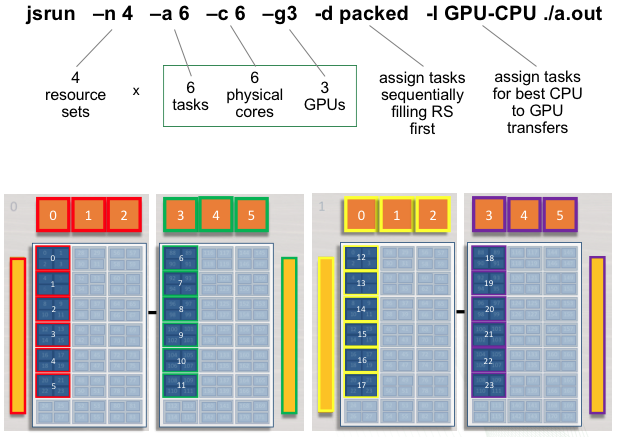

Multiple Task, Multiple GPU per RS

The following example will create 4 resource sets each with 6 tasks and

3 GPUs. Each set of 6 MPI tasks will have access to 3 GPUs. Ranks 0 - 5

will have access to GPUs 0 - 2 on the first socket of the first node (

red resource set). Ranks 6 - 11 will have access to GPUs 3 - 5 on the

second socket of the first node ( green resource set). This pattern will

continue until 4 resource sets have been created. The following jsrun

command will request 4 resource sets (-n4). Each resource set will

contain 6 MPI tasks (-a6), 3 GPUs (-g3), and 6 cores

(-c6).

summit> jsrun -n 4 -a 6 -c 6 -g 3 -d packed -l GPU-CPU ./a.out

Rank: 0; NumRanks: 24; RankCore: 0; Hostname: a33n06; GPU: 0, 1, 2

Rank: 1; NumRanks: 24; RankCore: 4; Hostname: a33n06; GPU: 0, 1, 2

Rank: 2; NumRanks: 24; RankCore: 8; Hostname: a33n06; GPU: 0, 1, 2

Rank: 3; NumRanks: 24; RankCore: 12; Hostname: a33n06; GPU: 0, 1, 2

Rank: 4; NumRanks: 24; RankCore: 16; Hostname: a33n06; GPU: 0, 1, 2

Rank: 5; NumRanks: 24; RankCore: 20; Hostname: a33n06; GPU: 0, 1, 2

Rank: 6; NumRanks: 24; RankCore: 88; Hostname: a33n06; GPU: 3, 4, 5

Rank: 7; NumRanks: 24; RankCore: 92; Hostname: a33n06; GPU: 3, 4, 5

Rank: 8; NumRanks: 24; RankCore: 96; Hostname: a33n06; GPU: 3, 4, 5

Rank: 9; NumRanks: 24; RankCore: 100; Hostname: a33n06; GPU: 3, 4, 5

Rank: 10; NumRanks: 24; RankCore: 104; Hostname: a33n06; GPU: 3, 4, 5

Rank: 11; NumRanks: 24; RankCore: 108; Hostname: a33n06; GPU: 3, 4, 5

Rank: 12; NumRanks: 24; RankCore: 0; Hostname: a33n05; GPU: 0, 1, 2

Rank: 13; NumRanks: 24; RankCore: 4; Hostname: a33n05; GPU: 0, 1, 2

Rank: 14; NumRanks: 24; RankCore: 8; Hostname: a33n05; GPU: 0, 1, 2

Rank: 15; NumRanks: 24; RankCore: 12; Hostname: a33n05; GPU: 0, 1, 2

Rank: 16; NumRanks: 24; RankCore: 16; Hostname: a33n05; GPU: 0, 1, 2

Rank: 17; NumRanks: 24; RankCore: 20; Hostname: a33n05; GPU: 0, 1, 2

Rank: 18; NumRanks: 24; RankCore: 88; Hostname: a33n05; GPU: 3, 4, 5

Rank: 19; NumRanks: 24; RankCore: 92; Hostname: a33n05; GPU: 3, 4, 5

Rank: 20; NumRanks: 24; RankCore: 96; Hostname: a33n05; GPU: 3, 4, 5

Rank: 21; NumRanks: 24; RankCore: 100; Hostname: a33n05; GPU: 3, 4, 5

Rank: 22; NumRanks: 24; RankCore: 104; Hostname: a33n05; GPU: 3, 4, 5

Rank: 23; NumRanks: 24; RankCore: 108; Hostname: a33n05; GPU: 3, 4, 5

summit>

Common Use Cases

The following table provides a quick reference for creating resource

sets of various common use cases. The -n flag can be altered to

specify the number of resource sets needed.

Resource Sets |

MPI Tasks |

Threads |

Physical Cores |

GPUs |

jsrun Command |

|---|---|---|---|---|---|

1 |

42 |

0 |

42 |

0 |

jsrun -n1 -a42 -c42 -g0 |

1 |

1 |

0 |

1 |

1 |

jsrun -n1 -a1 -c1 -g1 |

1 |

2 |

0 |

2 |

1 |

jsrun -n1 -a2 -c2 -g1 |

1 |

1 |

0 |

1 |

2 |

jsrun -n1 -a1 -c1 -g2 |

1 |

1 |

21 |

21 |

3 |

jsrun -n1 -a1 -c21 -g3 -bpacked:21 |

jsrun Tools

This section describes tools that users might find helpful to better understand the jsrun job launcher.

hello_jsrun

hello_jsrun is a “Hello World”-type program that users can run on Summit nodes to better understand how MPI ranks and OpenMP threads are mapped to the hardware. https://code.ornl.gov/t4p/Hello_jsrun A screencast showing how to use Hello_jsrun is also available: https://vimeo.com/261038849

Job Step Viewer

Job Step Viewer provides a graphical view of an application’s runtime layout on Summit.

It allows users to preview and quickly iterate with multiple jsrun options to

understand and optimize job launch.

For bug reports or suggestions, please email help@olcf.ornl.gov.

Usage

- Request a Summit allocation

bsub -W 10 -nnodes 2 -P $OLCF_PROJECT_ID -Is $SHELL

- Load the

job-step-viewermodule module load job-step-viewer

- Load the

- Test out a

jsrunline by itself, or provide an executable as normal jsrun -n12 -r6 -c7 -g1 -a1 -EOMP_NUM_THREADS=7 -brs

- Test out a

- Visit the provided URL

Note

Most Terminal applications have built-in shortcuts to directly open web addresses in the default browser.

MacOS Terminal.app: hold Command (⌘) and double-click on the URL

iTerm2: hold Command (⌘) and single-click on the URL

Limitations

(currently) Compiled with GCC toolchain only

Does not support MPMD-mode via ERF

OpenMP only supported with use of the

OMP_NUM_THREADSenvironment variable.

More Information

This section provides some of the most commonly used LSF commands as

well as some of the most useful options to those commands and

information on jsrun, Summit’s job launch command. Many commands

have much more information than can be easily presented here. More

information about these commands is available via the online manual

(i.e. man jsrun). Additional LSF information can be found on IBM’s

website.

Using Multithreading in a Job

Hardware Threads: Multiple Threads per Core

Each physical core on Summit contains 4 hardware threads. The SMT level can be set using LSF flags (the default is smt4):

SMT1

#BSUB -alloc_flags smt1

jsrun -n1 -c1 -a1 -bpacked:1 csh -c 'echo $OMP_PLACES’

0

SMT2

#BSUB -alloc_flags smt2

jsrun -n1 -c1 -a1 -bpacked:1 csh -c 'echo $OMP_PLACES’

{0:2}

SMT4

#BSUB -alloc_flags smt4

jsrun -n1 -c1 -a1 -bpacked:1 csh -c 'echo $OMP_PLACES’

{0:4}

Controlling Number of Threads for Tasks

In addition to specifying the SMT level, you can also control the

number of threads per MPI task by exporting the OMP_NUM_THREADS

environment variable. If you don’t export it yourself, Jsrun will

automatically set the number of threads based on the number of cores

requested (-c) and the binding (-b) option. It is better to be

explicit and set the OMP_NUM_THREADS value yourself rather than

relying on Jsrun constructing it for you. Especially when you are

using Job Step Viewer which relies on the presence of that

environment variable to give you visual thread assignment information.

In the below example, you could also do export OMP_NUM_THREADS=16 in your

job script instead of passing it as a -E flag to jsrun. The below example

starts 1 resource set with 2 tasks and 8 cores, 4 cores bound to each task,

16 threads for each task. We can set 16 threads since there are 4 cores

per task and the default is smt4 for each core (4 * 4 = 16 threads).

jsrun -n1 -a2 -c8 -g1 -bpacked:4 -dpacked -EOMP_NUM_THREADS=16 csh -c 'echo $OMP_NUM_THREADS $OMP_PLACES'

16 0:4,4:4,8:4,12:4

16 16:4,20:4,24:4,28:4

Be careful with assigning threads to tasks, as you might end up oversubscribing your cores. For example

jsrun -n1 -a2 -c8 -g1 -bpacked:4 -dpacked -EOMP_NUM_THREADS=32 csh -c 'echo $OMP_NUM_THREADS $OMP_PLACES'

Warning: OMP_NUM_THREADS=32 is greater than available PU's

Warning: OMP_NUM_THREADS=32 is greater than available PU's

Warning: OMP_NUM_THREADS=32 is greater than available PU's

Warning: OMP_NUM_THREADS=32 is greater than available PU's

32 16:4,20:4,24:4,28:4

32 0:4,4:4,8:4,12:4

You can use hello_jsrun or Job Step Viewer to see how the cores are being oversubscribed.

Because of how jsrun sets up OMP_NUM_THREADS based on -c and

-b options if you don’t specify the environment variable yourself,

you can accidentally end up oversubscribing your cores. For example

jsrun -n1 -a2 -c8 -g1 -brs -dpacked csh -c 'echo $OMP_NUM_THREADS $OMP_PLACES'

Warning: more than 1 task/rank assigned to a core

Warning: more than 1 task/rank assigned to a core

32 0:4,4:4,8:4,12:4,16:4,20:4,24:4,28:4

32 0:4,4:4,8:4,12:4,16:4,20:4,24:4,28:4

Because jsrun sees 8 cores and the -brs flag, it assigns all 8 cores to

each of the 2 tasks in the resource set. Jsrun will set up OMP_NUM_THREADS

as 32 (8 cores with 4 threads per core) which will apply to all the

tasks in the resource set. This means that each task sees that it can

have 32 threads (which means 64 threads for the 2 tasks combined) which

will oversubscribe the cores and may decrease efficiency as a result.

Example: Single Task, Single GPU, Multiple Threads per RS

The following example will create 12 resource sets each with 1 task, 4

threads, and 1 GPU. Each MPI task will start 4 threads and have access

to 1 GPU. Rank 0 will have access to GPU 0 and start 4 threads on the

first socket of the first node ( red resource set). Rank 2 will have

access to GPU 1 and start 4 threads on the second socket of the first

node ( green resource set). This pattern will continue until 12 resource

sets have been created. The following jsrun command will create 12

resource sets (-n12). Each resource set will contain 1 MPI task

(-a1), 1 GPU (-g1), and 4 cores (-c4). Notice that

more cores are requested than MPI tasks; the extra cores will be needed

to place threads. Without requesting additional cores, threads will be

placed on a single core.

Requesting Cores for Threads: The -c flag should be used to

request additional cores for thread placement. Without requesting

additional cores, threads will be placed on a single core.

Binding Cores to Tasks: The -b binding flag should be used to

bind cores to tasks. Without specifying binding, all threads will be

bound to the first core.

summit> setenv OMP_NUM_THREADS 4

summit> jsrun -n12 -a1 -c4 -g1 -b packed:4 -d packed ./a.out

Rank: 0; RankCore: 0; Thread: 0; ThreadCore: 0; Hostname: a33n06; OMP_NUM_PLACES: {0},{4},{8},{12}

Rank: 0; RankCore: 0; Thread: 1; ThreadCore: 4; Hostname: a33n06; OMP_NUM_PLACES: {0},{4},{8},{12}

Rank: 0; RankCore: 0; Thread: 2; ThreadCore: 8; Hostname: a33n06; OMP_NUM_PLACES: {0},{4},{8},{12}

Rank: 0; RankCore: 0; Thread: 3; ThreadCore: 12; Hostname: a33n06; OMP_NUM_PLACES: {0},{4},{8},{12}

Rank: 1; RankCore: 16; Thread: 0; ThreadCore: 16; Hostname: a33n06; OMP_NUM_PLACES: {16},{20},{24},{28}

Rank: 1; RankCore: 16; Thread: 1; ThreadCore: 20; Hostname: a33n06; OMP_NUM_PLACES: {16},{20},{24},{28}

Rank: 1; RankCore: 16; Thread: 2; ThreadCore: 24; Hostname: a33n06; OMP_NUM_PLACES: {16},{20},{24},{28}

Rank: 1; RankCore: 16; Thread: 3; ThreadCore: 28; Hostname: a33n06; OMP_NUM_PLACES: {16},{20},{24},{28}

...

Rank: 10; RankCore: 104; Thread: 0; ThreadCore: 104; Hostname: a33n05; OMP_NUM_PLACES: {104},{108},{112},{116}

Rank: 10; RankCore: 104; Thread: 1; ThreadCore: 108; Hostname: a33n05; OMP_NUM_PLACES: {104},{108},{112},{116}

Rank: 10; RankCore: 104; Thread: 2; ThreadCore: 112; Hostname: a33n05; OMP_NUM_PLACES: {104},{108},{112},{116}

Rank: 10; RankCore: 104; Thread: 3; ThreadCore: 116; Hostname: a33n05; OMP_NUM_PLACES: {104},{108},{112},{116}

Rank: 11; RankCore: 120; Thread: 0; ThreadCore: 120; Hostname: a33n05; OMP_NUM_PLACES: {120},{124},{128},{132}

Rank: 11; RankCore: 120; Thread: 1; ThreadCore: 124; Hostname: a33n05; OMP_NUM_PLACES: {120},{124},{128},{132}

Rank: 11; RankCore: 120; Thread: 2; ThreadCore: 128; Hostname: a33n05; OMP_NUM_PLACES: {120},{124},{128},{132}

Rank: 11; RankCore: 120; Thread: 3; ThreadCore: 132; Hostname: a33n05; OMP_NUM_PLACES: {120},{124},{128},{132}

summit>

Launching Multiple Jsruns

Jsrun provides the ability to launch multiple jsrun job launches within a

single batch job allocation. This can be done within a single node, or across

multiple nodes.

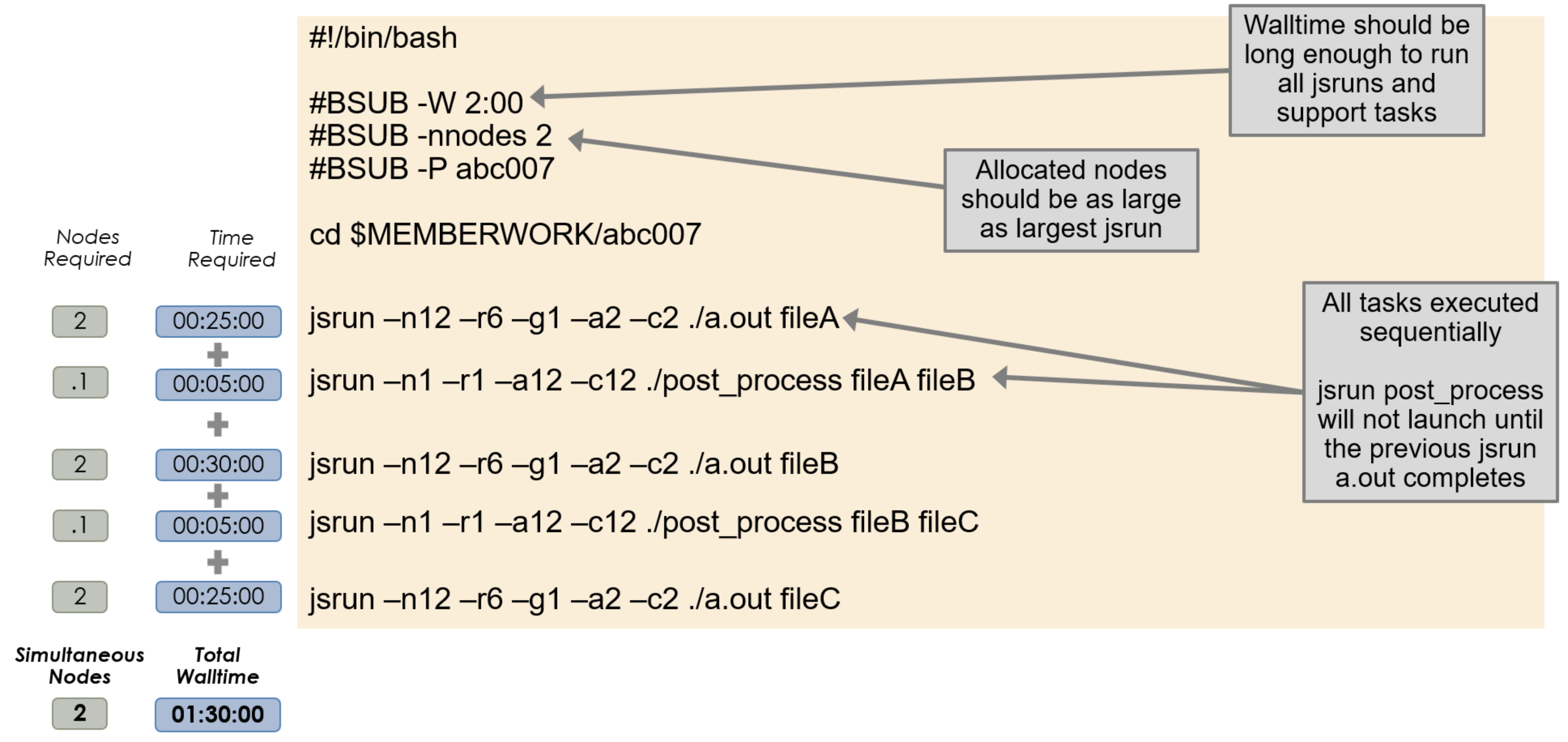

Sequential Job Steps

By default, multiple invocations of jsrun in a job script will execute

serially in order. In this configuration, jobs will launch one at a time and

the next one will not start until the previous is complete. The batch node

allocation is equal to the largest jsrun submitted, and the total walltime

must be equal to or greater then the sum of all jsruns issued.

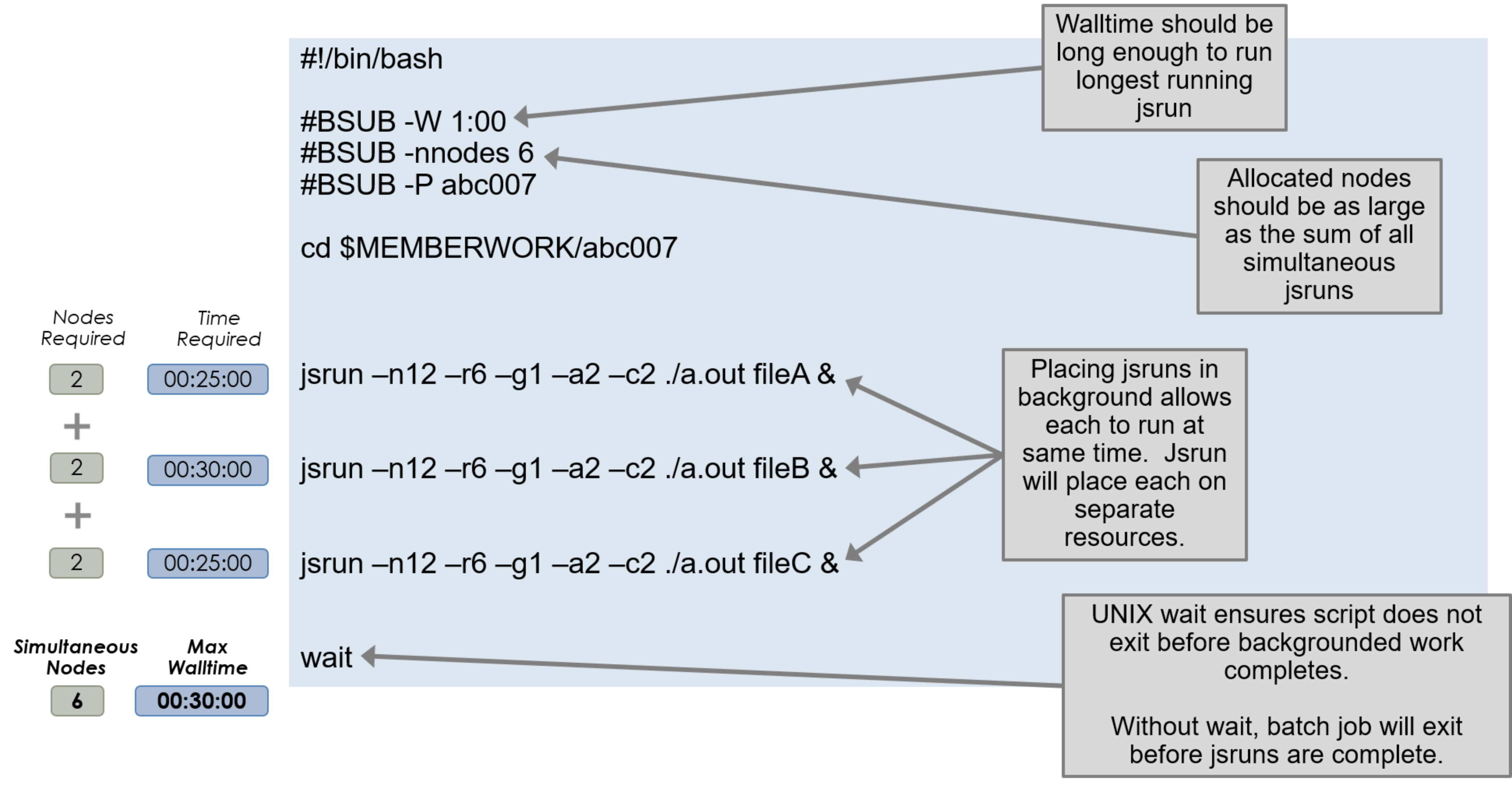

Simultaneous Job Steps

To execute multiple job steps concurrently, standard UNIX process

backgrounding can be used by adding a & at the end of the command. This

will return control to the job script and execute the next command immediately,

allowing multiple job launches to start at the same time. The jsruns will not

share core/gpu resources in this configuration. The batch node allocation is

equal to the sum of those of each jsrun, and the total walltime must be equal

to or greater than that of the longest running jsrun task.

A wait command must follow all backgrounded processes to prevent the job

from appearing completed and exiting prematurely.

The following example executes three backgrounded job steps and waits for them to finish before the job ends.

#!/bin/bash

#BSUB -P ABC123

#BSUB -W 3:00

#BSUB -nnodes 1

#BSUB -J RunSim123

#BSUB -o RunSim123.%J

#BSUB -e RunSim123.%J

cd $MEMBERWORK/abc123

jsrun <options> ./a.out &

jsrun <options> ./a.out &

jsrun <options> ./a.out &

wait

As submission scripts (and interactive sessions) are executed on batch nodes, the number of concurrent job steps is limited by the per-user process limit on a batch node, where a single user is only permitted 4096 simultaneous processes. This limit is per user on each batch node, not per batch job.

Each job step will create 3 processes, and JSM management may create up to ~23 processes. This creates an upper-limit of ~1350 simultaneous job steps.

If JSM or PMIX errors occur as the result of backgrounding many job steps, using the

--immediate option to jsrun may help, as shown in the following example.

#!/bin/bash

#BSUB -P ABC123

#BSUB -W 3:00

#BSUB -nnodes 1

#BSUB -J RunSim123

#BSUB -o RunSim123.%J

#BSUB -e RunSim123.%J

cd $MEMBERWORK/abc123

jsrun <options> --immediate ./a.out

jsrun <options> --immediate ./a.out

jsrun <options> --immediate ./a.out

Note

By default, jsrun --immediate does not produce stdout or

stderr. To capture stdout and/or stderr when using this option,

additionally include --stdio_stdout/-o and/or

--stdio_stderr/-k.

Using jslist

To view the status of multiple jobs launched sequentially or concurrently within a batch script, you can use jslist to see which are completed, running, or still queued. If you are using it outside of an interactive batch job, use the -c option to specify the CSM allocation ID number. The following example shows how to obtain the CSM allocation number for a non interactive job and then check its status.

$ bsub test.lsf

Job <26238> is submitted to default queue <batch>.

$ bjobs -l 26238 | grep CSM_ALLOCATION_ID

Sun Feb 16 19:01:18: CSM_ALLOCATION_ID=34435

$ jslist -c 34435

parent cpus gpus exit

ID ID nrs per RS per RS status status

===========================================================

1 0 12 4 1 0 Running

Explicit Resource Files (ERF)

Explicit Resource Files provide even more fine-granied control over how processes are mapped onto compute nodes. ERFs can define job step options such as rank placement/binding, SMT/CPU/GPU resources, compute hosts, among many others. If you find that the most common jsrun options do not readily provide the resource layout you need, we recommend considering ERF files.

A common source of confusion when using ERFs is how physical cores are

enumerated. See the tutorial on ERF CPU

Indexing for a

discussion of the cpu_index_using control and its interaction with various

SMT modes.

Note

Please note, a known bug is currently preventing execution of most ERF use cases. We are working to resolve the issue.

CUDA-Aware MPI

CUDA-aware MPI and GPUDirect are often used interchangeably, but they are distinct topics.

CUDA-aware MPI allows GPU buffers (e.g., GPU memory allocated with

cudaMalloc) to be used directly in MPI calls rather than requiring

data to be manually transferred to/from a CPU buffer (e.g., using

cudaMemcpy) before/after passing data in MPI calls. By itself,

CUDA-aware MPI does not specify whether data is staged through

CPU memory or, for example, transferred directly between GPUs when

passing GPU buffers to MPI calls. That is where GPUDirect comes in.

GPUDirect is a technology that can be implemented on a system to enhance

CUDA-aware MPI by allowing data transfers directly between GPUs on the

same node (peer-to-peer) and/or directly between GPUs on different nodes

(with RDMA support) without the need to stage data through CPU memory.

On Summit, both peer-to-peer and RDMA support are implemented. To enable

CUDA-aware MPI in a job, use the following argument to jsrun:

jsrun --smpiargs="-gpu" ...

Not using the --smpiargs="-gpu" flag might result in confusing segmentation

faults. If you see a segmentation fault when trying to do GPU aware MPI, check to

see if you have the flag set correctly.

Monitoring Jobs

LSF provides several utilities with which you can monitor jobs. These include monitoring the queue, getting details about a particular job, viewing STDOUT/STDERR of running jobs, and more.

The most straightforward monitoring is with the bjobs command. This

command will show the current queue, including both pending and running

jobs. Running bjobs -l will provide much more detail about a job (or

group of jobs). For detailed output of a single job, specify the job id

after the -l. For example, for detailed output of job 12345, you can

run bjobs -l 12345 . Other options to bjobs are shown below. In

general, if the command is specified with -u all it will show

information for all users/all jobs. Without that option, it only shows

your jobs. Note that this is not an exhaustive list. See man bjobs

for more information.

Command |

Description |

|---|---|

|

Show your current jobs in the queue |

|

Show currently queued jobs for all users |

|

Shows currently-queued jobs for project ABC123 |

|

Don’t format output (might be useful if you’re using the output in a script) |

|

Show jobs in all states, including recently finished jobs |

|

Show long/detailed output |

|

Show long/detailed output for jobs 12345 |

|

Show details for recently completed jobs |

|

Show suspended jobs, including the reason(s) they’re suspended |

|

Show running jobs |

|

Show pending jobs |

|

Use “wide” formatting for output |

If you want to check the STDOUT/STDERR of a currently running job, you

can do so with the bpeek command. The command supports several

options:

Command |

Description |

|---|---|

|

Show STDOUT/STDERR for the job you’ve most recently submitted with the name jobname |

|

Show STDOUT/STDERR for job 12345 |

|

Used with other options. Makes |

The OLCF also provides jobstat, which adds dividers in the queue to

identify jobs as running, eligible, or blocked. Run without arguments,

jobstat provides a snapshot of the entire batch queue. Additional

information, including the number of jobs in each state, total nodes

available, and relative job priority are also included.

jobstat -u <username> restricts output to only the jobs of a