Frontier User Guide

System Overview

Frontier is an HPE Cray EX supercomputer located at the Oak Ridge Leadership Computing Facility. With a theoretical peak double-precision performance of approximately 2 exaflops (2 quintillion calculations per second), it is the fastest system in the world for a wide range of traditional computational science applications. The system has 77 Olympus rack HPE cabinets, each with 128 AMD compute nodes, and a total of 9,856 AMD compute nodes.

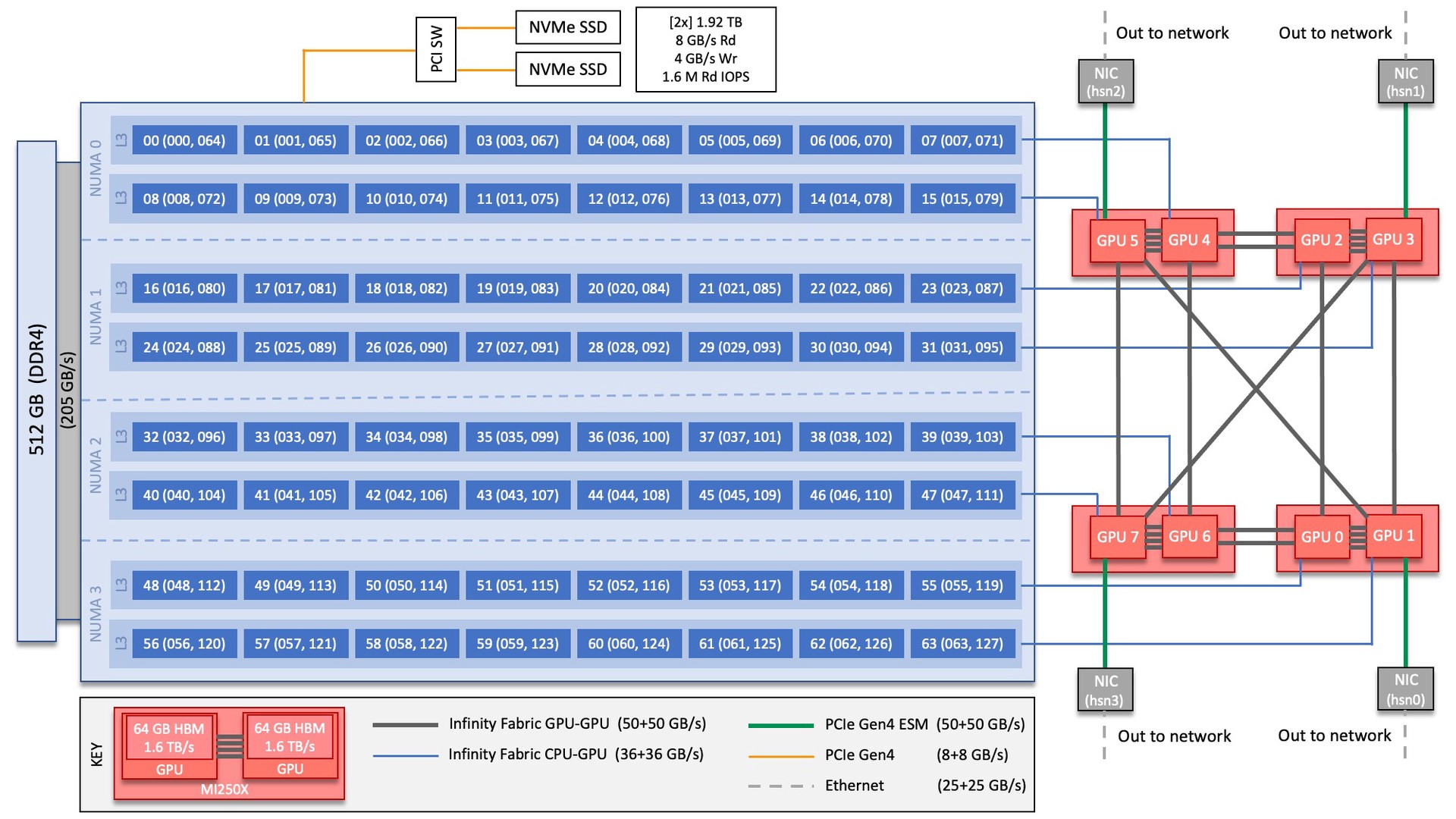

Frontier Compute Nodes

Each Frontier compute node consists of [1x] 64-core AMD “Optimized 3rd Gen EPYC” CPU (with 2 hardware threads per physical core) with access to 512 GB of DDR4 memory. Each node also contains [4x] AMD MI250X, each with 2 Graphics Compute Dies (GCDs) for a total of 8 GCDs per node. The programmer can think of the 8 GCDs as 8 separate GPUs, each having 64 GB of high-bandwidth memory (HBM2E). The CPU is connected to each GCD via Infinity Fabric CPU-GPU, allowing a peak host-to-device (H2D) and device-to-host (D2H) bandwidth of 36+36 GB/s. The 2 GCDs on the same MI250X are connected with Infinity Fabric GPU-GPU with a peak bandwidth of 200 GB/s. The GCDs on different MI250X are connected with Infinity Fabric GPU-GPU in the arrangement shown in the Frontier Node Diagram below, where the peak bandwidth ranges from 50-100 GB/s based on the number of Infinity Fabric connections between individual GCDs.

Note

TERMINOLOGY:

The 8 GCDs contained in the 4 MI250X will show as 8 separate GPUs according to Slurm, ROCR_VISIBLE_DEVICES, and the ROCr runtime, so from this point forward in the quick-start guide, we will simply refer to the GCDs as GPUs.

Note

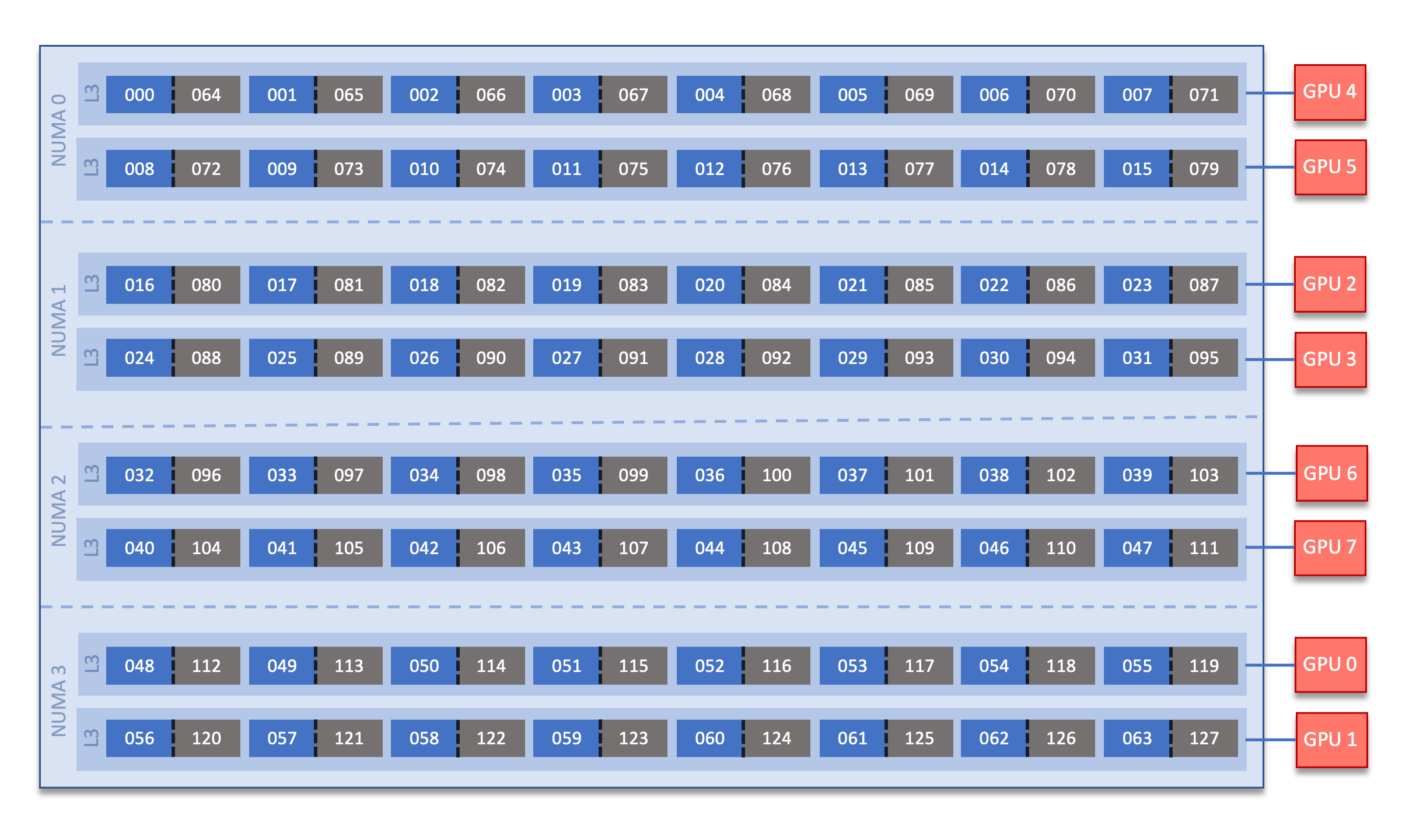

There are [4x] NUMA domains per node and [2x] L3 cache regions per NUMA for a total of [8x] L3 cache regions. The 8 GPUs are each associated with one of the L3 regions as follows:

NUMA 0:

hardware threads 000-007, 064-071 | GPU 4

hardware threads 008-015, 072-079 | GPU 5

NUMA 1:

hardware threads 016-023, 080-087 | GPU 2

hardware threads 024-031, 088-095 | GPU 3

NUMA 2:

hardware threads 032-039, 096-103 | GPU 6

hardware threads 040-047, 104-111 | GPU 7

NUMA 3:

hardware threads 048-055, 112-119 | GPU 0

hardware threads 056-063, 120-127 | GPU 1
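The mapping above can be encoded in a small lookup, which is handy when building CPU binding masks by hand. The following is a minimal sketch, not an OLCF-provided tool: the function names `gpu_to_l3_region` and `gpu_hw_threads` are hypothetical, and the L3 regions are simply numbered 0 through 7 in the order they appear in the list.

```shell
# Map a GPU index to the L3 cache region (0-7, in list order) it is
# associated with, following the NUMA/L3/GPU list above.
gpu_to_l3_region() {
    case "$1" in
        4) echo 0 ;; 5) echo 1 ;;   # NUMA 0
        2) echo 2 ;; 3) echo 3 ;;   # NUMA 1
        6) echo 4 ;; 7) echo 5 ;;   # NUMA 2
        0) echo 6 ;; 1) echo 7 ;;   # NUMA 3
        *) echo "unknown GPU index: $1" >&2; return 1 ;;
    esac
}

# Print the two hardware-thread ranges that share an L3 region with a GPU.
gpu_hw_threads() {
    region=$(gpu_to_l3_region "$1") || return 1
    first=$((region * 8))
    # The list pairs threads N..N+7 with N+64..N+71 in the same L3 region.
    printf '%03d-%03d,%03d-%03d\n' \
        "$first" "$((first + 7))" "$((first + 64))" "$((first + 71))"
}

for gpu in 0 1 2 3 4 5 6 7; do
    echo "GPU $gpu: hardware threads $(gpu_hw_threads "$gpu")"
done
```

Note that the GPU numbering does not follow the NUMA numbering (GPU 0 sits next to NUMA 3), which is why a lookup is used rather than a formula.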

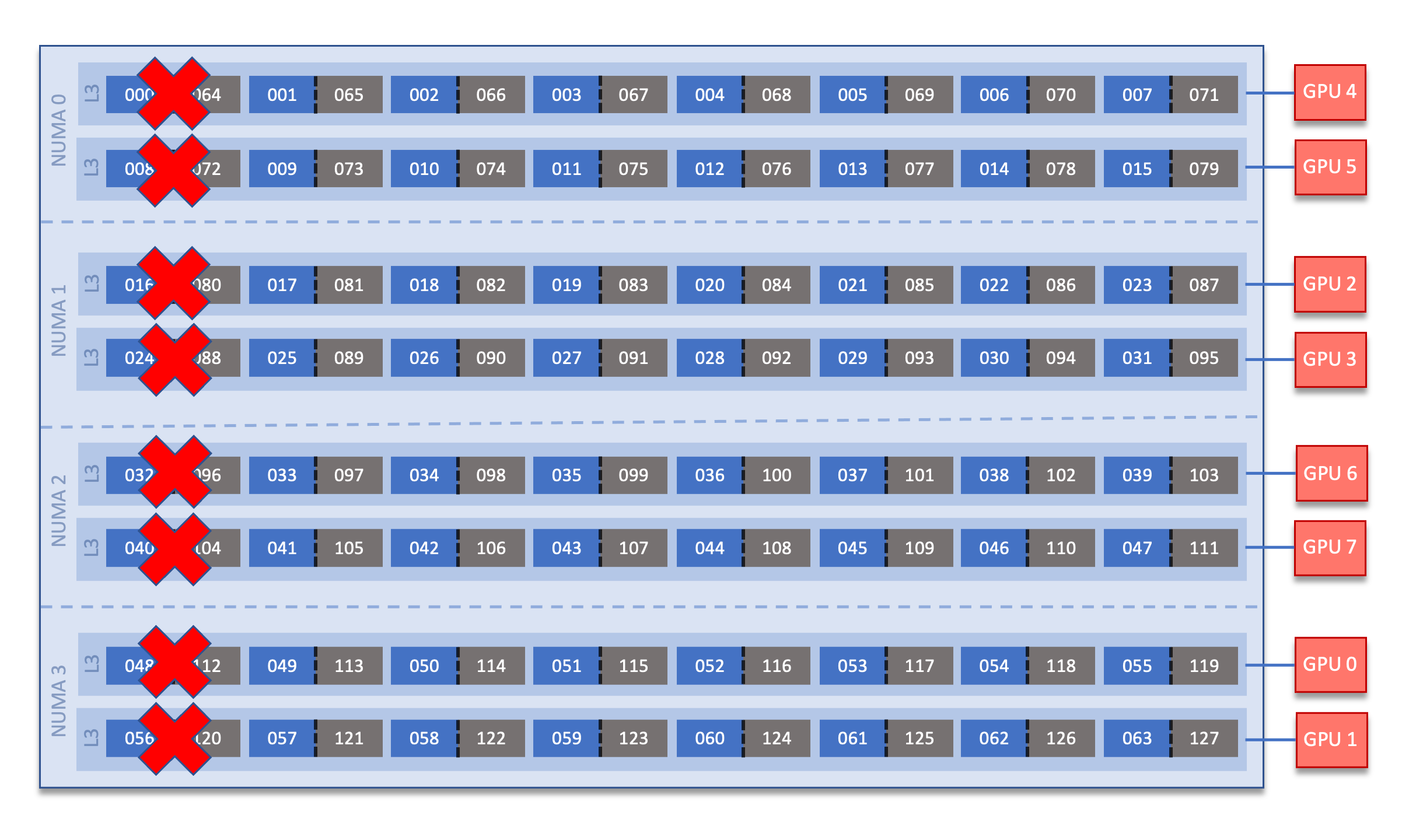

By default, Frontier reserves the first core in each L3 cache region. Frontier uses low-noise mode, which constrains all system processes to core 0. Low-noise mode cannot be disabled by users.

In addition, Frontier uses SLURM core specialization (-S 8 flag at job allocation time, e.g., sbatch) to reserve one core from each L3 cache region, leaving 56 allocatable cores. Set -S 0 at job allocation to override this setting.
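The core counts quoted above follow from simple arithmetic on the 64 physical cores per node. A minimal sketch, assuming a hypothetical helper name `allocatable_cores` and a hypothetical job script `job.sh`:

```shell
# Allocatable cores per node for a given core-specialization count
# (the value passed to -S at job allocation).
allocatable_cores() {
    total_cores=64   # physical cores per Frontier compute node
    echo $((total_cores - $1))
}

echo "default  (-S 8): $(allocatable_cores 8) allocatable cores"
echo "override (-S 0): $(allocatable_cores 0) allocatable cores"

# At submission time the override would look like this
# (job.sh is a placeholder name):
#   sbatch -S 0 job.sh
```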

Node Types

On Frontier, there are two major types of nodes you will encounter: Login and Compute. While these are similar in terms of hardware (see: Frontier Compute Nodes), they differ considerably in their intended use.

| Node Type | Description |
|---|---|
| Login | When you connect to Frontier, you’re placed on a login node. This is the place to write/edit/compile your code, manage data, submit jobs, etc. You should never launch parallel jobs from a login node nor should you run threaded jobs on a login node. Login nodes are shared resources that are in use by many users simultaneously. |
| Compute | Most of the nodes on Frontier are compute nodes. These are where your parallel job executes. They’re accessed via the |

System Interconnect

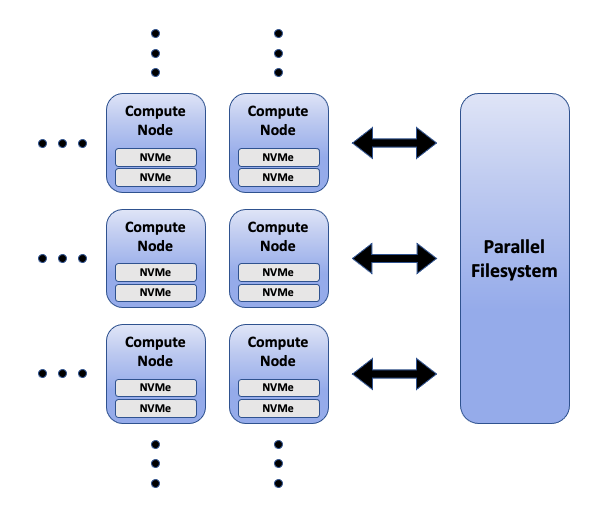

The Frontier nodes are connected with [4x] HPE Slingshot 200 Gbps (25 GB/s) NICs providing a node-injection bandwidth of 800 Gbps (100 GB/s).

File Systems

Frontier is connected to Orion, a parallel filesystem based on Lustre and HPE ClusterStor, with a 679 PB usable namespace (/lustre/orion/). In addition to Frontier, Orion is available on the OLCF’s data transfer nodes and on the Andes cluster.

Frontier also has access to the center-wide NFS-based filesystem (which provides user and project home areas).

Each compute node has two 1.92TB Non-Volatile Memory storage devices. See Data and Storage for more information.

Projects with a Frontier allocation also receive an archival storage area on Kronos. For more information on using Kronos, see the Kronos Nearline Archival Storage System section.

Operating System

Frontier is running Cray OS 2.4 based on SUSE Linux Enterprise Server (SLES) version 15.4.

GPUs

Each Frontier compute node contains 4 AMD MI250X. The AMD MI250X has a peak performance of 47.8 TFLOPS in vector-based double-precision for modeling and simulation. Each MI250X contains 2 GPUs, where each GPU has a peak performance of 23.9 TFLOPS (vector-based double-precision), 110 compute units, and 64 GB of high-bandwidth memory (HBM2E) which can be accessed at a peak of 1.6 TB/s. The 2 GPUs on an MI250X are connected with Infinity Fabric with a bandwidth of 200 GB/s (in each direction simultaneously).

Connecting

To connect to Frontier, ssh to frontier.olcf.ornl.gov. For example:

$ ssh <username>@frontier.olcf.ornl.gov

For more information on connecting to OLCF resources, see Connecting for the first time.

By default, connecting to Frontier will automatically place the user on a random login node. If you need to access a specific login node, ssh to that node after your initial connection to Frontier.

[<username>@login12.frontier ~]$ ssh <username>@login01.frontier.olcf.ornl.gov

Users can connect to any of the 17 Frontier login nodes by replacing login01 with their login node of choice.

Data and Storage

Transition from Alpine to Orion

Frontier mounts Orion, a parallel filesystem based on Lustre and HPE ClusterStor, with a 679 PB usable namespace (/lustre/orion/). In addition to Frontier, Orion is available on the OLCF’s data transfer nodes.

On Alpine, there was no user-exposed concept of file striping, the process of dividing a file between the storage elements of the filesystem. Orion uses a feature called Progressive File Layout (PFL) that changes the striping of files as they grow. Because of this, we ask users not to manually adjust the file striping. If you feel the default striping behavior of Orion is not meeting your needs, please contact help@olcf.ornl.gov.

As with Alpine, files older than 90 days are purged from Orion. Please plan your data management and lifecycle at OLCF before generating the data.

For more detailed information about center-wide file systems and data archiving available on Frontier, please refer to the pages on Data Storage and Transfers. The subsections below give a quick overview of NFS, Lustre, and archival storage spaces as well as the on-node NVMe “Burst Buffers” (SSDs).

LFS setstripe wrapper

The OLCF provides a wrapper for the lfs setstripe command that simplifies the process of striping files. The wrapper will enforce that certain settings are used to ensure that striping is done correctly. This will help to ensure good performance for users as well as prevent filesystem issues that could arise from incorrect striping practices. The wrapper is accessible via the lfs-wrapper module and will soon be added to the default environment on Frontier.

Orion is different than other Lustre filesystems that you may have used previously. To make effective use of Orion and to help ensure that the filesystem performs well for all users, it is important that you do the following:

Use the capacity OST pool tier (e.g., lfs setstripe -p capacity)

Stripe across no more than 450 OSTs (e.g., lfs setstripe -c <= 450)

When the module is active in your environment, the wrapper will enforce the above settings. The wrapper will also do the following:

If a user provides a stripe count of -1 (e.g., lfs setstripe -c -1) the wrapper will set the stripe count to the maximum allowed by the filesystem (currently 450)

If a user provides a stripe count of 0 (e.g., lfs setstripe -c 0) the wrapper will use the OLCF default striping command which has been optimized by the OLCF filesystem managers:

lfs setstripe -E 256K -L mdt -E 8M -c 1 -S 1M -p performance -z 64M -E 128G -c 1 -S 1M -z 16G -p capacity -E -1 -z 256G -c 8 -S 1M -p capacity
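The stripe-count rules above can be summarized as a small decision function. This is only an illustrative sketch of the described behavior, not the wrapper's actual implementation: the name `effective_stripe_count` is hypothetical, and rejecting counts above 450 is an assumption (the text says only that the limit is enforced, not how).

```shell
MAX_STRIPE_COUNT=450   # current filesystem maximum quoted above

# Echo the stripe count that would take effect for a requested -c value,
# or "default" when the OLCF default progressive layout applies.
effective_stripe_count() {
    requested=$1
    if [ "$requested" -eq -1 ]; then
        # -1 means "as wide as the filesystem allows"
        echo "$MAX_STRIPE_COUNT"
    elif [ "$requested" -eq 0 ]; then
        # 0 selects the OLCF default striping command
        echo "default"
    elif [ "$requested" -ge 1 ] && [ "$requested" -le "$MAX_STRIPE_COUNT" ]; then
        echo "$requested"
    else
        # Assumption: out-of-range requests are treated as errors here
        echo "stripe count $requested is outside 1..$MAX_STRIPE_COUNT" >&2
        return 1
    fi
}

for c in -1 0 8; do
    echo "-c $c -> $(effective_stripe_count "$c")"
done
```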

Please contact the OLCF User Assistance Center if you have any questions about using the wrapper or if you encounter any issues.

NFS Filesystem

| Area | Path | Type | Permissions | Quota | Backups | Purged | Retention | On Compute Nodes |
|---|---|---|---|---|---|---|---|---|
| User Home | | NFS | User set | 50 GB | Yes | No | 90 days | Yes |
| Project Home | | NFS | 770 | 50 GB | Yes | No | 90 days | Yes |

Note

Though the NFS filesystem’s User Home and Project Home areas are read/write from Frontier’s compute nodes, we strongly recommend that users launch and run jobs from the Lustre Orion parallel filesystem instead due to its larger storage capacity and superior performance. Please see below for Lustre Orion filesystem storage areas and paths.

Lustre Filesystem

| Area | Path | Type | Permissions | Quota | Backups | Purged | Retention | On Compute Nodes |
|---|---|---|---|---|---|---|---|---|
| Member Work | | Lustre HPE ClusterStor | 700 | 50 TB | No | 90 days | N/A | Yes |
| Project Work | | Lustre HPE ClusterStor | 770 | 50 TB | No | 90 days | N/A | Yes |
| World Work | | Lustre HPE ClusterStor | 775 | 50 TB | No | 90 days | N/A | Yes |

Kronos Archival Storage

Please note that Kronos is not mounted directly onto Frontier nodes. There are two main methods for accessing and moving data to/from Kronos: standard CLI utilities (scp, rsync, etc.) or Globus using the “OLCF Kronos” collection. For more information on using Kronos, see the Kronos Nearline Archival Storage System section.

| Area | Path | Type | Permissions | Quota | Backups | Purged | Retention | On Compute Nodes |
|---|---|---|---|---|---|---|---|---|
| Member Archive | | Nearline | 700 | 200 TB* | No | No | 90 days | No |
| Project Archive | | Nearline | 770 | 200 TB* | No | No | 90 days | No |
| World Archive | | Nearline | 775 | 200 TB* | No | No | 90 days | No |

Note

The three archival storage areas above share a single 200 TB per-project quota.

NVMe

Each compute node on Frontier has [2x] 1.92TB Non-Volatile Memory (NVMe) storage devices (SSDs), colloquially known as a “Burst Buffer” with a peak sequential performance of 5500 MB/s (read) and 2000 MB/s (write). The purpose of the Burst Buffer system is to bring improved I/O performance to appropriate workloads. Users are not required to use the NVMes. Data can also be written directly to the parallel filesystem.

The NVMes on Frontier are local to each node.

NVMe Usage

To use the NVMe, users must request access during job allocation using the -C nvme option to sbatch, salloc, or srun. Once the devices have been granted to a job, users can access them at /mnt/bb/<userid>. Users are responsible for moving data to/from the NVMe before/after their jobs. Here is a simple example script:

#!/bin/bash

#SBATCH -A <projid>

#SBATCH -J nvme_test

#SBATCH -o %x-%j.out

#SBATCH -t 00:05:00

#SBATCH -p batch

#SBATCH -N 1

#SBATCH -C nvme

date

# Change directory to user scratch space (Orion)

cd /lustre/orion/<projid>/scratch/<userid>

echo " "

echo "*****ORIGINAL FILE*****"

cat test.txt

echo "***********************"

# Move file from Orion to SSD

mv test.txt /mnt/bb/<userid>

# Edit file from compute node

srun -n1 hostname >> /mnt/bb/<userid>/test.txt

# Move file from SSD back to Orion

mv /mnt/bb/<userid>/test.txt .

echo " "

echo "*****UPDATED FILE******"

cat test.txt

echo "***********************"

And here is the output from the script:

$ cat nvme_test-<jobid>.out

*****ORIGINAL FILE*****

This is my file. There are many like it but this one is mine.

***********************

*****UPDATED FILE******

This is my file. There are many like it but this one is mine.

frontier0123

***********************

Using Globus to Move Data to and from Orion

Note

After January 8, the Globus v4 collections will no longer be supported. Please use the OLCF Kronos and OLCF DTN (Globus 5) collections.

The following example is intended to help users move data to and from the Orion filesystem.

Below is a summary of the steps for data transfer using Globus:

1. Log in to globus.org using your Globus ID and password. If you do not have a Globus ID, set one up here: Generate a globusID.

2. Once you are logged in, Globus will open the “File Manager” page. Click the left side “Collection” text field in the File Manager and type “OLCF DTN (Globus 5)”.

3. When prompted, authenticate into the OLCF DTN (Globus 5) collection using your OLCF username and PIN followed by your RSA passcode.

4. Click in the left side “Path” box in the File Manager and enter the path to your data on Orion. For example, `/lustre/orion/stf007/proj-shared/my_orion_data`. You should see a list of your files and folders under the left “Path” box.

5. Click on all files or folders that you want to transfer in the list. This will highlight them.

6. Click on the right side “Collection” box in the File Manager and type the name of a second collection at OLCF or at another institution. You can transfer data between different paths on the Orion filesystem with this method too; just use the OLCF DTN (Globus 5) collection again in the right side “Collection” box.

7. Click in the right side “Path” box and enter the path where you want to put your data on the second collection’s filesystem.

8. Click the left “Start” button.

9. Click on “Activity” in the left blue menu bar to monitor your transfer. Globus will send you an email when the transfer is complete.

Globus Warnings:

Globus transfers do not preserve file permissions. Arriving files will have (rw-r--r--) permissions, meaning arriving files will have user read and write permissions and group and world read permissions. Note that the arriving files will not have any execute permissions, so you will need to use chmod to reset execute permissions before running a Globus-transferred executable.

Globus will overwrite files at the destination with identically named source files. This is done without warning.

Globus limits active transfers to 8 across all users and 3 per user, so it is better to move a large amount of data in each transfer than to spread small amounts of data across many transfers.

If a folder contains a mix of files that includes thousands of small files (less than 1 MB each), it is better to tar the small files before transferring. Larger files can be transferred as they are; Globus will handle them.
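Two of the warnings above translate directly into shell one-liners: bundling small files before a transfer and restoring the execute bit afterwards. The following is a minimal sketch using standard find, tar, and chmod; the helper names `bundle_small_files` and `restore_exec` and all paths are hypothetical, and the archive should be written outside the source directory so it is not picked up by find.

```shell
# Bundle every regular file smaller than 1 MiB under <source_dir> into a
# single tar archive. Usage: bundle_small_files <source_dir> <archive.tar>
bundle_small_files() {
    src=$1
    archive=$2
    # -size -1048576c selects files strictly smaller than 1048576 bytes;
    # -print0 / --null keep file names with spaces intact.
    (cd "$src" && find . -type f -size -1048576c -print0 \
        | tar --null -T - -cf -) > "$archive"
}

# Restore the owner's execute bit on a file that arrived via Globus
# with rw-r--r-- permissions.
restore_exec() {
    chmod u+x "$1"
}

# Example with placeholder paths:
#   bundle_small_files ./my_orion_data /tmp/small_files.tar
#   restore_exec ./my_app
```

Only the small files go into the archive; the larger files stay in place and can be selected for transfer as usual.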

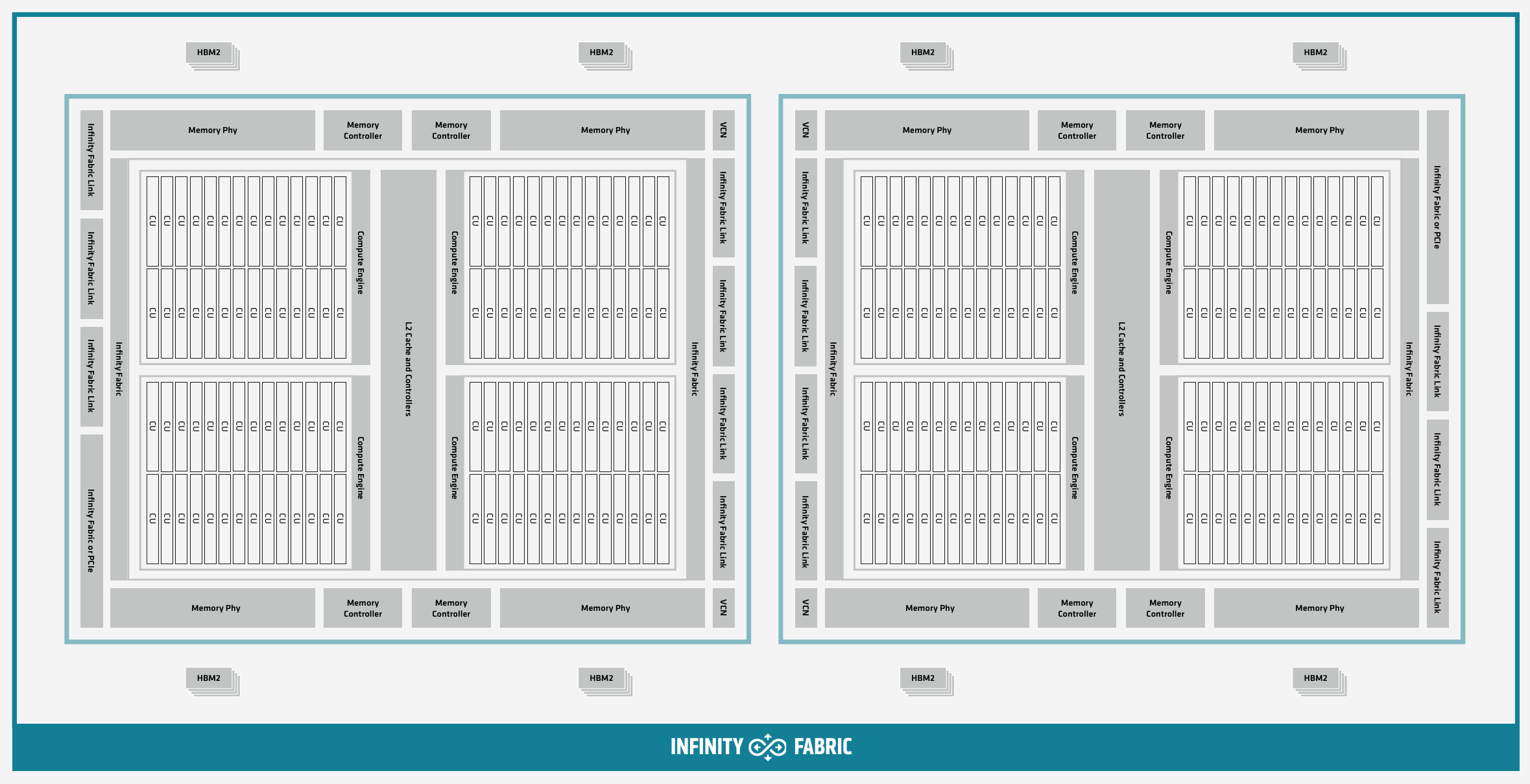

AMD GPUs

The AMD Instinct MI200 is built on advanced packaging technologies enabling two Graphic Compute Dies (GCDs) to be integrated into a single package in the Open Compute Project (OCP) Accelerator Module (OAM) in the MI250 and MI250X products. Each GCD is built on the AMD CDNA 2 architecture. A single Frontier node contains 4 MI250X OAMs for a total of 8 GCDs.

Note

The Slurm workload manager and the ROCr runtime treat each GCD as a separate GPU, and visibility can be controlled using the ROCR_VISIBLE_DEVICES environment variable. Therefore, from this point on, the Frontier guide simply refers to a GCD as a GPU.

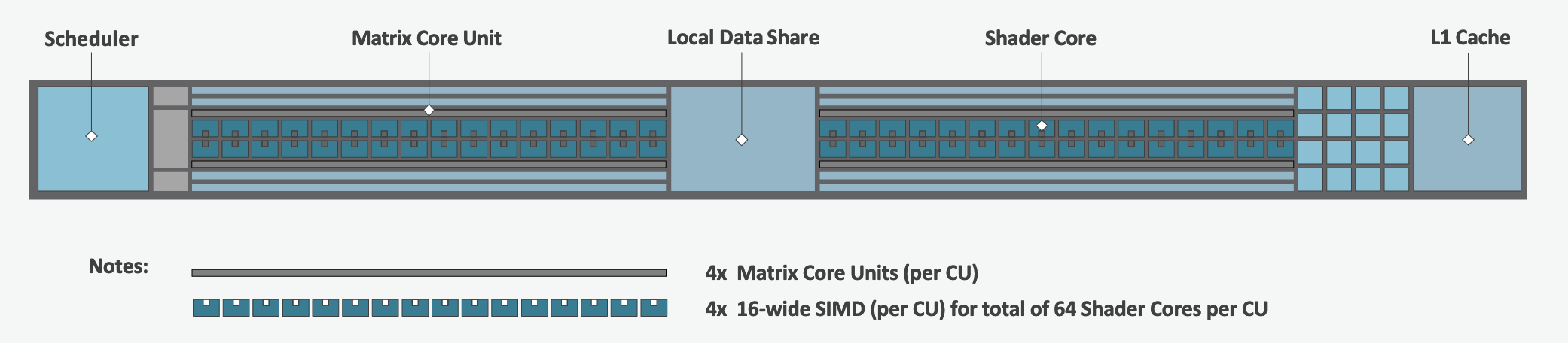

Each GPU contains 110 Compute Units (CUs) grouped in 4 Compute Engines (CEs). Physically, each GPU contains 112 CUs, but two are disabled. A command processor in each GPU receives API commands and transforms them into compute tasks. Compute tasks are managed by the 4 compute engines, which dispatch wavefronts to compute units. All wavefronts from a single workgroup are assigned to the same CU. In CUDA terminology, workgroups are “blocks”, wavefronts are “warps”, and work-items are “threads”. The terms are often used interchangeably.

The 110 CUs in each GPU deliver peak performance of 23.9 TFLOPS in double precision, or 47.9 TFLOPS if using the specialized Matrix cores. Also, each GPU contains 64 GB of high-bandwidth memory (HBM2E) accessible at a peak bandwidth of 1.6 TB/s. The 2 GPUs in an MI250X are connected with [4x] GPU-to-GPU Infinity Fabric links providing 200+200 GB/s of bandwidth. (Consult the diagram in the Frontier Compute Nodes section for information on how the accelerators are connected to each other, to the CPU, and to the network.)

Note

The X+X GB/s notation describes bidirectional bandwidth, meaning X GB/s in each direction.

AMD vs NVIDIA Terminology

| AMD | NVIDIA |
|---|---|
| Work-items or Threads | Threads |
| Workgroup | Block |
| Wavefront | Warp |
| Grid | Grid |

We will be using these terms interchangeably as they refer to the same concepts in GPU programming, with the exception that we will only be using “wavefront” (which refers to a unit of 64 threads) instead of “warp” (which refers to a unit of 32 threads) as they mean different things.

Blocks (workgroups), Threads (work items), Grids, Wavefronts

When kernels are launched on a GPU, a “grid” of thread blocks is created, where the number of thread blocks in the grid and the number of threads within each block are defined by the programmer. The number of blocks in the grid (grid size) and the number of threads within each block (block size) can be specified in one, two, or three dimensions during the kernel launch. Each thread can be identified with a unique id within the kernel, indexed along the X, Y, and Z dimensions.

Number of blocks that can be specified along each dimension in a grid: (2147483647, 65536, 65536)

Max number of threads that can be specified along each dimension in a block: (1024, 1024, 1024)

However, the total number of threads in a block has an upper limit of 1024 [i.e., (size of x dimension * size of y dimension * size of z dimension) cannot exceed 1024].

And the total number of threads in a kernel launch has an upper limit of 2147483647.
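The limits listed above can be checked before a kernel launch is ever compiled. The following is a minimal sketch of such a check using only shell integer arithmetic; the function name `check_launch` is hypothetical, and the constants are copied from the limits above rather than queried from a device.

```shell
MAX_GRID_X=2147483647
MAX_GRID_YZ=65536
MAX_BLOCK_DIM=1024
MAX_BLOCK_THREADS=1024
MAX_TOTAL_THREADS=2147483647

# Usage: check_launch <grid_x> <grid_y> <grid_z> <block_x> <block_y> <block_z>
# Returns 0 when the configuration respects every limit listed above.
check_launch() {
    gx=$1; gy=$2; gz=$3; bx=$4; by=$5; bz=$6

    # Every dimension must be at least 1.
    for d in "$gx" "$gy" "$gz" "$bx" "$by" "$bz"; do
        [ "$d" -ge 1 ] || return 1
    done

    # Per-dimension limits for the grid and the block.
    [ "$gx" -le "$MAX_GRID_X" ] && [ "$gy" -le "$MAX_GRID_YZ" ] \
        && [ "$gz" -le "$MAX_GRID_YZ" ] || return 1
    [ "$bx" -le "$MAX_BLOCK_DIM" ] && [ "$by" -le "$MAX_BLOCK_DIM" ] \
        && [ "$bz" -le "$MAX_BLOCK_DIM" ] || return 1

    # The product of the block dimensions is capped at 1024 threads.
    block_threads=$((bx * by * bz))
    [ "$block_threads" -le "$MAX_BLOCK_THREADS" ] || return 1

    # Total threads = blocks * threads per block. Compare by division so
    # the product never has to be formed (avoids integer overflow).
    blocks=$((gx * gy * gz))
    [ "$blocks" -le $((MAX_TOTAL_THREADS / block_threads)) ] || return 1

    return 0
}

check_launch 1024 1 1 256 1 1 && echo "1024 blocks of 256 threads: ok"
check_launch 1 1 1 32 32 2 || echo "32x32x2 block: too many threads per block"
```

The second call fails even though each block dimension is within 1024, because the product 32 * 32 * 2 = 2048 exceeds the per-block thread limit.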

Each block (or workgroup) of threads is assigned to a single Compute Unit, i.e., a single block won’t be split across multiple CUs. The threads in a block are scheduled in units of 64 threads called wavefronts (similar to warps in CUDA, but warps only have 32 threads instead of 64). When launching a kernel, up to 64KB of block level shared memory called the Local Data Store (LDS) can be statically or dynamically allocated. This shared memory between the threads in a block allows the threads to access block local data with much lower latency compared to using the HBM since the data is in the compute unit itself.
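Because threads are scheduled in wavefronts of 64, the number of wavefronts a block occupies is the block size rounded up to the next multiple of 64. A minimal sketch of that arithmetic, with the hypothetical helper name `wavefronts_per_block`:

```shell
WAVEFRONT_SIZE=64   # threads per wavefront on the MI250X

# Number of wavefronts needed to hold a block of the given thread count
# (integer ceiling division).
wavefronts_per_block() {
    echo $(( ($1 + WAVEFRONT_SIZE - 1) / WAVEFRONT_SIZE ))
}

for threads in 64 100 256 1024; do
    echo "$threads threads per block -> $(wavefronts_per_block "$threads") wavefront(s)"
done
```

A block size that is not a multiple of 64 (such as 100) still occupies whole wavefronts, so the last wavefront runs with some lanes idle.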

The Compute Unit

Each CU has 4 Matrix Core Units (the equivalent of NVIDIA’s Tensor core units) and 4 16-wide SIMD units. For a vector instruction that uses the SIMD units, each wavefront (which has 64 threads) is assigned to a single 16-wide SIMD unit such that the wavefront as a whole executes the instruction over 4 cycles, 16 threads per cycle. Since other wavefronts occupy the other three SIMD units at the same time, the total throughput still remains 1 instruction per cycle. Each CU maintains an instructions buffer for 8 wavefronts and also maintains 256 registers where each register is 64 4-byte wide entries.

HIP

The Heterogeneous Interface for Portability (HIP) is AMD’s dedicated GPU programming environment for designing high performance kernels on GPU hardware. HIP is a C++ runtime API and programming language that allows developers to create portable applications on different platforms, including the AMD MI250X. This means that developers can write their GPU applications once and, with minimal changes, run their code on any of those platforms. The API is very similar to CUDA, so if you’re already familiar with CUDA there is almost no additional work to learn HIP. See here for a series of tutorials on programming with HIP and also converting existing CUDA code to HIP with the hipify tools.

Things To Remember When Programming for AMD GPUs

The MI250X has different denormal handling for FP16 and BF16 datatypes, which is relevant for ML training. We recommend using BF16 over the FP16 datatype for ML models, as you are more likely to encounter denormal values with FP16 (which get flushed to zero, causing failure in convergence for some ML models). See more in Using reduced precision (FP16 and BF16 datatypes).

Memory can be automatically migrated to GPU from CPU on a page fault if XNACK operating mode is set. No need to explicitly migrate data or provide managed memory. This is useful if you’re migrating code from a programming model that relied on ‘unified’ or ‘managed’ memory. See more in Enabling GPU Page Migration. Information about how memory is accessed based on the allocator used and the XNACK mode can be found in Migration of Memory by Allocator and XNACK Mode.

HIP has two kinds of memory allocations, coarse grained and fine grained, with tradeoffs between performance and coherence. This is particularly relevant if you want to use the hardware FP atomic instructions. See more in Floating-Point (FP) Atomic Operations and Coarse/Fine Grained Memory Allocations.

FP32 atomicAdd operations on Local Data Store (i.e., block shared memory) can be slower than the equivalent FP64 operations. See more in Performance considerations for LDS FP atomicAdd().

See the Compiling section for information on compiling for AMD GPUs, and see the Tips and Tricks section for some detailed information to keep in mind to run more efficiently on AMD GPUs.

Programming Environment

Frontier users are provided with many pre-installed software packages and scientific libraries. To facilitate this, environment management tools are used to handle necessary changes to the shell.

Environment Modules (Lmod)

Environment modules are provided through Lmod, a Lua-based module system for dynamically altering shell environments. By managing changes to the shell’s environment variables (such as PATH, LD_LIBRARY_PATH, and PKG_CONFIG_PATH), Lmod allows you to alter the software available in your shell environment without the risk of creating package and version combinations that cannot coexist in a single environment.

General Usage

The interface to Lmod is provided by the module command:

| Command | Description |
|---|---|
| | Shows a terse list of the currently loaded modules |
| | Shows a table of the currently available modules |
| | Shows help information about |
| | Shows the environment changes made by the |
| | Searches all possible modules according to |
| | Loads the given |
| | Adds |
| | Removes |
| | Unloads all modules |
| | Resets loaded modules to system defaults |
| | Reloads all currently loaded modules |

Searching for Modules

Modules with dependencies are only available when the underlying dependencies, such as compiler families, are loaded. Thus, module avail will only display modules that are compatible with the current state of the environment. To search the entire hierarchy across all possible dependencies, the spider sub-command can be used as summarized in the following table.

| Command | Description |
|---|---|
| | Shows the entire possible graph of modules |
| | Searches for modules named |
| | Searches for a specific version of |
| | Searches for modulefiles containing |

Compilers

Cray, AMD, and GCC compilers are provided through modules on Frontier. The Cray and AMD compilers are both based on LLVM/Clang. There is also a system/OS version of GCC available in /usr/bin. The table below lists details about each of the module-provided compilers. Please see the following Compiling section for more detailed information on how to compile using these modules.

Cray Programming Environment and Compiler Wrappers

Cray provides PrgEnv-<compiler> modules (e.g., PrgEnv-cray) that load compatible components of a specific compiler toolchain. The components include the specified compiler as well as MPI, LibSci, and other libraries. Loading the PrgEnv-<compiler> modules also defines a set of compiler wrappers for that compiler toolchain that automatically add include paths and link in libraries for Cray software. Compiler wrappers are provided for C (cc), C++ (CC), and Fortran (ftn).

Note

Use the -craype-verbose flag to display the full include and link information used by the Cray compiler wrappers. This must be called on a file to see the full output (e.g., CC -craype-verbose test.cpp).

MPI

The MPI implementation available on Frontier is Cray’s MPICH, which is “GPU-aware” so GPU buffers can be passed directly to MPI calls.

Compiling

Compilers

Cray, AMD, and GCC compilers are provided through modules on Frontier. The Cray and AMD compilers are both based on LLVM/Clang. There is also a system/OS version of GCC available in /usr/bin. The table below lists details about each of the module-provided compilers.

Note

It is highly recommended to use the Cray compiler wrappers (cc, CC, and ftn) whenever possible. See the next section for more details.

| Vendor | Programming Environment | Compiler Module | Language | Compiler Wrapper | Compiler |
|---|---|---|---|---|---|
| Cray | | | C | | |
| | | | C++ | | |
| | | | Fortran | | |
| AMD | | | C | | |
| | | | C++ | | |
| | | | Fortran | | |
| GCC | | | C | | |
| | | | C++ | | |
| | | | Fortran | | |

Note

The gcc-native compiler module was introduced in the December 2023 release of the HPE/Cray Programming Environment (CrayPE) and replaces gcc.

gcc provides GCC installations that were packaged within CrayPE, while gcc-native provides GCC installations outside of CrayPE.

Cray Programming Environment and Compiler Wrappers

Cray provides PrgEnv-<compiler> modules (e.g., PrgEnv-cray) that load compatible components of a specific compiler toolchain. The components include the specified compiler as well as MPI, LibSci, and other libraries. Loading the PrgEnv-<compiler> modules also defines a set of compiler wrappers for that compiler toolchain that automatically add include paths and link in libraries for Cray software. Compiler wrappers are provided for C (cc), C++ (CC), and Fortran (ftn).

For example, to load the AMD programming environment, do:

module load PrgEnv-amd

This module will set up your programming environment with paths to software and libraries that are compatible with AMD host compilers.

When loading non-default versions of Cray-provided components, you must set export LD_LIBRARY_PATH=$CRAY_LD_LIBRARY_PATH:$LD_LIBRARY_PATH at runtime.

Additionally, please see Understanding the Compatibility of Compilers, ROCm, and Cray MPICH for information about loading a set of compatible Cray modules.

Note

Use the -craype-verbose flag to display the full include and link information used by the Cray compiler wrappers. This must be called on a file to see the full output (e.g., CC -craype-verbose test.cpp).

Exposing The ROCm Toolchain to your Programming Environment

If you need to add the tools and libraries related to ROCm, the framework for targeting AMD GPUs, to your path, you will need to use a version of ROCm that is compatible with your programming environment.

ROCm can be loaded with: module load rocm/X.Y.Z, or to load the default ROCm version, module load rocm.

Note

Both the CCE and ROCm compilers are Clang-based, so please be sure to use consistent (major) Clang versions when using them together. You can check which version of Clang is being used with CCE and ROCm by giving the --version flag to CC and amdclang, respectively.

Please see Understanding the Compatibility of Compilers, ROCm, and Cray MPICH for information about loading a compatible set of modules.

MPI

The MPI implementation available on Frontier is Cray’s MPICH, which is “GPU-aware” so GPU buffers can be passed directly to MPI calls.

| Implementation | Module | Compiler | Header Files & Linking |
|---|---|---|---|
| Cray MPICH | | | MPI header files and linking are built into the Cray compiler wrappers |
| | | | -L${MPICH_DIR}/lib -lmpi ${CRAY_XPMEM_POST_LINK_OPTS} -lxpmem ${PE_MPICH_GTL_DIR_amd_gfx90a} ${PE_MPICH_GTL_LIBS_amd_gfx90a} -I${MPICH_DIR}/include |

Note

hipcc requires the ROCm Toolchain. See Exposing The ROCm Toolchain to your Programming Environment.

GPU-Aware MPI

To use GPU-aware Cray MPICH with Frontier’s PrgEnv modules, users must set the following modules and environment variables:

module load craype-accel-amd-gfx90a

module load rocm

export MPICH_GPU_SUPPORT_ENABLED=1

Note

There are extra steps needed to enable GPU-aware MPI on Frontier, which depend on the compiler that is used (see 1. and 2. below).

1. Compiling with the Cray compiler wrappers, cc or CC

When using GPU-aware Cray MPICH with the Cray compiler wrappers, most of the needed libraries are automatically linked through the environment variables. However, the following header files and libraries must be included explicitly:

-I${ROCM_PATH}/include

-L${ROCM_PATH}/lib -lamdhip64

where the include path implies that #include <hip/hip_runtime.h> is included in the source file.

2. Compiling without the Cray compiler wrappers, e.g., hipcc

To use hipcc with GPU-aware Cray MPICH, the following flags are needed to set up the required header files and libraries.

-I${MPICH_DIR}/include

-L${MPICH_DIR}/lib -lmpi \

${CRAY_XPMEM_POST_LINK_OPTS} -lxpmem \

${PE_MPICH_GTL_DIR_amd_gfx90a} ${PE_MPICH_GTL_LIBS_amd_gfx90a}

HIPFLAGS = --offload-arch=gfx90a
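The flags above can be collected into two small helpers so they are easy to reuse in a build script. This is a sketch only: the function names `hip_mpi_cflags` and `hip_mpi_ldflags` and the file name `my_app.cpp` are hypothetical, and the environment variables are expected to be set by the Frontier modules (craype-accel-amd-gfx90a, rocm, and the PrgEnv module) before the functions are called.

```shell
# Compile-time flags: GPU architecture and MPI headers.
hip_mpi_cflags() {
    echo "--offload-arch=gfx90a -I${MPICH_DIR}/include"
}

# Link-time flags: MPI, XPMEM, and the GPU Transport Layer (GTL) library.
hip_mpi_ldflags() {
    echo "-L${MPICH_DIR}/lib -lmpi ${CRAY_XPMEM_POST_LINK_OPTS} -lxpmem ${PE_MPICH_GTL_DIR_amd_gfx90a} ${PE_MPICH_GTL_LIBS_amd_gfx90a}"
}

# Example, left commented out because it only works with the Frontier
# modules loaded (my_app.cpp is a placeholder):
#   hipcc $(hip_mpi_cflags) my_app.cpp $(hip_mpi_ldflags) -o my_app
```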

Understanding the Compatibility of Compilers, ROCm, and Cray MPICH

There are three primary sources of compatibility required to successfully build and run on Frontier:

Compatible Compiler & ROCm toolchain versions

Compatible ROCm & Cray MPICH versions

Compatibility with other CrayPE-provided software

Note

If using non-default versions of any cray-* module, you must prepend ${CRAY_LD_LIBRARY_PATH} (or the path to lib64 for your specific cray-* component) to your LD_LIBRARY_PATH at run time or your executable’s rpath at build time.

Compatible Compiler & ROCm toolchain versions

All compilers in the same HPE/Cray Programming Environment (CrayPE) release are generally ABI-compatible (e.g., code generated by CCE can be linked against code compiled by GCC).

However, the AMD and CCE compilers are both LLVM/Clang-based, and it is recommended to use the same major LLVM version when cross-compiling.

CCE’s module version indicates the base LLVM version, but for AMD, you must run amdclang --version.

For example, ROCm/5.3.0 is based on LLVM 15.0.0.

It is strongly discouraged to use ROCm/5.3.0 with CCE/16.0.1, which is based on LLVM 16.
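The major-version rule above is easy to check mechanically once both LLVM versions are known. A minimal sketch, assuming the two version strings have already been obtained (for example from the CCE module version and from the amdclang --version output); the helper names `llvm_major` and `same_llvm_major` are hypothetical.

```shell
# Extract the major component of a dotted version string (15.0.0 -> 15).
llvm_major() {
    echo "${1%%.*}"
}

# Succeed only when both versions share the same major LLVM version.
same_llvm_major() {
    [ "$(llvm_major "$1")" = "$(llvm_major "$2")" ]
}

# The pairing discouraged above: LLVM 15.0.0 (ROCm/5.3.0) with CCE/16.0.1.
if same_llvm_major 15.0.0 16.0.1; then
    echo "LLVM major versions match"
else
    echo "LLVM major versions differ: avoid mixing these toolchains"
fi
```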

The following table shows the recommended ROCm version for each CCE version, along with the CPE version:

| CCE | CPE | Recommended ROCm Version |
|---|---|---|
| 15.0.0 | 22.12 | 5.3.0 |
| 15.0.1 | 23.03 | 5.3.0 |
| 16.0.0 | 23.05 | 5.5.1 |
| 16.0.1 | 23.09 | 5.5.1 |
| 17.0.0 | 23.12 | 5.7.0 or 5.7.1 |
| 17.0.1 | 24.03 | 6.0.0 |
| 18.0.0 | 24.07 | 6.1.3 |
| 18.0.1 | 24.11 | 6.2.4 |
| 19.0.0 | 25.03 | 6.2.4 |
| 20.0.0 | 25.09 | 6.4.2 |
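For use in build scripts, the table above can be expressed as a simple lookup. This is a sketch that only transcribes the table; the function name `recommended_rocm` is hypothetical, and the table itself remains the authoritative source when versions change.

```shell
# Echo the recommended ROCm version for a given CCE version,
# transcribed from the table above.
recommended_rocm() {
    case "$1" in
        15.0.0|15.0.1) echo "5.3.0" ;;
        16.0.0|16.0.1) echo "5.5.1" ;;
        17.0.0)        echo "5.7.0 or 5.7.1" ;;
        17.0.1)        echo "6.0.0" ;;
        18.0.0)        echo "6.1.3" ;;
        18.0.1|19.0.0) echo "6.2.4" ;;
        20.0.0)        echo "6.4.2" ;;
        *) echo "no recommendation listed for CCE $1" >&2; return 1 ;;
    esac
}

echo "CCE 17.0.1 -> ROCm $(recommended_rocm 17.0.1)"
```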

Note

Recall that the CPE module is a meta-module that simply loads the correct version for each Cray-provided module (e.g., CCE, Cray MPICH, Cray Libsci). This is the best way to load the versions of modules from a specific CrayPE release.

Compatible ROCm & Cray MPICH versions

Compatibility between Cray MPICH and ROCm is required in order to use GPU-aware MPI.

Releases of cray-mpich are each compiled using a specific version of ROCm, and compatibility across multiple versions is not guaranteed.

OLCF will maintain compatible default modules when possible.

If using non-default modules, you can determine compatibility by reviewing the Product and OS Dependencies section in the cray-mpich release notes.

This can be displayed by running module show cray-mpich/<version>. If the notes indicate compatibility with AMD ROCM X.Y or later, only use rocm/X.Y.Z modules.

Note

If you are loading compatible ROCm and Cray MPICH versions but still getting errors, try setting MPICH_VERSION_DISPLAY=1 to verify the correct Cray MPICH version is being used at run-time. If it is not, verify you are prepending LD_LIBRARY_PATH with either $CRAY_LD_LIBRARY_PATH, or ${MPICH_DIR}/lib and ${CRAY_MPICH_ROOTDIR}/gtl/lib. This LD_LIBRARY_PATH modification is required to run with non-default modules.

The compatibility table below was determined by testing the linker and basic GPU-aware MPI functions with all current combinations of cray-mpich and ROCm modules on Frontier.

Alongside cray-mpich, we load the corresponding cpe module, which loads other important modules for MPI such as cray-pmi and craype.

It is strongly encouraged to load a cpe module when using non-default modules.

This ensures that all CrayPE-provided modules are compatible.

An asterisk indicates the latest officially supported version of ROCm for each cray-mpich version.

| cray-mpich | cpe | ROCm |
|---|---|---|
| 8.1.23 | 22.12 | 5.4.3, 5.4.0, 5.3.0* |
| 8.1.25 | 23.03 | 5.4.3, 5.4.0*, 5.3.0 |
| 8.1.26 | 23.05 | 5.7.1, 5.7.0, 5.6.0, 5.5.1*, 5.4.3, 5.4.0, 5.3.0 |
| 8.1.27 | 23.09 | 5.7.1, 5.7.0, 5.6.0, 5.5.1*, 5.4.3, 5.4.0, 5.3.0 |
| 8.1.28 | 23.12 | 5.7.1, 5.7.0*, 5.6.0, 5.5.1, 5.4.3, 5.4.0, 5.3.0 |
| 8.1.29 | 24.03 | 6.2.4, 6.2.0, 6.1.3, 6.0.0* |
| 8.1.30 | 24.07 | 6.2.4, 6.2.0, 6.1.3*, 6.0.0 |
| 8.1.31 | 24.11 | 6.3.1, 6.2.4, 6.2.0*, 6.1.3, 6.0.0 |
| 8.1.32 | 25.03 | 6.3.1, 6.2.4, 6.2.0*, 6.1.3, 6.0.0 |
| 9.0.1 | 25.09 | 6.4*, 6.3, 6.2, 6.1, 6.0 |

Note

OLCF recommends using the officially supported ROCm version (with asterisk) for each cray-mpich version.

Newer versions were tested using a sample of MPI operations and there may be undiscovered incompatibility.

Compatibility with other CrayPE-provided Software

The HPE/Cray Programming Environment (CrayPE) provides many libraries for use on Frontier, including the well-known libraries like Cray MPICH, Cray Libsci, and Cray FFTW.

CrayPE also has many modules that operate in the background and can easily be overlooked.

For example, the craype module provides the cc, CC, and ftn Cray compiler drivers.

These drivers are written to link to specific libraries (e.g., the ftn wrapper in the September 2023 PE links to libtcmalloc_minimal.so), which may not be needed by compiler versions other than the one they were released with.

For full compatibility of your loaded CrayPE environment, we strongly recommend loading the cpe module of your desired CrayPE release (the version is the last two digits of the year followed by the two-digit month, e.g., December 2024 is version 24.11).

For example, to load the December 2024 PE (CCE 18.0.1, Cray MPICH 8.1.31, ROCm 6.2.4 compatibility), you would run the following commands:

module load PrgEnv-cray

# Load the cpe module after your desired PrgEnv, but before rocm -- cpe may attempt to load a rocm version other than what you want

module load cpe/24.11

module load rocm/6.2.4

# Since these modules are not default, make sure to prepend CRAY_LD_LIBRARY_PATH to LD_LIBRARY_PATH

export LD_LIBRARY_PATH=${CRAY_LD_LIBRARY_PATH}:${LD_LIBRARY_PATH}
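One detail of the export above: if LD_LIBRARY_PATH is empty beforehand, the result ends with a trailing colon, and the dynamic loader treats an empty path element as the current directory. The sketch below shows one way to avoid that; the helper name `prepend_path` is hypothetical, and CRAY_LD_LIBRARY_PATH is assumed to be set by the loaded modules.

```shell
# Hypothetical helper: print "new:old", or just "new" when old is empty,
# so that no empty path element is introduced.
prepend_path() {
    new=$1
    old=$2
    printf '%s\n' "${new}${old:+:${old}}"
}

# Demonstration with placeholder directories:
prepend_path /opt/example/lib ""
prepend_path /opt/example/lib /usr/local/lib

# Possible use after loading non-default modules:
#   export LD_LIBRARY_PATH=$(prepend_path "$CRAY_LD_LIBRARY_PATH" "$LD_LIBRARY_PATH")
```

The `${old:+:${old}}` expansion adds the separating colon only when the existing value is non-empty.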

OpenMP

This section shows how to compile with OpenMP using the different compilers covered above.

| Vendor | Module | Language | Compiler | OpenMP flag (CPU thread) |
|---|---|---|---|---|
| Cray | | C, C++ | cc (wraps craycc), CC (wraps crayCC) | |
| | | Fortran | | -homp, -fopenmp (alias) |
| AMD | | C, C++, Fortran | cc (wraps amdclang), CC (wraps amdclang++), ftn (wraps amdflang) | |
| GCC | | C, C++, Fortran | cc (wraps $GCC_PATH/bin/gcc), CC (wraps $GCC_PATH/bin/g++), ftn (wraps $GCC_PATH/bin/gfortran) | |

OpenMP GPU Offload

This section shows how to compile with OpenMP Offload using the different compilers covered above.

Note

Make sure the craype-accel-amd-gfx90a module is loaded when using OpenMP offload.

| Vendor | Module | Language | Compiler | OpenMP flag (GPU) |
|---|---|---|---|---|
| Cray | | C, C++ | cc (wraps craycc), CC (wraps crayCC) | |
| | | Fortran | | -homp, -fopenmp (alias) |
| AMD | | C, C++, Fortran | cc (wraps amdclang), CC (wraps amdclang++), ftn (wraps amdflang), hipcc (requires flags below) | |

Note

If you invoke amdclang, amdclang++, or amdflang directly for OpenMP offload, or use hipcc, you will need to add the following flags:

-fopenmp -fopenmp-targets=amdgcn-amd-amdhsa -Xopenmp-target=amdgcn-amd-amdhsa -march=gfx90a

OpenACC

This section shows how to compile code with OpenACC. Currently only the Cray compiler supports OpenACC for Fortran. The AMD and GNU programming environments do not support OpenACC at all.

C and C++ support for OpenACC is provided by clacc, which maintains a fork of the LLVM compiler with added support for OpenACC. It can be obtained by loading the UMS modules ums, ums025, and clacc.

| Vendor | Module | Language | Compiler | Flags | Support |
|---|---|---|---|---|---|
| Cray | | C, C++ | No support | | |
| | | Fortran | | -h acc | Full support for OpenACC 2.0; partial support for OpenACC 2.x/3.x |
| UMS module | | C, C++ | | -fopenacc | |
| | | Fortran | No support | | |

HIP

This section shows how to compile HIP codes using the Cray compiler wrappers and hipcc compiler driver.

Note

Make sure the craype-accel-amd-gfx90a module is loaded when compiling HIP with the Cray compiler wrappers.

| Compiler | Compile/Link Flags, Header Files, and Libraries |
|---|---|
| CC (only with PrgEnv-cray or PrgEnv-amd) | CFLAGS = -std=c++11 -D__HIP_ROCclr__ -D__HIP_ARCH_GFX90A__=1 --rocm-path=${ROCM_PATH} --offload-arch=gfx90a -x hip; LFLAGS = --rocm-path=${ROCM_PATH} -L${ROCM_PATH}/lib -lamdhip64 |
| | Can be used directly to compile HIP source files. To see what is being invoked within this compiler driver, issue the command hipcc --verbose. To explicitly target AMD MI250X, use --offload-arch=gfx90a. |

Note

hipcc requires the ROCm Toolchain; see Exposing The ROCm Toolchain to your Programming Environment.

Note

Information about compiling code for different XNACK modes (which control page migration between GPU and CPU memory) can be found in the Compiling HIP kernels for specific XNACK modes section.

HIP + OpenMP CPU Threading

This section shows how to compile HIP + OpenMP CPU threading hybrid codes.

Note

Make sure the craype-accel-amd-gfx90a module is loaded when compiling HIP with the Cray compiler wrappers.

| Vendor | Compiler | Compile/Link Flags, Header Files, and Libraries |
|---|---|---|
| AMD/Cray | | CFLAGS = -std=c++11 -D__HIP_ROCclr__ -D__HIP_ARCH_GFX90A__=1 --rocm-path=${ROCM_PATH} --offload-arch=gfx90a -x hip -fopenmp; LFLAGS = --rocm-path=${ROCM_PATH} -fopenmp -L${ROCM_PATH}/lib -lamdhip64 |
| | | Can be used to directly compile HIP source files; add the -fopenmp flag to enable OpenMP threading. To explicitly target AMD MI250X, use --offload-arch=gfx90a. |
| GNU | | The GNU compilers cannot be used to compile HIP code, so all HIP kernels must be separated from CPU code. During compilation, all non-HIP files must be compiled with CC while HIP kernels must be compiled with hipcc. Then linking must be performed with the CC wrapper. NOTE: When using cmake, HIP code must currently be compiled using amdclang++ instead of hipcc. |

Note

hipcc requires the ROCm Toolchain; see Exposing The ROCm Toolchain to your Programming Environment.

SYCL

This section shows how to compile SYCL codes using the oneAPI DPC++ compiler.

Note

Setup and load the oneAPI and ROCm modules:

module use /sw/frontier/ums/ums015/modulefiles

module load oneapi/tbb oneapi/oclfpga oneapi/compiler-rt oneapi/compiler

module load rocm/5.4.3

Compiler |

Compile/Link Flags, Header Files, and Libraries |

|---|---|

|

|

Additional documentation on the DPC++ support for AMD can be found on Codeplay’s developer website, in particular the pages covering common optimizations or troubleshooting can be helpful.

Running Jobs

Computational work on Frontier is performed by jobs. Jobs typically consist of several components:

A batch submission script

A binary executable

A set of input files for the executable

A set of output files created by the executable

In general, the process for running a job is to:

Prepare executables and input files.

Write a batch script.

Submit the batch script to the batch scheduler.

Optionally monitor the job before and during execution.

The following sections describe in detail how to create, submit, and manage jobs for execution on Frontier. Frontier uses SchedMD’s Slurm Workload Manager as the batch scheduling system.

Login vs Compute Nodes

Recall from the System Overview that Frontier contains two node types: Login and Compute. When you connect to the system, you are placed on a login node. Login nodes are used for tasks such as code editing, compiling, etc. They are shared among all users of the system, so it is not appropriate to run tasks that are long/computationally intensive on login nodes. Users should also limit the number of simultaneous tasks on login nodes (e.g., concurrent tar commands, parallel make).

Compute nodes are the appropriate place for long-running, computationally-intensive tasks. When you start a batch job, your batch script (or interactive shell for batch-interactive jobs) runs on one of your allocated compute nodes.

Warning

Compute-intensive, memory-intensive, or other disruptive processes running on login nodes may be killed without warning.

Note

Unlike Summit and Titan, there are no launch/batch nodes on Frontier. This means your batch script runs on a node allocated to you rather than a shared node. You still must use the job launcher (srun) to run parallel jobs across all of your nodes, but serial tasks need not be launched with srun.

Simplified Node Layout

To easily visualize job examples (see Process and Thread Mapping Examples further below), the compute node diagram has been simplified to the picture shown below.

In the diagram, each physical core on a Frontier compute node is composed of two logical cores that are represented by a pair of blue and grey boxes. For a given physical core, the blue box represents the logical core of the first hardware thread, where the grey box represents the logical core of the second hardware thread.

Low-noise Mode Layout

Frontier uses low-noise mode and core specialization (-S flag at job allocation, e.g., sbatch). Low-noise mode constrains all system processes to core 0. Core specialization (by default, -S 8) reserves the first core in each L3 region. This prevents user processes from running on the core to which system processes are constrained. It also means that there are only 56 allocatable cores by default instead of 64. Therefore, this modifies the simplified node layout to:

To override this default layout (not recommended), set -S 0 at job allocation.
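The arithmetic behind the 56-core figure can be sketched as follows. The helper name `allocatable_cores` is hypothetical, and the sketch assumes 64 physical cores per node as described in this guide.

```shell
# Hypothetical helper: allocatable physical cores per node for a given -S value.
# Assumes 64 physical cores per node, as described in this guide.
allocatable_cores() {
    reserved=${1:-8}    # the default core specialization is -S 8
    echo $(( 64 - reserved ))
}

allocatable_cores      # default layout (-S 8)
allocatable_cores 0    # override with -S 0
```

With the default of -S 8 this yields 56 cores, and with -S 0 all 64 cores are allocatable.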

Slurm

Frontier uses SchedMD’s Slurm Workload Manager for scheduling and managing jobs. Slurm maintains similar functionality to other schedulers such as IBM’s LSF, but provides unique control of Frontier’s resources through custom commands and options specific to Slurm. A few important commands can be found in the conversion table below, but please visit SchedMD’s Rosetta Stone of Workload Managers for a more complete conversion reference.

Slurm documentation for each command is available via the man utility, and on the web at https://slurm.schedmd.com/man_index.html. Additional documentation is available at https://slurm.schedmd.com/documentation.html.

Some common Slurm commands are summarized in the table below. More complete examples are given in the Monitoring and Modifying Batch Jobs section of this guide.

| Command | Action/Task | LSF Equivalent |
|---|---|---|
| | Show the current queue | |
| | Submit a batch script | |
| | Submit an interactive job | |
| | Launch a parallel job | |
| | Show node/partition info | |
| | View accounting information for jobs/job steps | |
| | Cancel a job or job step | |
| | View or modify job configuration | |

Batch Scripts

The most common way to interact with the batch system is via batch scripts. A batch script is simply a shell script with added directives to request various resources from or provide certain information to the scheduling system. Aside from these directives, the batch script is simply the series of commands needed to set up and run your job.

To submit a batch script, use the command sbatch myjob.sl

Consider the following batch script:

1#!/bin/bash

2#SBATCH -A ABC123

3#SBATCH -J RunSim123

4#SBATCH -o %x-%j.out

5#SBATCH -t 1:00:00

6#SBATCH -p batch

7#SBATCH -N 1024

8

9cd $MEMBERWORK/abc123/Run.456

10cp $PROJWORK/abc123/RunData/Input.456 ./Input.456

11srun ...

12cp my_output_file $PROJWORK/abc123/RunData/Output.456

In the script, Slurm directives are preceded by #SBATCH, making them appear as comments to the shell. Slurm looks for these directives through the first non-comment, non-whitespace line. Options after that will be ignored by Slurm (and the shell).

| Line | Description |
|---|---|
| 1 | Shell interpreter line |
| 2 | OLCF project to charge |
| 3 | Job name |
| 4 | Job standard output file ( |
| 5 | Walltime requested (in |
| 6 | Partition (queue) to use |
| 7 | Number of compute nodes requested |
| 8 | Blank line |
| 9 | Change into the run directory |
| 10 | Copy the input file into place |
| 11 | Run the job ( add layout details ) |
| 12 | Copy the output file to an appropriate location |

Interactive Jobs

Most users will find batch jobs an easy way to use the system, as they allow you to “hand off” a job to the scheduler so you can focus on other tasks while the job waits in the queue and eventually runs. Occasionally, it is necessary to run interactively, especially when developing, testing, modifying, or debugging a code.

Since all compute resources are managed and scheduled by Slurm, it is not possible to simply log into the system and immediately begin running parallel codes interactively. Rather, you must request the appropriate resources from Slurm and, if necessary, wait for them to become available. This is done through an “interactive batch” job. Interactive batch jobs are submitted with the salloc command. Resources are requested via the same options that are passed via #SBATCH in a regular batch script (but without the #SBATCH prefix). For example, to request an interactive batch job with the same resources that the batch script above requests, you would use salloc -A ABC123 -J RunSim123 -t 1:00:00 -p batch -N 1024. Note there is no option for an output file…you are running interactively, so standard output and standard error will be displayed to the terminal.

Warning

Indicating your shell in your salloc command is NOT recommended (e.g., salloc ... /bin/bash). Doing so causes your compute job to start on a login node by default rather than automatically moving you to a compute node.

Common Slurm Options

The table below summarizes options for submitted jobs. Unless otherwise noted, they can be used for either batch scripts or interactive batch jobs. For scripts, they can be added on the sbatch command line or as a #SBATCH directive in the batch script. (If they’re specified in both places, the command line takes precedence.) This is only a subset of all available options. Check the Slurm Man Pages for a more complete list.

| Option | Example Usage | Description |
|---|---|---|
| | | Specifies the project to which the job should be charged |
| | | Request 1024 nodes for the job |
| | | Request a walltime of 4 hours. A walltime request is the maximum amount of time a job will run and can be specified as minutes, minutes:seconds, hours:minutes:seconds, days-hours, days-hours:minutes, or days-hours:minutes:seconds |
| | | Number of active hardware threads per core. Can be 1 or 2 (1 is default). Must be used if using --threads-per-core=2 in your srun command. |
| | | Specify job dependency (in this example, this job cannot start until job 12345 exits with an exit code of 0). See the Job Dependencies section for more information. |
| | | Request the burst buffer/NVMe on each node be made available for your job. See the Burst Buffers section for more information on using them. |
| | | Specify the job name (this will show up in queue listings) |
| | | File where job STDOUT will be directed (%j will be replaced with the job ID). If no -e option is specified, job STDERR will be placed in this file, too. |
| | | File where job STDERR will be directed (%j will be replaced with the job ID). If no -o option is specified, job STDOUT will be placed in this file, too. |
| | | Send email for certain job actions. Can be a comma-separated list. Actions include BEGIN, END, FAIL, REQUEUE, INVALID_DEPEND, STAGE_OUT, ALL, and more. |
| | | Email address to be used for notifications. |
| | | Instructs Slurm to run a job on nodes that are part of the specified reservation. |
| | | Instructs Slurm to reserve a specific number of cores per node (default is 8). Reserved cores cannot be used by the application. |
| | | Send the given signal to a job the specified number of seconds before the job reaches its walltime. The signal can be given by name or by number (e.g., both 10 and USR1 would send SIGUSR1). Signaling a job can be used, for example, to force a job to write a checkpoint just before Slurm kills the job (note that this option only sends the signal; the user must still make sure their job script traps the signal and handles it in the desired manner). When used with sbatch, the signal can be prefixed by “B:” (e.g., --signal=B:USR1@300) to tell Slurm to signal only the batch shell; otherwise all processes will be signaled. |
| | | Request a specific compute partition for the job. (default is |
| | | Request a “Quality of Service” (QOS) for the job. (default is |
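As noted for the --signal option in the table above, Slurm only delivers the signal; the batch script must trap it. The fragment below is a minimal sketch of such a trap, assuming the job was submitted with something like --signal=B:USR1@300. The handler name `on_usr1` and the flag variable are hypothetical, and the actual checkpoint logic is left to the application.

```shell
# Sketch of a batch-script fragment that reacts to a warning signal.
# Assumes submission with an option such as --signal=B:USR1@300.

checkpoint_requested=0

# Handler: only records the request; real checkpoint logic is application-specific.
on_usr1() {
    checkpoint_requested=1
    echo "USR1 received: checkpoint requested"
}

trap on_usr1 USR1

# In a real job script the application would typically be launched in the
# background and waited on, because the shell runs a trap handler only after
# the current foreground command finishes:
#   srun ... &
#   wait
```

Launching the application in the background and calling wait lets the batch shell respond to the signal promptly instead of only after the application exits.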

Slurm Environment Variables

Slurm reads a number of environment variables, many of which can provide the same information as the job options noted above. We recommend using the job options rather than environment variables to specify job options, as it allows you to have everything self-contained within the job submission script (rather than having to remember what options you set for a given job).

Slurm also provides a number of environment variables within your running job. The following table summarizes those that may be particularly useful within your job (e.g., for naming output log files):

| Variable | Description |
|---|---|
| | The directory from which the batch job was submitted. By default, a new job starts in your home directory. You can get back to the directory of job submission with |
| | The job’s full identifier. A common use for |
| | The number of nodes requested. |
| | The job name supplied by the user. |
| | The list of nodes assigned to the job. |
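One common use of such variables is building a per-job log file name. The sketch below assumes the standard Slurm variables SLURM_JOB_NAME and SLURM_JOB_ID are set inside a running job; the helper name `job_log_name` and the fallback values are hypothetical and exist only so the snippet also runs outside Slurm.

```shell
# Assumes SLURM_JOB_NAME and SLURM_JOB_ID are set by Slurm inside a job.
# The fallbacks ("nojob", 0) are placeholders for running outside Slurm.
job_log_name() {
    printf '%s-%s.log\n' "${SLURM_JOB_NAME:-nojob}" "${SLURM_JOB_ID:-0}"
}

# Example: write application output to a log named after the job.
#   srun ... > "$(job_log_name)"
job_log_name
```

Naming files after the job ID keeps output from repeated submissions of the same script from overwriting each other.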

Job States

A job will transition through several states during its lifetime. Common ones include:

| State Code | State | Description |
|---|---|---|
| CA | Canceled | The job was canceled (could’ve been by the user or an administrator) |
| CD | Completed | The job completed successfully (exit code 0) |
| CG | Completing | The job is in the process of completing (some processes may still be running) |
| PD | Pending | The job is waiting for resources to be allocated |
| R | Running | The job is currently running |

Job Reason Codes

In addition to state codes, jobs that are pending will have a “reason code” to explain why the job is pending. Completed jobs will have a reason describing how the job ended. Some codes you might see include:

| Reason | Meaning |
|---|---|
| Dependency | Job has dependencies that have not been met |
| JobHeldUser | Job is held at user’s request |
| JobHeldAdmin | Job is held at system administrator’s request |
| Priority | Other jobs with higher priority exist for the partition/reservation |
| Reservation | The job is waiting for its reservation to become available |
| AssocMaxJobsLimit | The job is being held because the user/project has hit the limit on running jobs |
| ReqNodeNotAvail | The job requested a particular node, but it’s currently unavailable (it’s in use, reserved, down, draining, etc.) |
| JobLaunchFailure | Job failed to launch (could be due to system problems, invalid program name, etc.) |
| NonZeroExitCode | The job exited with some code other than 0 |

Many other states and job reason codes exist. For a more complete description, see the squeue man page (either on the system or online).

Scheduling Policy

In a simple batch queue system, jobs run in a first-in, first-out (FIFO) order. This can lead to inefficient use of the system. If a large job is the next to run, a strict FIFO queue can cause nodes to sit idle while waiting for the large job to start. Backfilling would allow smaller, shorter jobs to use those resources that would otherwise remain idle until the large job starts. With the proper algorithm, they would do so without impacting the start time of the large job. While this does make more efficient use of the system, it encourages the submission of smaller jobs.

The DOE Leadership-Class Job Mandate

As a DOE Leadership Computing Facility, OLCF has a mandate that a large portion of Frontier’s usage come from large, leadership-class (a.k.a. capability) jobs. To ensure that OLCF complies with this directive, we strongly encourage users to run jobs on Frontier that are as large as their code will allow. To that end, OLCF implements queue policies that enable large jobs to run in a timely fashion.

Note

The OLCF implements queue policies that encourage the submission and timely execution of large, leadership-class jobs on Frontier.

The basic priority mechanism for jobs waiting in the queue is the time the job has been waiting in the queue. If your jobs require resources outside these policies such as higher priority or longer walltimes, please contact help@olcf.ornl.gov

Job Priority by Node Count

Jobs are aged according to the job’s requested node count (older age equals higher queue priority). Each job’s requested node count places it into a specific bin. Each bin has a different aging parameter, which all jobs in the bin receive.

| Bin | Min Nodes | Max Nodes | Max Walltime (Hours) | Aging Boost (Days) |
|---|---|---|---|---|
| 1 | 5,645 | 9,472 | 12.0 | 8 |
| 2 | 1,882 | 5,644 | 12.0 | 4 |
| 3 | 184 | 1,881 | 12.0 | 0 |
| 4 | 92 | 183 | 6.0 | 0 |
| 5 | 1 | 91 | 2.0 | 0 |
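The bin boundaries above can be expressed as a simple lookup. This is only an illustrative sketch with a hypothetical helper name (`job_bin`); the scheduler itself determines the bin, and the thresholds are copied from the table.

```shell
# Hypothetical helper: print the bin number for a requested node count,
# using the Min/Max Nodes columns of the table.
job_bin() {
    nodes=$1
    if [ "$nodes" -lt 1 ] || [ "$nodes" -gt 9472 ]; then
        echo "node count outside 1-9472: $nodes" >&2
        return 1
    elif [ "$nodes" -ge 5645 ]; then echo 1
    elif [ "$nodes" -ge 1882 ]; then echo 2
    elif [ "$nodes" -ge 184 ];  then echo 3
    elif [ "$nodes" -ge 92 ];   then echo 4
    else echo 5
    fi
}

job_bin 1024    # the 1024-node example script falls into bin 3
```

A job in bin 3 is therefore limited to a 12-hour walltime and receives no aging boost.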

batch Partition (queue) Policy

The batch partition (queue) is the default partition for production work on Frontier. Most work on Frontier is handled through this partition. The following policies are enforced for the batch partition:

Limit of four eligible-to-run jobs per user. (Jobs in excess of this number will be held, but will move to the eligible-to-run state and accumulate priority in the queue at the appropriate time.)

Users may have only 100 jobs queued across all partitions at any time (this includes jobs in all states); that is, jobs submitted to different partitions on Frontier are added together to check whether the total is within the 100-job limit. Additional jobs will be rejected at submit time.

extended Partition (queue) Policy

The extended partition (queue) is designated for smaller long-running jobs on Frontier. The following policies are enforced for the extended partition:

24-Hour maximum wall time for each queued job.

64-Node maximum job size for each queued job.

Each user will be allowed 1 running job and 1 eligible-to-run job at a given time. Any additional queued jobs will be held in an ineligible state until the previous job runs.

Users may have only 100 jobs queued across all partitions at any time (this includes jobs in all states); that is, jobs submitted to different partitions on Frontier are added together to check whether the total is within the 100-job limit. Additional jobs will be rejected at submit time.

debug Quality of Service Class

The debug quality of service (QOS) class can be used to access Frontier’s compute resources for short non-production debug tasks. The QOS provides a higher priority compared to jobs of the same job size bin in production partitions. Production work and job chaining using the debug QOS are prohibited. Each user is limited to one job in any state at any one time. Attempts to submit multiple jobs to this QOS will be rejected upon job submission. Submitted jobs in this QOS cannot have a walltime greater than 2 hours. Jobs that request a longer walltime will be rejected.

To submit a job to the debug QOS, add the -q debug option to your sbatch or salloc command or #SBATCH -q debug to your job script.

Allocation Overuse Policy

Projects that overrun their allocation are still allowed to run on OLCF systems, although at a reduced priority. Like the adjustment for the number of processors requested above, this is an adjustment to the apparent submit time of the job. However, this adjustment has the effect of making jobs appear much younger than jobs submitted under projects that have not exceeded their allocation. In addition to the priority change, these jobs are also limited in the amount of wall time that can be used. For example, consider that job1 is submitted at the same time as job2. The project associated with job1 is over its allocation, while the project for job2 is not. The batch system will consider job2 to have been waiting for a longer time than job1. Additionally, projects that are at 125% of their allocated time will be limited to only 3 running jobs at a time. The adjustment to the apparent submit time depends upon the percentage that the project is over its allocation, as shown in the table below:

| % of Allocation Used | Priority Reduction |
|---|---|
| < 100% | none |
| >=100% but <=125% | 30 days |
| > 125% | 365 days |
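The thresholds in the table can be sketched as a small lookup. The helper name `priority_reduction_days` is hypothetical, the input is assumed to be a whole-number percentage, and "none" is represented as 0 days.

```shell
# Hypothetical helper: priority reduction (in days) for a given percentage of
# the allocation used, following the table above. Input is a whole number.
priority_reduction_days() {
    pct=$1
    if [ "$pct" -lt 100 ]; then
        echo 0
    elif [ "$pct" -le 125 ]; then
        echo 30
    else
        echo 365
    fi
}

priority_reduction_days 110    # a project at 110% of its allocation
```

For a project at 110% of its allocation, jobs are treated as if they had been submitted 30 days later than they actually were.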

Node-Hour Calculation

The node-hour charge for each batch job will be calculated as follows:

node-hours = nodes requested * ( batch job endtime - batch job starttime )

Where batch job starttime is the time the job moves into a running state, and batch job endtime is the time the job exits a running state.

A batch job’s usage is calculated solely on requested nodes and the batch job’s start and end time. The number of cores actually used within any particular node within the batch job is not used in the calculation. For example, if a job requests (6) nodes through the batch script, runs for (1) hour, uses only (2) CPU cores per node, the job will still be charged for 6 nodes * 1 hour = 6 node-hours. Similarly, if a job requests (1) hour, but the job exits after (0.5) hours, then the job will only be charged for those (0.5) hours.
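The charge calculation above can be reproduced with a short helper. This is a sketch with a hypothetical function name (`node_hours`); it assumes start and end times are given in seconds and uses awk so that fractional hours are handled.

```shell
# Hypothetical helper: node-hours = nodes * (end - start), with times in seconds.
node_hours() {
    awk -v n="$1" -v s="$2" -v e="$3" \
        'BEGIN { printf "%.2f\n", n * (e - s) / 3600 }'
}

node_hours 6 0 3600    # 6 nodes for 1 hour
node_hours 6 0 1800    # 6 nodes, job exits after 0.5 hours
```

The first call corresponds to the 6 node-hour example above; the second shows that a job ending early is charged only for the time it actually ran (3 node-hours here).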

Viewing Usage

Utilization is calculated daily using batch jobs which complete between 00:00 and 23:59 of the previous day. For example, if a job moves into a run state on Tuesday and completes Wednesday, the job’s utilization will be recorded Thursday. Only batch jobs which write an end record are used to calculate utilization. Batch jobs which do not write end records due to system failure or other reasons are not used when calculating utilization. Jobs which fail because of run-time errors (e.g., the user’s application causes a segmentation fault) are counted against the allocation.

Each user may view usage for projects on which they are members from the command line tool showusage and the myOLCF site.

On the Command Line via showusage

The showusage utility can be used to view your usage from January 01 through midnight of the previous day. For example:

$ showusage

Usage:

Project Totals

Project Allocation Usage Remaining Usage

_________________|______________|___________|____________|______________

abc123 | 20000 | 126.3 | 19873.7 | 1560.80

The -h option will list more usage details.

On the Web via myOLCF

More detailed metrics may be found on each project’s usage section of the myOLCF site. The following information is available for each project:

YTD usage by system, subproject, and project member

Monthly usage by system, subproject, and project member

YTD usage by job size groupings for each system, subproject, and project member

Weekly usage by job size groupings for each system, and subproject

Batch system priorities by project and subproject

Project members

The myOLCF site is provided to aid in the utilization and management of OLCF allocations. See the myOLCF Documentation for more information.

If you have any questions or have a request for additional data, please contact the OLCF User Assistance Center.

System Reservation Policy

Projects may request to reserve a set of nodes for a period of time by contacting help@olcf.ornl.gov. If the reservation is granted, the reserved nodes will be blocked from general use for a given period of time. Only users that have been authorized to use the reservation can utilize those resources. Since no other users can access the reserved resources, it is crucial that groups given reservations take care to ensure the utilization on those resources remains high. To prevent reserved resources from remaining idle for an extended period of time, reservations are monitored for inactivity. If activity falls below 50% of the reserved resources for more than (30) minutes, the reservation will be canceled and the system will be returned to normal scheduling. A new reservation must be requested if this occurs.

The requesting project’s allocation is charged according to the time window granted, regardless of actual utilization. For example, an 8-hour, 2,000 node reservation on Frontier would be equivalent to using 16,000 Frontier node-hours of a project’s allocation.

Note

Reservations should not be confused with priority requests. If quick turnaround is needed for a few jobs or for a period of time, a priority boost should be requested. A reservation should only be requested if users need to guarantee availability of a set of nodes at a given time, such as for a live demonstration at a conference.

Job Dependencies

Oftentimes, a job will need data from some other job in the queue, but it’s nonetheless convenient to submit the second job before the first finishes. Slurm allows you to submit a job with constraints that will keep it from running until these dependencies are met. These are specified with the -d option to Slurm. Common dependency flags are summarized below. In each of these examples, only a single jobid is shown, but you can specify multiple job IDs as a colon-delimited list (e.g., #SBATCH -d afterok:12345:12346:12347). For the after dependency, you can optionally specify a +time value for each jobid.

| Flag | Meaning (for the dependent job) |
|---|---|
| | The job can start after the specified jobs start or are canceled. The optional |
| | The job can start after the specified jobs have ended (regardless of exit state) |
| | The job can start after the specified jobs terminate in a failed (non-zero) state |
| | The job can start after the specified jobs complete successfully (i.e. zero exit code) |
| | Job can begin after any previously-launched job with the same name and from the same user have completed. In other words, serialize the running jobs based on username+jobname pairs. |
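The colon-delimited form of a dependency can be assembled from a list of job IDs. The sketch below uses a hypothetical helper name (`build_dependency`); the commented usage assumes sbatch's --parsable option, which prints the job ID of a submitted job, and the script names are placeholders.

```shell
# Hypothetical helper: join a dependency type and job IDs with colons,
# e.g. "afterok 12345 12346" -> "afterok:12345:12346".
build_dependency() {
    dep=$1
    shift
    for id in "$@"; do
        dep="$dep:$id"
    done
    printf '%s\n' "$dep"
}

build_dependency afterok 12345 12346

# Possible use when chaining jobs (step1.sl and step2.sl are placeholders):
#   first=$(sbatch --parsable step1.sl)
#   sbatch -d "$(build_dependency afterok "$first")" step2.sl
```

Capturing the job ID at submission time avoids having to look it up in the queue before submitting the dependent job.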

Monitoring and Modifying Batch Jobs

scontrol hold and scontrol release: Holding and Releasing Jobs

Sometimes you may need to place a hold on a job to keep it from starting. For example, you may have submitted it assuming some needed data was in place but later realized that data is not yet available. This can be done with the scontrol hold command. Later, when the data is ready, you can release the job (i.e. tell the system that it’s now OK to run the job) with the scontrol release command. For example:

|

Place job 12345 on hold |

|

Release job 12345 (i.e. tell the system it’s OK to run it) |

scontrol update: Changing Job Parameters

There may also be occasions where you want to modify a job that’s waiting in the queue. For example, perhaps you requested 2,000 nodes but later realized this is a different data set and only needs 1,000 nodes. You can use the scontrol update command for this. For example:

|

Change job 12345’s node request to 1000 nodes |

|

Change job 12345’s max walltime to 4 hours |

scancel: Cancel or Signal a Job

In addition to the --signal option for the sbatch/salloc commands described above, the scancel command can be used to manually signal a job. Typically, this is used to remove a job from the queue. In this use case, you do not need to specify a signal and can simply provide the jobid (e.g., scancel 12345). If you want to send some other signal to the job, use scancel with the -s option. The -s option allows signals to be specified either by number or by name. Thus, if you want to send SIGUSR1 to a job, you would use scancel -s 10 12345 or scancel -s USR1 12345.

squeue: View the Queue

The squeue command is used to show the batch queue. You can filter the level of detail through several command-line options. For example:

squeue -l |

Show all jobs currently in the queue |

squeue -l -u $USER |

Show all of your jobs currently in the queue |

sacct: Get Job Accounting Information

The sacct command gives detailed information about jobs currently in the queue and recently-completed jobs. You can also use it to see the various steps within a batch job.

|

Show all jobs ( |

|

Show all of your jobs, and show the individual steps (since there was no |

|

Show all job steps that are part of job 12345 |

|

Show all of your jobs since 1 PM on July 1, 2022 using a particular output format |

scontrol show job: Get Detailed Job Information

In addition to holding, releasing, and updating the job, the scontrol command can show detailed job information via the show job subcommand. For example, scontrol show job 12345.

Srun

The default job launcher for Frontier is srun. The srun command is used to execute an MPI binary on one or more compute nodes in parallel.

Srun Format

srun [OPTIONS... [executable [args...]]]

Single Command (non-interactive)

$ srun -A <project_id> -t 00:05:00 -p <partition> -N 2 -n 4 --ntasks-per-node=2 ./a.out

<output printed to terminal>

The job name and output options have been removed since stdout/stderr are typically desired in the terminal window in this usage mode.

srun accepts the following common options:

|

Number of nodes |

|

Total number of MPI tasks (default is 1) |

|

Logical cores per MPI task (default is 1).

When used with --threads-per-core=1, -c is equivalent to physical cores per task.

By default, when -c > 1, additional cores per task are distributed within one L3 region first before filling a different L3 region. |

|

Bind tasks to CPUs.

threads - (default, recommended) Automatically generate masks binding tasks to threads. |

|

In task layout, use the specified maximum number of hardware threads per core (default is 1; there are 2 hardware threads per physical CPU core).

Must also be set in salloc or sbatch if using --threads-per-core=2 in your srun command. |

|

Specifies the distribution of MPI ranks across compute nodes, sockets (L3 regions), and cores, respectively.

The default values are block:cyclic:cyclic; see man srun for more information.

Currently, the distribution setting for cores (the third “<value>” entry) has no effect on Frontier. |

|

If used without -n: requests that a specific number of tasks be invoked on each node.

If used with -n: treated as a maximum count of tasks per node. |

|

Specify the number of GPUs required for the job (total GPUs across all nodes). |

|

Specify the number of GPUs per node required for the job. |

|

Binds each task to the GPU which is on the same NUMA domain as the CPU core the MPI rank is running on. |

|

Bind tasks to specific GPUs by setting GPU masks on tasks (or ranks) as specified where

|

|

Request that there are ntasks tasks invoked for every GPU. |
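The interaction between -N, -n, and --ntasks-per-node can be checked with simple arithmetic: when -n is given, --ntasks-per-node acts as a per-node maximum, so the total task count has to fit within nodes times tasks per node. The sketch below is only that arithmetic; fits_layout is a hypothetical helper, not a Slurm command, and it does not model any other scheduler constraint.

```shell
#!/bin/sh
# Sketch: does a total task count fit under a per-node maximum?
# fits_layout NODES NTASKS TASKS_PER_NODE   (hypothetical helper)
fits_layout() {
    nodes="$1"; ntasks="$2"; per_node="$3"
    capacity=$((nodes * per_node))
    if [ "$ntasks" -le "$capacity" ]; then
        echo "fits: $ntasks tasks <= $capacity (N=$nodes, tasks/node=$per_node)"
        return 0
    fi
    echo "does not fit: $ntasks tasks > $capacity (N=$nodes, tasks/node=$per_node)"
    return 1
}

# Matches the earlier example: -N 2 -n 4 --ntasks-per-node=2
fits_layout 2 4 2
# Nine tasks cannot fit on one node capped at eight tasks
fits_layout 1 9 8 || true
```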

Below is a comparison table between srun and jsrun.

| Option | jsrun (Summit) | srun (Frontier) |
|---|---|---|
| Number of nodes | | |
| Number of tasks | defined with resource set | |
| Number of tasks per node | defined with resource set | |
| Number of CPUs per task | defined with resource set | |
| Number of resource sets | | N/A |
| Number of resource sets per host | | N/A |
| Number of tasks per resource set | | N/A |
| Number of CPUs per resource set | | N/A |
| Number of GPUs per resource set | | N/A |
| Bind tasks to allocated CPUs | | |
| Performance binding preference | | |
| Specify the task to resource mapping pattern | | |

Process and Thread Mapping Examples

This section describes how to map processes (e.g., MPI ranks) and process threads (e.g., OpenMP threads) to the CPUs, GPUs, and NICs on Frontier.

Users are highly encouraged to use the CPU- and GPU-mapping programs used in the following sections to check their understanding of the job steps (i.e., srun commands) they intend to use in their actual jobs.

For the CPU Mapping and Multithreading sections:

A simple MPI+OpenMP “Hello, World” program (hello_mpi_omp) will be used to clarify the mappings.

For the GPU Mapping section:

An MPI+OpenMP+HIP “Hello, World” program (hello_jobstep) will be used to clarify the GPU mappings.

Additionally, it may be helpful to cross reference the simplified Frontier node diagram – specifically the low-noise mode diagram.

Warning

Unless specified otherwise, the examples below assume the default low-noise core specialization setting (-S 8). This means that there are only 56 allocatable cores by default instead of 64. See the Low-noise Mode Layout section for more details. Set -S 0 at job allocation to override this setting.
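The 56-core figure follows from reserving 8 of the 64 cores, one per L3 region. The sketch below lists the remaining cores per region. It assumes, as an illustration rather than a statement from this section, that the reserved core is the first core of each L3 region, which is consistent with the first usable hardware threads (001, 009, 017, ...) reported in the examples in this guide. allocatable_cores is a hypothetical helper.

```shell
#!/bin/sh
# Sketch: list the allocatable physical cores per L3 region under -S 8.
# Assumption: the first core of each 8-core L3 region is the reserved one.
allocatable_cores() {
    cores_per_l3=8
    l3_regions=8
    region=0
    while [ "$region" -lt "$l3_regions" ]; do
        first=$((region * cores_per_l3 + 1))                 # skip the reserved core
        last=$((region * cores_per_l3 + cores_per_l3 - 1))   # last core in the region
        printf 'L3 region %d: cores %03d-%03d\n' "$region" "$first" "$last"
        region=$((region + 1))
    done
}

allocatable_cores
```

Each of the 8 regions contributes 7 cores under this assumption, giving the 56 allocatable cores mentioned above.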

CPU Mapping

This subsection covers how to map tasks to the CPU without the presence of additional threads (i.e., solely MPI tasks – no additional OpenMP threads).

The intent with both of the following examples is to launch MPI ranks across the node (8 in the round-robin example, 7 in the packed example) where each rank is assigned its own logical (and, in this case, physical) core. Using the -m distribution flag, we will cover two common approaches to assigning the MPI ranks: a “round-robin” (cyclic) configuration and a “packed” (block) configuration. Slurm’s Interactive Jobs method was used to request an allocation of 1 compute node for these examples: salloc -A <project_id> -t 30 -p <partition> -N 1

Note

There are many different ways users might choose to perform these mappings, so users are encouraged to clone the hello_mpi_omp program and test whether or not processes and threads are running where intended.

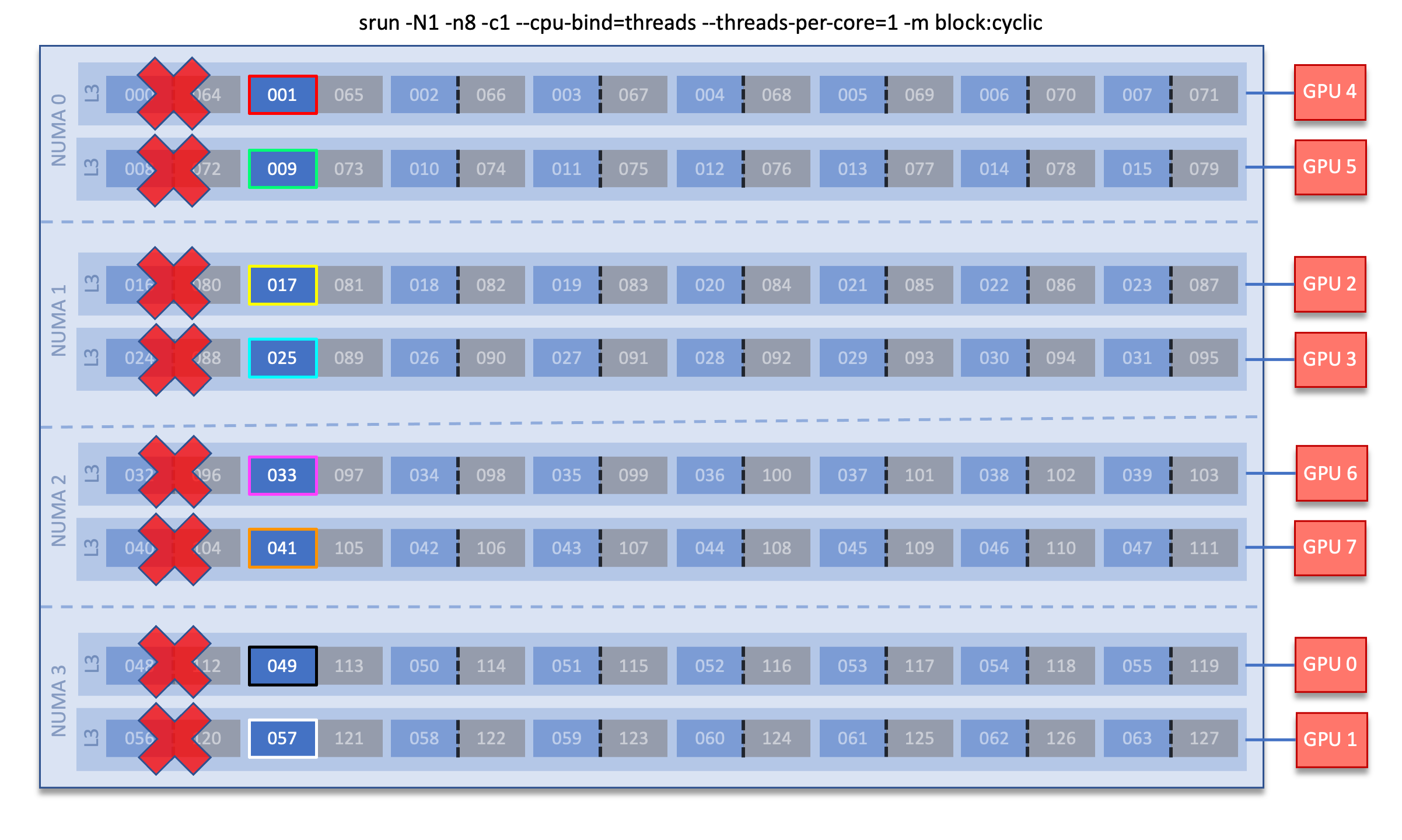

8 MPI Ranks (round-robin)

Assigning MPI ranks in a “round-robin” (cyclic) manner across L3 cache regions (sockets) is the default behavior on Frontier. This mode will assign consecutive MPI tasks to different sockets before it tries to “fill up” a socket.

Recall that the -m flag behaves like: -m <node distribution>:<socket distribution>. Hence, the key to achieving the round-robin layout is the -m block:cyclic flag, specifically the cyclic setting provided for the “socket distribution”. This ensures that the MPI tasks will be distributed across sockets in a cyclic (round-robin) manner.

The below srun command will achieve the intended 8 MPI “round-robin” layout:

$ export OMP_NUM_THREADS=1

$ srun -N1 -n8 -c1 --cpu-bind=threads --threads-per-core=1 -m block:cyclic ./hello_mpi_omp | sort

MPI 000 - OMP 000 - HWT 001 - Node frontier00144

MPI 001 - OMP 000 - HWT 009 - Node frontier00144

MPI 002 - OMP 000 - HWT 017 - Node frontier00144

MPI 003 - OMP 000 - HWT 025 - Node frontier00144

MPI 004 - OMP 000 - HWT 033 - Node frontier00144

MPI 005 - OMP 000 - HWT 041 - Node frontier00144

MPI 006 - OMP 000 - HWT 049 - Node frontier00144

MPI 007 - OMP 000 - HWT 057 - Node frontier00144

Breaking down the srun command, we have:

-N1: indicates we are using 1 node

-n8: indicates we are launching 8 MPI tasks

-c1: indicates we are assigning 1 logical core per MPI task. In this case, because of --threads-per-core=1, this also means 1 physical core per MPI task.

--cpu-bind=threads: binds tasks to threads

--threads-per-core=1: use a maximum of 1 hardware thread per physical core (i.e., only use 1 logical core per physical core)

-m block:cyclic: distribute the tasks in a block layout across nodes (default), and in a cyclic (round-robin) layout across L3 sockets

./hello_mpi_omp: launches the “hello_mpi_omp” executable

| sort: sorts the output

Note

Although the above command used the default settings -c1, --cpu-bind=threads, --threads-per-core=1 and -m block:cyclic, it is always better to be explicit with your srun command to have more control over your node layout. The above command is equivalent to srun -N1 -n8.

As you can see in the node diagram above, this results in the 8 MPI tasks (outlined in different colors) being distributed “vertically” across L3 sockets.

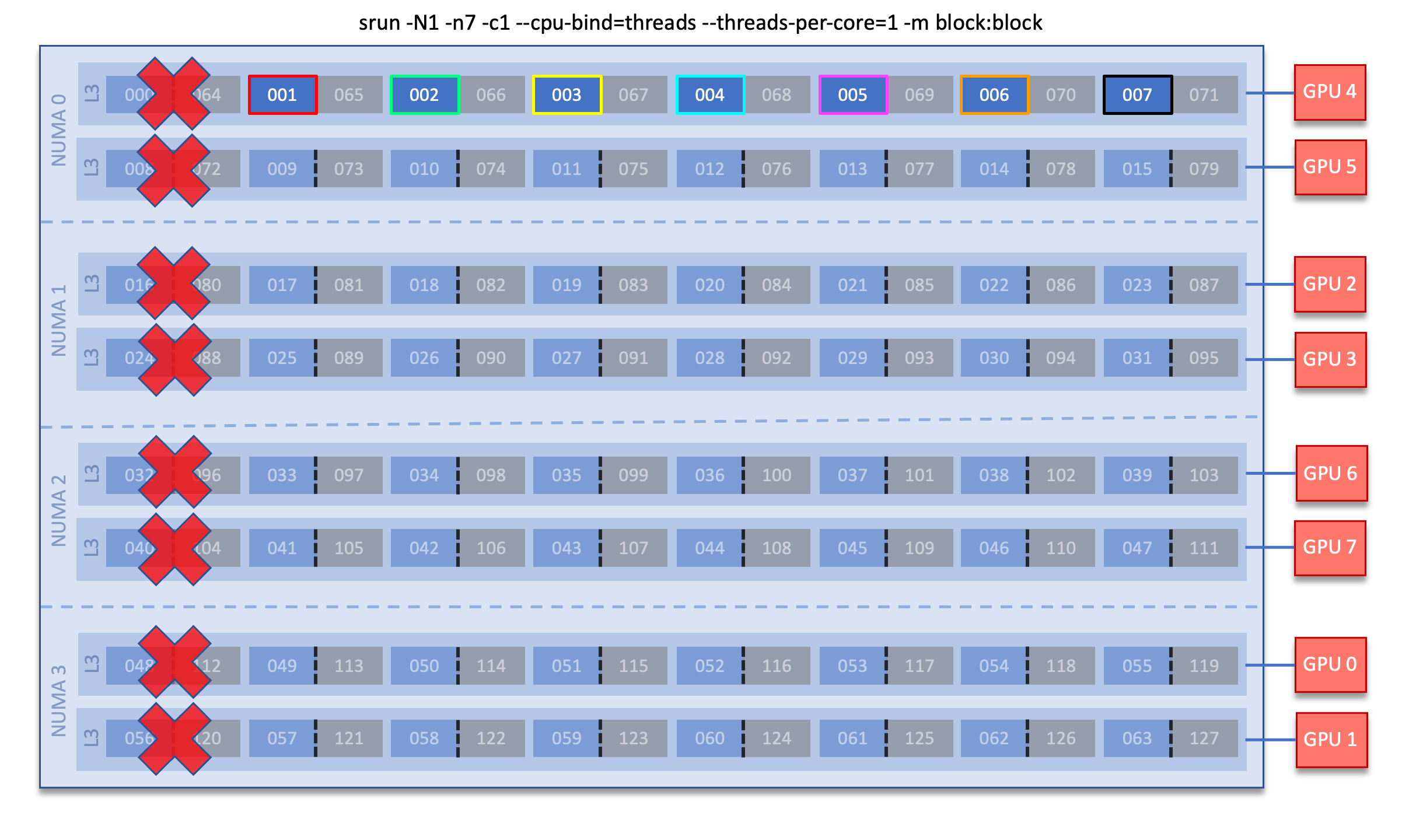
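The rank-to-core placement reported by hello_mpi_omp can be reproduced with a little arithmetic. The sketch below is a simplified model, not Slurm's actual placement algorithm: it assumes a single node, -c1, --threads-per-core=1, the default -S 8 setting with the first core of each L3 region reserved, and at most 56 ranks. expected_hwt is a hypothetical helper.

```shell
#!/bin/sh
# Sketch: predict the hardware thread for an MPI rank on one node.
# expected_hwt MODE RANK, where MODE is "cyclic" or "block" (hypothetical helper).
expected_hwt() {
    m="$1"; r="$2"
    l3_regions=8        # L3 cache regions ("sockets") per node
    cores_per_l3=8      # physical cores per L3 region
    usable_per_l3=7     # one core per region is reserved under -S 8
    case "$m" in
        cyclic)
            # consecutive ranks go to consecutive L3 regions
            region=$((r % l3_regions))
            slot=$((r / l3_regions))
            ;;
        block)
            # consecutive ranks fill one L3 region before the next
            region=$((r / usable_per_l3))
            slot=$((r % usable_per_l3))
            ;;
        *)
            echo "unknown mode: $m" >&2
            return 1
            ;;
    esac
    # +1 skips the reserved first core of the region (assumption)
    printf '%03d\n' $((region * cores_per_l3 + 1 + slot))
}

for rank in 0 1 2 3 4 5 6 7; do
    printf 'rank %d -> HWT %s\n' "$rank" "$(expected_hwt cyclic "$rank")"
done
```

For the cyclic case this yields hardware threads 001, 009, 017, and so on up to 057 for ranks 0 through 7. For the block case, the same model places ranks 0 through 6 on hardware threads 001 through 007, filling the first L3 region before moving to the next.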

7 MPI Ranks (packed)

Instead, you can assign MPI ranks so that the L3 regions are filled in a “packed” (block) manner. This mode will assign consecutive MPI tasks to the same L3 region (socket) until it is “filled up” or “packed” before assigning a task to a different socket.

Recall that the -m flag behaves like: -m <node distribution>:<socket distribution>. Hence, the key to achieving the packed layout is the -m block:block flag, specifically the block setting provided for the “socket distribution”. This ensures that the MPI tasks will be distributed in a packed manner.

The below srun command will achieve the intended 7 MPI “packed” layout:

$ export OMP_NUM_THREADS=1

$ srun -N1 -n7 -c1 --cpu-bind=threads --threads-per-core=1 -m block:block ./hello_mpi_omp | sort

MPI 000 - OMP 000 - HWT 001 - Node frontier00144

MPI 001 - OMP 000 - HWT 002 - Node frontier00144

MPI 002 - OMP 000 - HWT 003 - Node frontier00144

MPI 003 - OMP 000 - HWT 004 - Node frontier00144

MPI 004 - OMP 000 - HWT 005 - Node frontier00144

MPI 005 - OMP 000 - HWT 006 - Node frontier00144